
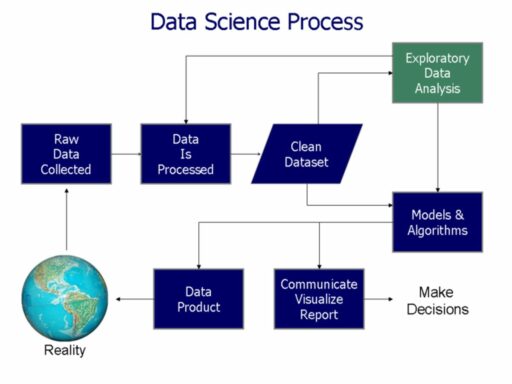

In the digital age, the ability to manipulate and manage data effectively is a critical skill for professionals across various industries. The article ‘Mastering the Art of Data Manipulation: Techniques and Tools for Effective Data Management’ aims to equip readers with the knowledge and tools necessary to handle data with confidence. From utilizing Pandas for efficient data manipulation to understanding the broad spectrum of data wrangling tools, this article covers essential techniques and best practices to streamline your data management processes.

Key Takeaways

- Leverage Pandas for efficient manipulation of DataFrame and Series objects to handle and analyze data effectively.

- Assess data wrangling needs considering volume, complexity, sources, automation, skill level, and system compatibility.

- Understand the categories of data wrangling tools, from spreadsheets to advanced data management systems for scalability.

- Utilize tutorials, online courses, and community forums to enhance your data manipulation skills through hands-on practice.

- Ensure scalability in data processes by adopting tools and techniques that grow with your organization’s data volume.

Efficient Data Manipulation with Pandas

Series Manipulation

A Pandas Series is a one-dimensional labeled array that can hold any data type, and it is a foundational tool for data manipulation in Python. Mastering Series manipulation is crucial for efficient data analysis. A Series can be created from a list, a dict, or a DataFrame column, and it comes with many methods for operations such as sorting, filtering, and replacing values.

For example, consider the following operations on a Series object:

- .head() and .tail() to view the first and last elements

- .sort_values() to organize data

- .apply() to apply a function to each item

- .value_counts() to count the occurrences of each unique value

Embracing these methods enhances your ability to quickly and effectively reshape and prepare data for analysis.
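The Series methods listed above can be sketched in a few lines. This is a minimal illustration, assuming a small made-up Series of order counts; the variable names are hypothetical and not part of the original article.

```python
import pandas as pd

# Hypothetical sample data: daily order counts.
orders = pd.Series([12, 7, 12, 30, 7, 12], name="orders")

first_two = orders.head(2)               # first two elements
last_two = orders.tail(2)                # last two elements
ordered = orders.sort_values()           # ascending sort, original index labels kept
doubled = orders.apply(lambda x: x * 2)  # apply a function to each item
counts = orders.value_counts()           # occurrences of each distinct value

print(counts)
```

Each call returns a new object and leaves `orders` unchanged, which makes it safe to chain or compare results while exploring.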

Understanding and utilizing the capabilities of Pandas Series is a stepping stone to more complex data manipulation tasks. As you progress, you’ll find that these skills are transferable to DataFrame operations, setting a strong foundation for any data-driven project.

Creating Columns

Adding new columns to a DataFrame is a critical step in structuring your data for analysis. This process allows you to enrich your dataset with new information or to transform existing data for better insight. For instance, you might add a calculated column based on other columns, or you might categorize continuous data into discrete bins.

Here are common methods for creating columns in Pandas:

- Using assignment to create a new column directly.

- Applying a function across rows or columns to generate a new column.

- Combining multiple columns to create a composite column.
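As a rough sketch of the three methods above, plus the binning of continuous data mentioned earlier, the following uses a made-up DataFrame. The column names and the bin edges are assumptions chosen only for illustration.

```python
import pandas as pd

# Hypothetical order data.
df = pd.DataFrame({
    "first": ["Ada", "Grace"],
    "last": ["Lovelace", "Hopper"],
    "price": [10.0, 250.0],
    "qty": [3, 2],
})

# 1. Direct assignment: a calculated column from existing columns.
df["total"] = df["price"] * df["qty"]

# 2. Applying a function to each value of a column.
df["order_size"] = df["total"].apply(lambda t: "large" if t >= 100 else "small")

# 3. Combining columns into a composite column.
df["full_name"] = df["first"] + " " + df["last"]

# Binning continuous data into discrete categories (edges are illustrative).
df["price_band"] = pd.cut(df["price"], bins=[0, 50, 500], labels=["low", "high"])

print(df[["full_name", "total", "order_size", "price_band"]])
```

Vectorized expressions such as the multiplication in step 1 are usually faster than `.apply()`, so `.apply()` is best kept for logic that cannot be expressed column-wise.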

Remember, the key to effective data manipulation is not just adding columns, but ensuring they add value to your analysis.

When creating columns, consider the following:

- The purpose of the new column and how it will be used.

- Data types and ensuring consistency across your DataFrame.

- Potential impacts on data volume and performance.

Summary Statistics

Mastering summary statistics is essential for any data professional. Understanding the central tendencies and variability in your data sets the stage for deeper analysis. Summary statistics provide a quick snapshot of the data’s overall shape and patterns, which can be critical for decision-making.

Here are some of the key summary statistics that one should be familiar with:

- Mean: The average value

- Median: The middle value in a sorted list

- Mode: The most frequently occurring value

- Standard Deviation: A measure of the amount of variation or dispersion

- Variance: The expectation of the squared deviation of a random variable from its mean

- Percentiles: Values below which a certain percentage of observations fall

By regularly employing these statistics, you can identify outliers, understand distribution, and make informed predictions. They are the building blocks for more complex analyses, such as regression or time-series forecasting.
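The statistics listed above map onto Pandas methods fairly directly. The sketch below assumes a small made-up Series; note that Pandas computes the sample standard deviation and variance (ddof=1) by default, so the population versions need `ddof=0`.

```python
import pandas as pd

# Hypothetical sample values.
values = pd.Series([2, 4, 4, 4, 5, 5, 7, 9])

mean = values.mean()            # average value
median = values.median()        # middle value of the sorted data
mode = values.mode().tolist()   # mode() returns every tied value, hence a list
std = values.std()              # sample standard deviation (ddof=1)
var = values.var()              # sample variance (ddof=1)
pop_std = values.std(ddof=0)    # population standard deviation
p90 = values.quantile(0.9)      # 90th percentile, linearly interpolated

print(mean, median, mode, pop_std, p90)
```

For a quick overview of several of these at once, `values.describe()` reports the count, mean, standard deviation, minimum, quartiles, and maximum in one call.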

Grouping, Pivoting, and Cross-Tabulation

Mastering the techniques of grouping, pivoting, and cross-tabulation in data manipulation can significantly enhance your ability to uncover patterns and relationships within your data. Grouping allows you to categorize data based on shared attributes, enabling targeted analysis on subsets of your dataset. Pivoting, on the other hand, transforms data to provide a new perspective, often making it easier to compare variables or time periods.

Cross-tabulation is a method to quantitatively analyze the relationship between multiple variables. By creating a matrix that displays the frequency distribution of variables, it becomes possible to observe the interactions between different data segments. Here’s a simple example of a cross-tabulated summary, with product types as rows and quarters as columns; the cells here hold sales totals rather than raw counts:

| Product Type | Sales Q1 | Sales Q2 |
|---|---|---|
| Electronics | 1200 | 1500 |
| Apparel | 800 | 950 |

When used effectively, these techniques can reveal complex data structures and trends that might otherwise remain hidden. They are essential for any data professional looking to deepen their understanding of their data’s story.

It’s important to approach these methods with a clear strategy, considering the specific questions you aim to answer and the best way to structure your data to facilitate those insights. Whether you’re working with sales figures, customer demographics, or operational metrics, grouping, pivoting, and cross-tabulation can be powerful allies in your data manipulation arsenal.
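To make the three techniques concrete, here is a minimal sketch that rebuilds the sales table above from long-format records. The record layout and column names are assumptions; only the four sales figures come from the table.

```python
import pandas as pd

# Hypothetical long-format records behind the sales table above.
sales = pd.DataFrame({
    "product_type": ["Electronics", "Electronics", "Apparel", "Apparel"],
    "quarter": ["Q1", "Q2", "Q1", "Q2"],
    "sales": [1200, 1500, 800, 950],
})

# Grouping: total sales per product type.
totals = sales.groupby("product_type")["sales"].sum()

# Pivoting: product types as rows, quarters as columns, summed sales in cells.
wide = sales.pivot_table(index="product_type", columns="quarter",
                         values="sales", aggfunc="sum")

# Cross-tabulation: number of records per (product type, quarter) pair.
freq = pd.crosstab(sales["product_type"], sales["quarter"])

print(wide)
```

By default `pd.crosstab` counts occurrences, which is the frequency view described above, while `pivot_table` aggregates a value column.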

Understanding Your Data Wrangling Needs

Assessing Data Volume and Complexity

Understanding the volume and complexity of your data is a foundational step in data manipulation. The sheer amount of data generated from various sources like social media, IoT devices, and online transactions can be overwhelming. It’s essential to evaluate not just the quantity but the diversity of data types and structures you’re dealing with.

- Volume: Refers to the sheer amount of data that needs to be processed.

- Variety: The different types of data, ranging from structured to unstructured.

- Complexity: The intricacies involved in data relationships, quality, and transformation requirements.
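One way to get a first impression of these three dimensions is a small profiling helper. The function below is a hypothetical sketch, not a standard Pandas feature, and the indicators it reports are only rough proxies for volume, variety, and complexity.

```python
import pandas as pd

def profile(df: pd.DataFrame) -> dict:
    """Rough, illustrative indicators of volume, variety, and data quality."""
    return {
        "rows": len(df),
        "columns": df.shape[1],
        "memory_bytes": int(df.memory_usage(deep=True).sum()),  # volume
        "dtypes": sorted({str(t) for t in df.dtypes}),           # variety
        "missing_cells": int(df.isna().sum().sum()),             # quality
        "duplicate_rows": int(df.duplicated().sum()),            # quality
    }

# Hypothetical data with a gap and a repeated row.
df = pd.DataFrame({"id": [1, 2, 2, 4], "city": ["Oslo", None, None, "Rome"]})
report = profile(df)
print(report)
```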

Effective data wrangling is not just about managing large volumes; it’s about transforming complex data into actionable insights.

Scalability is another critical aspect. As your organization grows, so does its data. Ensuring that your data wrangling processes can scale accordingly is vital to maintain data integrity and avoid a decline in the quality of insights. Begin by identifying the most relevant data and questions, and then consider the tools and skills required for efficient data management.

Identifying Data Sources and Integration

In the realm of data management, identifying and integrating data sources is a pivotal step towards a robust analytical framework. Data often resides in isolated silos within different departments, making it essential to not only recognize these sources but also to ensure their accuracy and relevance. The process involves standardizing formats, resolving discrepancies, and merging datasets to form a unified view that is crucial for in-depth analysis.

To streamline this process, consider the following steps:

- Determine the most relevant data and questions based on your needs.

- Assess the volume and variety of data to avoid overwhelming your teams.

- Identify the necessary tools, skills, and strategies for effective business intelligence integration.

Ensuring data quality is paramount. Implement regular data cleaning, validation, and enrichment processes to maintain data accuracy, completeness, and timeliness.

Integrating data from multiple sources, such as IoT devices, social media, and enterprise systems, can strain resources but is essential for comprehensive analysis. This integration allows for a more holistic view of the business landscape, which is indispensable for accurate and insightful analyses.
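The standardize, reconcile, and merge process described in this section might look like the following in Pandas. The two source tables, their column names, and the formatting problems are all invented for illustration.

```python
import pandas as pd

# Hypothetical extracts from two departmental systems.
crm = pd.DataFrame({"Customer ID": [" a1", "B2", "c3 "],
                    "region": ["North", "South", "East"]})
billing = pd.DataFrame({"customer_id": ["A1", "B2", "D4"],
                        "amount": ["100.50", "20", "7.25"]})

# Standardize formats: consistent column names, key casing, numeric types.
crm = crm.rename(columns={"Customer ID": "customer_id"})
crm["customer_id"] = crm["customer_id"].str.strip().str.upper()
billing["amount"] = pd.to_numeric(billing["amount"])

# Merge into a unified view; indicator=True adds a _merge column that
# flags keys present in only one source.
unified = crm.merge(billing, on="customer_id", how="outer", indicator=True)
unmatched = unified[unified["_merge"] != "both"]

print(unmatched)
```

Reviewing `unmatched` before analysis is a simple way to surface discrepancies between silos rather than silently dropping them, which is what an inner join would do.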

Automated vs. Bespoke Solutions

In the realm of data manipulation, the choice between automated and bespoke solutions is pivotal. Automated tools are designed to handle repetitive tasks efficiently, often incorporating AI and machine learning to adapt and improve over time. Bespoke solutions, on the other hand, are custom-built to meet specific needs, offering tailored functionality that automated tools may not provide.

- Automation Advantages:

  - Speed and consistency in data processing

  - Reduced human error

  - Scalability for large data sets

- Bespoke Benefits:

  - Customization to unique requirements

  - Flexibility to adapt to changes

  - Integration with specialized systems

While automated solutions can significantly accelerate data manipulation tasks, they may not always capture the nuanced needs of complex data scenarios. Bespoke solutions fill this gap by providing the precision and adaptability necessary for intricate data landscapes.

It’s essential to weigh the pros and cons of each approach in the context of your organization’s data strategy. Some data manipulation software, such as SolveXia, supports both standard reporting and the creation of custom reports, which shows how automation and customization can converge.

Skill Level and System Compatibility

When embarking on data manipulation tasks, it’s crucial to consider the skill level required and the compatibility with existing systems. For individuals and organizations alike, aligning the complexity of data tasks with the proficiency of the team ensures efficiency and accuracy.

- Assess the team’s familiarity with data manipulation languages like SQL, Python, or R.

- Determine if the current infrastructure supports the necessary tools and software.

- Evaluate the learning curve associated with new tools and the availability of training resources.

Ensuring that the data manipulation tools are compatible with your system’s architecture is essential for seamless integration and optimal performance.

Furthermore, it’s important to stay informed about the latest tools and techniques in data management. Regularly visiting educational websites, such as the SethT website, can provide valuable insights on data storage and efficient data collection strategies.

Categories of Data Wrangling Tools

Spreadsheet and Database Applications

In the realm of data manipulation, spreadsheet and database applications serve as the foundational tools for many professionals. Spreadsheets, like Microsoft Excel or Google Sheets, are accessible and straightforward, ideal for simple data tasks such as filtering, sorting, and basic computations. They offer advanced functions, pivot tables, and basic scripting to automate tasks, making them a staple in data management.

For those seeking to delve deeper into data relationships and manipulation, databases come into play. SQL databases, in particular, allow for the seamless integration and analysis of tabular data. Users can transfer data between SQL databases and Excel using CSV files, which is a critical skill for ensuring data integrity and facilitating complex analyses.
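A CSV round trip between a SQL database and a spreadsheet-friendly file can be sketched as follows. This assumes an in-memory SQLite database as a stand-in for a real SQL server and an in-memory buffer in place of a file on disk; the table and column names are hypothetical.

```python
import io
import sqlite3
import pandas as pd

# An in-memory SQLite database stands in for a production SQL server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, product TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                 [(1, "Laptop", 999.0), (2, "Shirt", 25.5)])

# Export: query into a DataFrame, then write CSV that Excel can open.
exported = pd.read_sql_query("SELECT * FROM orders ORDER BY id", conn)
buffer = io.StringIO()  # a file path would be used in practice
exported.to_csv(buffer, index=False)

# Import: read the CSV back and load it into a new SQL table.
buffer.seek(0)
imported = pd.read_csv(buffer)
imported.to_sql("orders_copy", conn, index=False)
row_count = conn.execute("SELECT COUNT(*) FROM orders_copy").fetchone()[0]
conn.close()

print(row_count)
```

CSV carries no type information, so it is worth checking column types after each import, particularly for dates and identifiers with leading zeros.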

To further enhance data manipulation capabilities, develop a strong foundational understanding of relational databases and SQL. This proficiency is not only about executing queries but also about planning a schema and modeling data relationships properly. Advanced SQL techniques and familiarity with relational databases like PostgreSQL are essential for intermediate to advanced users.

By mastering these tools, data professionals can ensure that their data processes are scalable and robust, ready to meet the challenges of an ever-evolving data landscape.

Data Manipulation and Transfer Techniques

In the realm of data management, efficient transfer and manipulation of data are pivotal to maintaining the integrity and utility of information. Data manipulation and transfer techniques encompass a broad range of operations, from simple tasks like selecting specific data ranges to more complex transformations.

For instance, implementing in-place operations is a delicate task that involves modifying data while preserving its original structure and meaning. This can include operations such as transposition, rotation, and reshaping of data sets. Seamless integration and analysis of tabular data are facilitated by transferring data between SQL databases and Excel using CSV files, which is a common practice in many organizations.

Mastery of data manipulation techniques is not only about understanding the tools but also about recognizing the appropriate method for each unique data scenario.

Understanding these techniques is crucial for anyone looking to unlock the power of data manipulation. Sorting and filtering data are two powerful techniques that can help you extract meaningful insights and should be part of any data professional’s skill set.
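The sorting, filtering, reshaping, and transposition operations mentioned in this section can be illustrated with a small made-up table. The region names and figures below are assumptions for the sake of the example.

```python
import pandas as pd

# Hypothetical quarterly figures per region.
df = pd.DataFrame({
    "region": ["North", "South", "East"],
    "q1": [120, 80, 95],
    "q2": [150, 60, 110],
})

# Filtering: keep rows that satisfy a boolean condition.
growing = df[df["q2"] > df["q1"]]

# Sorting: order rows by a column, largest first.
ranked = df.sort_values("q2", ascending=False)

# Reshaping: wide to long, one row per (region, quarter) pair.
long_form = df.melt(id_vars="region", var_name="quarter", value_name="sales")

# Transposition: swap rows and columns.
transposed = df.set_index("region").T

print(long_form)
```

All four calls return new objects, so the original `df` stays intact unless a result is assigned back to it.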

Foundational and Intermediate SQL Proficiency

Mastering SQL is a critical skill for effective data manipulation. Develop a strong foundational understanding of relational databases and SQL to unlock the potential of data analysis. Repeated practice with SQL queries is essential for achieving intermediate fluency and preparing for more advanced data manipulation techniques.

Proper planning and a clear model of data relationships are crucial. This understanding forms the backbone of efficient data management and allows for more sophisticated analysis and insights.

Advanced SQL techniques go beyond the basics, offering powerful ways to enhance data manipulation and analysis capabilities. Familiarity with relational database management systems like PostgreSQL is invaluable, providing deeper insights into data structuring and retrieval. Here’s a quick overview of key SQL concepts to master:

- SQL Syntax and Query Structure

- Join Operations and Data Relationships

- Subqueries and Nested Queries

- Aggregate Functions and Grouping Data

- Indexing and Performance Optimization
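Several of the concepts above can be tried out with Python's built-in sqlite3 module. The sketch below assumes two hypothetical tables, customers and orders; SQLite is used only because it needs no server, and the same SQL ideas carry over to systems such as PostgreSQL with minor dialect differences.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 70.0), (3, 2, 20.0);
    -- Indexing: can speed up lookups and joins on customer_id.
    CREATE INDEX idx_orders_customer ON orders (customer_id);
""")

# Join + aggregate function + grouping: total spend per customer.
totals = conn.execute("""
    SELECT c.name, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY total DESC
""").fetchall()

# Subqueries: customers whose total spend exceeds the average order amount.
above_avg = conn.execute("""
    SELECT name FROM customers
    WHERE id IN (
        SELECT customer_id FROM orders
        GROUP BY customer_id
        HAVING SUM(amount) > (SELECT AVG(amount) FROM orders)
    )
""").fetchall()
conn.close()

print(totals, above_avg)
```

The inner join drops Linus, who has no orders; a LEFT JOIN would keep him with a NULL total, which is often the first join subtlety worth practicing.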

Scalability and Advanced Data Management

As the volume and variety of data continue to expand, the need for scalable data management solutions becomes increasingly critical. Organizations must adapt their data wrangling tools and processes to handle the influx of data from diverse sources such as the internet, social media, and IoT devices.

Advanced data management is not just about handling more data; it’s about enabling more sophisticated analyses. Tools that facilitate advanced analytics and machine learning are essential for extracting valuable insights from large datasets. These tools often come with features that support collaboration and efficiency, such as shared workspaces, version control, and the ability to track and review changes.

The integration of data wrangling tools with advanced analytics capabilities, including predictive modeling and machine learning algorithms, is a game-changer. It allows for a seamless transition from data preparation to insightful analysis, ensuring that businesses can make faster, informed decisions in a competitive landscape.

To illustrate the importance of scalability and advanced features in data management tools, consider the following table outlining key aspects:

| Aspect | Importance |
|---|---|
| Volume Handling | Ensures management of large datasets |
| Variety Handling | Accommodates different data types and sources |
| Advanced Analytics | Enables sophisticated data analyses |
| Machine Learning Integration | Facilitates predictive modeling and insights |
| Collaboration Features | Supports teamwork and efficiency |

By mastering these aspects, organizations can ensure that their data management processes remain robust and agile, even as the data landscape evolves.

Best Practices and Learning Resources

Tutorials and Online Courses

In the journey to data mastery, online tutorials and courses are invaluable resources for both beginners and experienced practitioners. Websites like Simplilearn and SethT offer a diverse range of courses tailored to data wrangling, Python, R, and data visualization tools. These platforms often provide a self-paced model of education, allowing learners to balance their studies with other commitments.

Learners can also benefit from structured learning paths, such as:

- Certification Courses

- Live Virtual Classroom Training

- Self-Paced Learning Modules

Embracing a continuous learning mindset and leveraging these online resources can significantly accelerate your data manipulation skills.

It’s important to engage with the community through forums and to apply new skills to real-world datasets, which can be found on platforms like Kaggle. This hands-on approach is crucial for reinforcing learning and gaining practical experience.

Documentation and Community Forums

The journey to data manipulation mastery is greatly aided by the wealth of documentation and community forums available. These resources provide a treasure trove of knowledge, from basic concepts to advanced techniques.

- Documentation serves as the official guide to tools and languages, detailing functionalities, syntax, and best practices.

- Community forums are platforms where practitioners share experiences, solve problems, and offer insights. They are invaluable for staying updated with the latest trends and solutions.

Embrace these resources as they are instrumental in overcoming challenges and enhancing your data manipulation skills.

Remember, the key to leveraging these resources effectively is to actively engage with them. Ask questions, contribute answers, and participate in discussions to deepen your understanding and build a professional network within the data community.

Hands-On Practice with Real Data

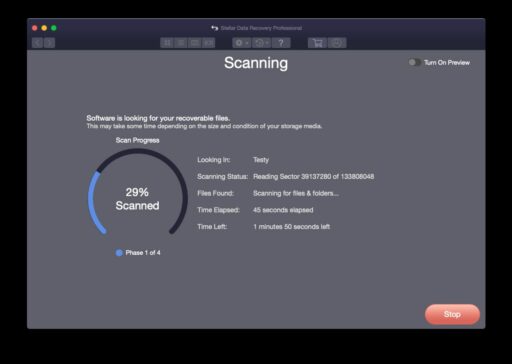

The true test of data manipulation skills comes when working with real-world datasets. It’s essential to apply the techniques learned from tutorials and courses to actual data to solidify your understanding and gain practical experience. Platforms like Kaggle offer a plethora of datasets across various domains, providing an excellent opportunity for hands-on practice. Here are some steps to get started:

- Choose a dataset that aligns with your interests or professional needs.

- Define a clear objective for your analysis or project.

- Clean and preprocess the data using the skills you’ve acquired.

- Perform exploratory data analysis to uncover patterns and insights.

- Share your findings with the community or stakeholders.
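The cleaning and exploration steps above might start out like this. The data is a small invented stand-in for a downloaded dataset, and the specific choices (median imputation, dropping exact duplicates) are assumptions that would depend on the objective defined in step two.

```python
import pandas as pd

# Hypothetical raw data standing in for a downloaded dataset.
raw = pd.DataFrame({
    "age": [34, None, 29, 29, 51],
    "plan": ["basic", "pro", "Pro ", "Pro ", "basic"],
    "spend": [20.0, 55.0, 60.0, 60.0, 18.0],
})

# Clean and preprocess: normalize text, drop duplicates, fill gaps.
clean = raw.copy()
clean["plan"] = clean["plan"].str.strip().str.lower()
clean = clean.drop_duplicates().reset_index(drop=True)
clean["age"] = clean["age"].fillna(clean["age"].median())

# Explore: overall summary and a per-group comparison.
summary = clean.describe()
spend_by_plan = clean.groupby("plan")["spend"].mean()

print(spend_by_plan)
```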

By engaging with real data, you not only enhance your technical skills but also develop a keen sense for data-driven decision making.

Remember, the journey to mastery involves continuous learning and application. Seek out datasets that challenge you and push the boundaries of your current skill set. Whether it’s for a personal project or a professional assignment, working with real data at a range of scales can provide a comprehensive understanding of data manipulation in practice.

Transformation and Data Manipulation Operations

Mastering data manipulation operations is essential for effective data management. These operations include a variety of techniques that are crucial for transforming raw data into a format that is suitable for analysis. Among the most common techniques are data smoothing, attribute construction, data generalization, data aggregation, and data discretization.
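Each of the techniques named above has a straightforward Pandas counterpart. The following is a minimal sketch on made-up sales rows; the window size, bin edges, and column names are illustrative assumptions, and a rolling mean is only one of several possible smoothing methods.

```python
import pandas as pd

# Hypothetical city-level sales rows.
df = pd.DataFrame({
    "city": ["Oslo", "Oslo", "Rome", "Rome"],
    "country": ["Norway", "Norway", "Italy", "Italy"],
    "units": [10, 20, 30, 60],
    "revenue": [100.0, 260.0, 300.0, 540.0],
})

# Smoothing: a 2-point rolling mean dampens short-term noise.
df["units_smooth"] = df["units"].rolling(window=2, min_periods=1).mean()

# Attribute construction: derive a new attribute from existing ones.
df["unit_price"] = df["revenue"] / df["units"]

# Discretization: map a continuous attribute to labeled intervals.
df["volume"] = pd.cut(df["units"], bins=[0, 25, 100], labels=["low", "high"])

# Generalization and aggregation: roll city-level rows up to country level.
by_country = df.groupby("country")["revenue"].sum()

print(by_country)
```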

Implementing in-place operations requires a careful balance between modifying the original data and maintaining the integrity of the information being processed.

Understanding the differences between data wrangling and ETL (Extract, Transform, Load) is also vital. Here’s a comparative overview:

| Feature | Data Wrangling | ETL |
|---|---|---|
| Definition | Cleaning, structuring, and enriching raw data | Extracting, transforming, and loading data |
| Primary Objective | Making data more suitable for analysis | Managing and preparing data for BI and analytics |

It’s important to note that while both data wrangling and ETL involve transformation operations, their approaches, tools, and objectives can differ significantly.

Data & Databases Training for Mastery

Comprehensive Data Visualization Tools

In the realm of data science, the ability to present data in a clear and impactful way is just as crucial as the ability to analyze it. Interactive visualizations have become a cornerstone of modern data presentation, allowing users to engage with data through dynamic plots and dashboards. Tools like Microsoft Power BI and Tableau lead the market, offering robust features for creating interactive narratives from complex datasets.

Advanced data visualization techniques not only enhance the interpretation of data but also aid in uncovering underlying patterns and trends.

When selecting a visualization tool, it’s important to consider the specific needs of your project. For instance, Plotly is well suited to interactive charts and Seaborn to static statistical plots. LightGBM and XGBoost, by contrast, are gradient-boosting libraries for modeling large, high-dimensional datasets rather than visualization tools. Below is a list of some of the top data visualization software of 2024, as highlighted by Forbes Advisor:

- Microsoft Power BI

- Tableau

- Qlik Sense

- Klipfolio

- Looker

- Zoho Analytics

- Domo

Each tool offers unique features and capabilities, making it essential to choose one that aligns with your data’s complexity and your team’s skill level.

Database Management and Querying

Mastering database management and querying is a cornerstone of effective data manipulation. Building and querying relational databases is a fundamental skill for data professionals. This involves learning SQL commands to create, query, sort, and update databases, as well as developing functions to automate these processes.

Entity Relationship Diagrams (ERDs) are crucial for planning and understanding data relationships within a database. Proper database design using ERDs ensures efficient data retrieval and management. Practicing SQL queries and exploring advanced techniques enhances one’s ability to manipulate and analyze data effectively.
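The create, query, sort, and update commands described above, together with a relationship of the kind an ERD would capture, can be sketched with Python's built-in sqlite3 module. The schema, the data, and the `give_raise` helper are all hypothetical and exist only to illustrate the idea.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves enforcement off by default

# Create: two related tables, a one-to-many link from departments to employees.
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        salary REAL,
        department_id INTEGER REFERENCES departments (id)
    );
    INSERT INTO departments VALUES (1, 'Data');
    INSERT INTO employees VALUES (1, 'Ada', 90000, 1), (2, 'Grace', 85000, 1);
""")

def give_raise(connection, employee_id, percent):
    """Hypothetical helper that automates a routine update."""
    connection.execute(
        "UPDATE employees SET salary = salary * (1 + ? / 100.0) WHERE id = ?",
        (percent, employee_id),
    )

give_raise(conn, 2, 10)

# Query and sort: highest salary first.
rows = conn.execute(
    "SELECT name, salary FROM employees ORDER BY salary DESC"
).fetchall()

# The foreign key rejects an employee that points at a missing department.
try:
    conn.execute("INSERT INTO employees VALUES (3, 'Linus', 70000, 99)")
    fk_enforced = False
except sqlite3.IntegrityError:
    fk_enforced = True
conn.close()

print(rows, fk_enforced)
```

Passing values through `?` placeholders rather than string formatting keeps the helper safe from SQL injection, which matters as soon as inputs come from users.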

Familiarity with SQL and its applications in database management is essential for data scientists. It allows for the efficient handling of tabular data and the development of robust data solutions.

Database administrators and data architects benefit greatly from strong data wrangling skills, which are necessary for maintaining secure, efficient, and reliable databases.

Customized Data Upskilling Programs

In the rapidly evolving field of data management, customized data upskilling programs are essential for staying competitive. These programs are tailored to meet the specific needs of individuals and organizations, ensuring that the skills acquired are directly applicable to their unique data challenges.

Web Age Solutions offers a range of upskilling and reskilling opportunities, including certification courses and live virtual classroom training. Their courses are designed to be flexible, with options like prepaid training credit and guaranteed-to-run courses, accommodating various learning styles and schedules.

By investing in customized training, professionals can master the necessary tools and techniques to effectively manipulate and analyze data, thereby enhancing their career prospects and contributing to their organization’s success.

For those seeking to refine their data skills, Web Age Solutions provides a comprehensive course catalog. Participants can learn to craft compelling data visualizations and manage databases such as Oracle, MongoDB, and Snowflake. If the existing courses do not align perfectly with your needs, Web Age Solutions is ready to create a bespoke data upskilling program just for you.

Ensuring Scalability in Data Processes

As organizations expand, the ability to scale data processes becomes critical to handle the increasing volume of data. Effective scalability ensures that the growth in data does not lead to a surge in errors or a decline in the quality of insights.

Scalability in data wrangling is about maintaining efficiency, reliability, and the integrity of data as the size of datasets grows.

To achieve this, businesses often rely on structured and automated tools that focus on efficiency and scalability. These tools are designed to handle large data volumes with minimal manual intervention, ensuring that data remains clean and structured for analysis or visualization. For instance, automated workflows can process data in batch or real-time, which is essential for applications that require immediate insights, such as fraud detection or customer feedback analysis.

Scaling must not come at the cost of integrity: in-place operations, which modify the original data rather than a copy, need particular care. Common techniques for scalable processing include:

- Batch processing for large datasets

- Real-time analytics for immediate insights

- Ensuring data quality and governance
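Batch processing, the first technique above, can be approximated in Pandas by reading a file in chunks. The sketch below assumes a tiny in-memory CSV in place of a large file on disk; the column names and the chunk size of 2 are illustrative only.

```python
import io
import pandas as pd

# Hypothetical CSV content; in practice this would be a large file on disk.
csv_data = io.StringIO(
    "account,amount\n"
    "a,10\n"
    "b,25\n"
    "a,5\n"
    "c,40\n"
    "b,15\n"
)

partials = []
chunks_seen = 0

# chunksize makes read_csv yield DataFrames of at most N rows each,
# so only one batch is held in memory at a time.
for chunk in pd.read_csv(csv_data, chunksize=2):
    partials.append(chunk.groupby("account")["amount"].sum())
    chunks_seen += 1

# Combine the per-batch results into final totals.
totals = pd.concat(partials).groupby(level=0).sum()

print(totals)
```

This pattern works for aggregations that can be combined from partial results, such as sums and counts; statistics like the median need a different approach.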

Advanced analytics and machine learning models thrive on structured, clean data. Data wrangling plays a pivotal role in transforming raw data into a format that is readily usable by these models, leading to more accurate and insightful outcomes.

Conclusion

Mastering the art of data manipulation is an ongoing journey that requires a deep understanding of both the theoretical concepts and practical tools. From the powerful DataFrame and Series objects in Pandas to the diverse range of data wrangling tools, we’ve explored techniques that enable efficient data organization, analysis, and interpretation. It’s essential to assess your needs, understand the volume and complexity of your data, and choose the right tools that fit your skill level and project requirements. Remember, the key to effective data management lies in continuous learning and practice. Utilize online courses, documentation, forums, and real-world datasets to refine your skills. As your organization grows, these practices will scale, helping you manage larger datasets with precision. Embrace the challenges of data manipulation and transfer, and develop your SQL proficiency to unlock the full potential of your data.

Frequently Asked Questions

What is data manipulation with Pandas and why is it important?

Data manipulation with Pandas involves using its DataFrame and Series objects to handle and analyze data efficiently. It’s important because it allows for effective organization, analysis, and insight discovery within datasets.

How do I assess my data wrangling needs?

Assess data volume and complexity, identify data sources, decide between automated or bespoke solutions, and consider the skill level and system compatibility to determine your data wrangling requirements.

What are some common data wrangling tools?

Data wrangling tools range from spreadsheet applications to advanced data science platforms. They include Excel, SQL databases, data manipulation software, and data visualization tools like Power BI and Tableau.

What are some best practices for learning data manipulation?

Best practices include taking tutorials and online courses, utilizing documentation and community forums, and practicing with real data to apply the concepts and operations learned in a practical context.

How can I ensure scalability in my data processes?

To ensure scalability, adopt data wrangling practices and tools that can handle increasing volumes of data efficiently, without compromising on the quality of insights or increasing errors.

Where can I find resources for mastering data and database management?

You can find resources through online course catalogs offering training in data visualization tools, database management, and querying. Customized data upskilling programs are also available to meet specific needs.