
Data warehousing is a critical facet of modern business intelligence, providing a structured environment for aggregating, storing, and analyzing vast amounts of data from various sources. As businesses increasingly rely on data-driven decision-making, understanding the essentials of data warehousing becomes indispensable. This article delves into the core aspects of data warehousing, from its fundamental principles to advanced concepts and real-world applications, equipping professionals with the knowledge to master data management within their organizations.

Key Takeaways

- Data warehousing is essential for aggregating and analyzing data from multiple sources, enabling informed business decisions.

- Understanding different architectural strategies, including traditional and lakehouse architectures, is key to effective data management.

- AWS provides a comprehensive suite of data services, with Amazon Redshift being a pivotal solution for data warehousing needs.

- Advanced data warehousing concepts such as ETL processes, data governance, and performance tuning are crucial for optimizing data workflows.

- Real-world case studies demonstrate the transformative impact of data warehousing across various industries and highlight future trends.

Understanding Data Warehousing Fundamentals

Defining Data Warehousing and Its Importance

Data warehousing is a critical component in the realm of data management, serving as a centralized repository for data collected from various sources. Its importance lies in the ability to aggregate and store vast amounts of structured and semi-structured data, enabling comprehensive analysis and informed decision-making.

- Data warehousing facilitates the integration of data from multiple sources, ensuring consistency and accuracy.

- It supports historical data storage, allowing for trend analysis over time.

- The structured environment of a data warehouse enhances query performance and simplifies reporting.
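Historical storage is often implemented with a Type 2 slowly changing dimension. When an attribute changes, the current row is closed out and a new version is appended, so past states stay queryable. The Python sketch below illustrates that pattern under simple assumptions (one tracked attribute, rows held in memory). `DimRow`, `apply_scd2`, and `city_as_of` are hypothetical names, and this is one common technique rather than the only way warehouses retain history.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DimRow:
    customer_id: int
    city: str
    valid_from: date
    valid_to: Optional[date] = None  # None marks the current version

    @property
    def is_current(self) -> bool:
        return self.valid_to is None


def apply_scd2(dim: list[DimRow], customer_id: int, city: str, as_of: date) -> None:
    """Record a new attribute value without overwriting the old one (SCD Type 2)."""
    current = next(
        (r for r in dim if r.customer_id == customer_id and r.is_current), None
    )
    if current is not None:
        if current.city == city:
            return  # nothing changed, keep the existing version
        current.valid_to = as_of  # close out the old version
    dim.append(DimRow(customer_id, city, valid_from=as_of))


def city_as_of(dim: list[DimRow], customer_id: int, when: date) -> Optional[str]:
    """Point-in-time lookup: which value was valid on `when`?"""
    for r in dim:
        if (
            r.customer_id == customer_id
            and r.valid_from <= when
            and (r.valid_to is None or when < r.valid_to)
        ):
            return r.city
    return None


if __name__ == "__main__":
    dim: list[DimRow] = []
    apply_scd2(dim, 1, "Berlin", date(2022, 1, 1))
    apply_scd2(dim, 1, "Munich", date(2023, 6, 1))
    print(city_as_of(dim, 1, date(2022, 12, 31)))  # Berlin
    print(city_as_of(dim, 1, date(2024, 1, 1)))    # Munich
```

Because old rows are closed rather than deleted, a trend report can join facts to the dimension version that was valid when each fact occurred.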

Data warehousing is not just about storage; it’s about turning data into a strategic asset that can drive business intelligence and analytics. By providing a unified view of an organization’s data, it lays the foundation for extracting actionable insights that can lead to competitive advantages.

The evolution of data warehousing has led to the emergence of the lakehouse concept, which combines the strengths of data lakes and warehouses. This approach can streamline architectures and reduce costs while maintaining the flexibility and performance required for modern multi-cloud environments.

Components of a Data Warehouse

A data warehouse is a centralized repository that stores integrated data from multiple sources. Data warehouses are designed to support decision making by providing a stable platform for data analysis and reporting. The core components of a data warehouse include:

- Data Warehouse Database: The central component where data is stored after being cleaned and processed.

- Sourcing, Acquisition, Cleanup, and Transformation Tools (ETL): These tools are responsible for extracting data from source systems, transforming it into a consistent format, and loading it into the data warehouse.

- Metadata: This refers to data about the data, which helps in understanding and managing the data warehouse’s contents.

- Query Tools: These are used to retrieve and analyze data from the data warehouse.

- Data Marts: Subsets of the data warehouse, often oriented towards a specific business line or team.

A well-structured data warehouse is pivotal for efficient data retrieval and analysis, ensuring that businesses can leverage their data to gain competitive advantages.

Each component plays a crucial role in the overall functionality and efficiency of a data warehouse. Without proper sourcing and ETL processes, data cannot be effectively integrated. Metadata is essential for maintaining the data’s context and lineage, while query tools and data marts facilitate targeted data exploration and reporting.
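The components above can be sketched in miniature with Python's built-in `sqlite3` module standing in for the warehouse database. This is an illustration only: SQLite is a transactional engine, not a warehouse, and the table names (`dim_product`, `fact_sales`, `meta_tables`, `mart_sales_by_category`) are hypothetical. The sketch shows a star schema (a fact table keyed to a dimension table), a small metadata table describing the tables, and a data mart exposed as a view for one business line.

```python
import sqlite3


def build_warehouse() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- Dimension table: descriptive attributes
        CREATE TABLE dim_product (
            product_id INTEGER PRIMARY KEY,
            name       TEXT NOT NULL,
            category   TEXT NOT NULL
        );

        -- Fact table: measurable events keyed to dimensions
        CREATE TABLE fact_sales (
            sale_id    INTEGER PRIMARY KEY,
            product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
            sale_date  TEXT NOT NULL,  -- ISO-8601 date
            amount     REAL NOT NULL
        );

        -- Metadata: data about the data (description, owner)
        CREATE TABLE meta_tables (
            table_name  TEXT PRIMARY KEY,
            description TEXT,
            owner       TEXT
        );

        -- Data mart: a subject-specific slice for, say, a merchandising team
        CREATE VIEW mart_sales_by_category AS
        SELECT p.category, SUM(f.amount) AS revenue, COUNT(*) AS orders
        FROM fact_sales f JOIN dim_product p USING (product_id)
        GROUP BY p.category;
        """
    )
    conn.executemany(
        "INSERT INTO dim_product VALUES (?, ?, ?)",
        [(1, "Laptop", "Electronics"), (2, "Desk", "Furniture")],
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
        [(1, 1, "2024-01-05", 1200.0), (2, 1, "2024-01-09", 1100.0),
         (3, 2, "2024-02-01", 300.0)],
    )
    conn.executemany(
        "INSERT INTO meta_tables VALUES (?, ?, ?)",
        [("fact_sales", "One row per sale", "finance"),
         ("dim_product", "Product catalog", "merchandising")],
    )
    return conn


if __name__ == "__main__":
    conn = build_warehouse()
    # A query tool would read the mart rather than the raw fact table
    for row in conn.execute("SELECT * FROM mart_sales_by_category ORDER BY category"):
        print(row)
```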

Data Warehousing and Business Intelligence (BI)

Data warehousing serves as the backbone of business intelligence (BI) by providing a centralized repository for data collected from various sources. Integrating the warehouse with BI tools enables organizations to transform raw data into actionable insights, and this synergy is crucial for making informed decisions that drive business growth and efficiency.

- Data warehousing centralizes data storage.

- BI tools analyze and report on data.

- Together, they support data-driven decision-making.

The lakehouse architecture is emerging as a solution that combines the benefits of data lakes and warehouses. It simplifies the data management landscape by unifying data storage and analysis, which can lead to cost reductions and increased agility in BI processes.

The relationship between data warehousing and BI is also shaped by the changing needs of data engineers. As their workflows evolve, the ability to integrate the warehouse with data lakes becomes more significant, because that integration supports the flexible, high-performance multi-cloud architectures that modern data engineering increasingly relies on.

Architectural Strategies for Data Warehousing

Traditional vs. Lakehouse Architecture

The evolution of data storage and management has led to the emergence of data lakehouses, which blend the flexibility of data lakes with the structure and reliability of traditional data warehouses. Data lakes, known for their ability to store massive volumes of data in various formats, offer modern, versatile, and cost-effective solutions. They separate storage and compute, allowing organizations to scale each independently and save on costs.

In contrast, traditional data warehouses provide highly structured environments optimized for analytical queries and consistent performance. However, they often come with higher costs and less flexibility in handling unstructured data. Lakehouse architecture aims to offer the best of both worlds, supporting not only structured analytics but also advanced data science and machine learning workloads.
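Open table formats used in lakehouses (Delta Lake and Apache Iceberg are common examples) add warehouse-style reliability to files in a data lake by keeping an ordered log of commits next to the data files. The sketch below is a heavily simplified, in-memory illustration of that idea, not how any specific format is implemented: each commit records which files were added or removed, and a reader replays the log to reconstruct the table as of any version, which also gives a basic form of time travel. `TableLog` and its methods are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Commit:
    version: int
    added: list[str]
    removed: list[str]


@dataclass
class TableLog:
    """Ordered commit log kept alongside immutable data files (hypothetical)."""
    commits: list[Commit] = field(default_factory=list)

    def commit(self, added=(), removed=()) -> int:
        # Each commit is a single log entry, so a reader sees all of it or none of it
        version = len(self.commits)
        self.commits.append(Commit(version, list(added), list(removed)))
        return version

    def snapshot(self, version: Optional[int] = None) -> set[str]:
        """Replay the log up to `version` to find the live data files."""
        if version is None:
            version = len(self.commits) - 1
        files: set[str] = set()
        for c in self.commits[: version + 1]:
            files.update(c.added)
            files.difference_update(c.removed)
        return files


if __name__ == "__main__":
    log = TableLog()
    log.commit(added=["part-000.parquet"])
    log.commit(added=["part-001.parquet"])
    # Compaction: swap two small files for one larger file in a single commit
    log.commit(added=["part-002.parquet"],
               removed=["part-000.parquet", "part-001.parquet"])
    print(sorted(log.snapshot()))   # ['part-002.parquet']
    print(sorted(log.snapshot(1)))  # ['part-000.parquet', 'part-001.parquet']
```

Real formats also handle concurrent writers, schema evolution, and checkpointing of long logs, none of which this sketch attempts.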

The lakehouse paradigm is increasingly adopted for its ability to accommodate diverse data types and use cases, while also simplifying data governance and management.

While each architecture has its merits, the choice between a traditional data warehouse, a data lake, or a lakehouse depends on the specific needs and strategy of an organization. Below is a comparison of key attributes:

- Data Warehouse: Structured, analytical (OLAP), performance-optimized

- Data Lake: Unstructured/raw, scalable, cost-efficient

- Lakehouse: Hybrid, versatile, governance-friendly
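One practical difference behind this comparison is when structure is enforced. A warehouse typically validates records as they are written (schema-on-write), while a lake stores raw records and applies structure when they are read (schema-on-read). The Python sketch below contrasts the two; the `SCHEMA` dictionary and the function names are hypothetical.

```python
import json

# Expected columns and types for the structured (warehouse) table
SCHEMA = {"order_id": int, "amount": float}


def write_to_warehouse(table: list[dict], record: dict) -> None:
    """Schema-on-write: reject non-conforming records before storing them."""
    for col, typ in SCHEMA.items():
        if not isinstance(record.get(col), typ):
            raise ValueError(f"column {col!r} must be {typ.__name__}")
    table.append({col: record[col] for col in SCHEMA})  # keep only schema columns


def write_to_lake(lake: list[str], raw: str) -> None:
    """Lake: store the raw payload as-is, whatever its shape."""
    lake.append(raw)


def read_from_lake(lake: list[str]) -> list[dict]:
    """Schema-on-read: parse and project at query time, skipping bad records."""
    rows = []
    for raw in lake:
        try:
            obj = json.loads(raw)
            rows.append({"order_id": int(obj["order_id"]),
                         "amount": float(obj["amount"])})
        except (ValueError, KeyError, TypeError):
            continue  # malformed record: tolerated in storage, dropped on read
    return rows
```

The trade-off is visible in the code: the warehouse path fails fast and keeps stored data clean, while the lake path accepts anything and pushes the cost of interpretation onto every reader.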

Data Management and Integration Techniques

In the realm of data warehousing, data management and integration are pivotal for consolidating disparate data sources into a cohesive and accessible repository. Data integration involves the blending of data from various origins, such as transaction systems, external data sources, and cloud-based platforms, to create a unified view that can be leveraged for analytics and business intelligence.

Effective data integration techniques are essential for ensuring data quality and integrity. These techniques include:

- Data virtualization, which provides a real-time or near-real-time view of data from multiple sources without the need to replicate it.

- ETL processes, which involve extracting data from source systems, transforming it into a suitable format, and loading it into the data warehouse.

- Data governance initiatives, which establish policies and procedures to manage data access, usage, and security.
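Data virtualization is the least familiar of these techniques, so here is a small sketch of the idea: a virtual view that queries each source at read time and combines the results, instead of first copying the data into the warehouse. Two in-memory SQLite databases stand in for separate source systems; `crm`, `billing`, and `customer_revenue_view` are hypothetical names. Production virtualization layers add query pushdown optimization, caching, and security on top of this basic pattern.

```python
import sqlite3


def make_sources() -> tuple[sqlite3.Connection, sqlite3.Connection]:
    """Two independent 'systems' that the virtual view will read from."""
    crm = sqlite3.connect(":memory:")
    crm.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    crm.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])

    billing = sqlite3.connect(":memory:")
    billing.execute("CREATE TABLE invoices (customer_id INTEGER, total REAL)")
    billing.executemany(
        "INSERT INTO invoices VALUES (?, ?)", [(1, 100.0), (1, 50.0), (2, 75.0)]
    )
    return crm, billing


def customer_revenue_view(crm: sqlite3.Connection,
                          billing: sqlite3.Connection) -> list[tuple[str, float]]:
    """Combine both sources at query time; nothing is persisted or replicated."""
    names = dict(crm.execute("SELECT id, name FROM customers"))
    # Push the aggregation down to the source that owns the data
    totals = billing.execute(
        "SELECT customer_id, SUM(total) FROM invoices GROUP BY customer_id"
    )
    return sorted((names[cid], total) for cid, total in totals if cid in names)


if __name__ == "__main__":
    crm, billing = make_sources()
    print(customer_revenue_view(crm, billing))
```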

By optimizing data schemas and considering scalability, organizations can handle large volumes of data efficiently, maintaining performance even as data grows.

The table below summarizes key aspects of data management and integration:

| Technique | Description | Benefits |
|---|---|---|
| Data Virtualization | Real-time data aggregation | Reduces replication |
| ETL Processes | Structured data transformation | Enhances data quality |
| Data Governance | Policy and procedure management | Ensures data security |

Optimizing for Performance and Scalability

Optimizing a data warehouse for performance and scalability is crucial for handling large volumes of data and complex queries. Proper resource allocation and query optimization are key factors in achieving a responsive and efficient system. Here are some strategies to consider:

- Resource Allocation: Assigning adequate resources to different tasks ensures that the data warehouse can handle the workload. This includes CPU, memory, and storage considerations.

- Query Optimization: Writing efficient queries can significantly reduce the time it takes to retrieve data. This involves using indexes (or, in columnar warehouses, sort and distribution keys), partitioning data, and avoiding unnecessary computations.

- Scalability Planning: Anticipating future growth and designing the system to easily scale up or down is essential for long-term success.

By focusing on these areas, administrators can ensure that the data warehouse remains performant and scalable, even as demands increase.
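The effect of an index on query planning is easy to observe with SQLite's `EXPLAIN QUERY PLAN`. SQLite is only a stand-in here: columnar warehouses such as Amazon Redshift do not use conventional secondary indexes and rely on mechanisms such as sort keys and distribution keys instead, but the principle of letting the planner skip irrelevant data is the same. The table and index names are hypothetical.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str) -> str:
    """Return the planner's description of how it will execute `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the detail text


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (sale_date, amount) VALUES (?, ?)",
    [(f"2024-01-{d:02d}", d * 10.0) for d in range(1, 29)],
)

query = "SELECT SUM(amount) FROM sales WHERE sale_date = '2024-01-15'"
before = plan(conn, query)  # without an index: a full scan of the table
conn.execute("CREATE INDEX idx_sales_date ON sales (sale_date)")
after = plan(conn, query)   # with the index: a search on sale_date
print(before)
print(after)
```

The exact wording of the plan text varies between SQLite versions, but the change from a scan to an index search is the point of comparison.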

Additionally, it’s important to monitor the system regularly and adjust configurations as needed. On the Databricks platform, features such as Delta Live Tables and Unity Catalog can aid in efficient data management. Collaboration between administrators, project managers, and users is also critical to optimizing performance.

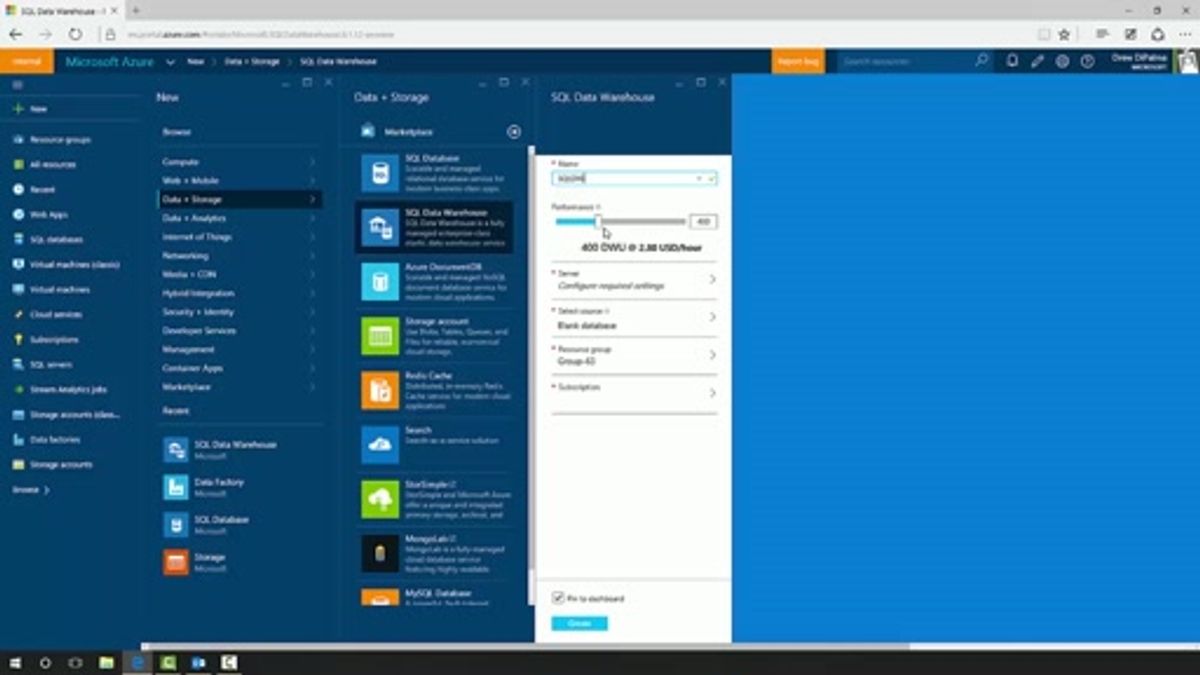

Implementing Data Warehouses with AWS

Overview of AWS Data Services

Amazon Web Services (AWS) provides a comprehensive suite of cloud-based data services that cater to various aspects of data warehousing. AWS’s global infrastructure ensures that data management and analytics can be performed at scale, offering a range of products from compute and storage to databases and analytics tools. Users can leverage these services to build robust, secure, and scalable data warehouses.

AWS’s data warehousing services are designed to be accessible to both novices and experienced professionals. For those new to AWS, the platform offers detailed documentation and tutorials to help users navigate the complexities of cloud data management. Experienced users can take advantage of AWS’s advanced features to optimize their data warehousing solutions for better performance and cost efficiency.

A practical starting point with these services includes:

- Exploring AWS services for data warehousing

- Managing IAM users and groups

- Working with Amazon S3 and understanding its storage classes

- Using Amazon Redshift for data warehousing workloads

AWS’s commitment to security is evident in its Identity and Access Management (IAM) service, which allows for granular control over resources and user permissions. By understanding and applying IAM policies and roles, users can ensure that their data warehouse is not only powerful but also secure.

Setting Up and Managing Amazon Redshift

Setting up and managing Amazon Redshift involves a series of steps that ensure a secure, efficient, and scalable data warehousing environment. Amazon Redshift provides a robust platform for large-scale data analysis and warehousing, with features that support complex queries and vast storage capabilities.

- Begin by configuring AWS Secrets Manager to store database credentials securely.

- Create an AWS Key Management Service (KMS) key for Redshift Serverless to encrypt data at rest.

- Set up IAM roles and permissions for Redshift Query Editor v2 to control who can access and query the warehouse.
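As a rough sketch of how these steps fit together with the AWS SDK for Python (boto3), the helper below assembles parameters for the `redshift-serverless` client's `create_namespace` and `create_workgroup` calls. Treat it as an outline under stated assumptions: the namespace name, workgroup name, KMS key ARN, and role ARN are placeholders; `manageAdminPassword=True` asks Redshift to keep the admin credential in AWS Secrets Manager; `iamRoles` lists roles the warehouse itself assumes (for example to load from S3), whereas Query Editor v2 access is granted separately through IAM policies on the users or roles who sign in. The AWS calls run only when `apply=True`, which requires boto3 and credentials. Check the current boto3 reference for the full parameter list before relying on it.

```python
def serverless_params(
    namespace: str,
    workgroup: str,
    kms_key_arn: str,
    data_access_role_arn: str,
    base_rpu: int = 8,
) -> tuple[dict, dict]:
    """Build request parameters for a namespace (storage) and a workgroup (compute)."""
    namespace_params = {
        "namespaceName": namespace,
        "adminUsername": "admin",             # placeholder admin user name
        "manageAdminPassword": True,          # admin password kept in Secrets Manager
        "kmsKeyId": kms_key_arn,              # customer-managed KMS key for data at rest
        "iamRoles": [data_access_role_arn],   # role Redshift assumes, e.g. to read S3
    }
    workgroup_params = {
        "workgroupName": workgroup,
        "namespaceName": namespace,
        "baseCapacity": base_rpu,             # Redshift Processing Units (RPUs)
        "publiclyAccessible": False,
    }
    return namespace_params, workgroup_params


def provision(apply: bool = False, **kwargs) -> tuple[dict, dict]:
    """Return the parameters; only call AWS when explicitly asked to."""
    ns, wg = serverless_params(**kwargs)
    if apply:
        import boto3  # imported lazily so the sketch runs without boto3 installed

        client = boto3.client("redshift-serverless")
        client.create_namespace(**ns)
        client.create_workgroup(**wg)
    return ns, wg
```

Keeping parameter construction separate from the API calls makes it easy to review, or version-control, exactly what will be provisioned before anything is created.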

The process of setting up Amazon Redshift is designed to be straightforward, allowing users to focus on extracting value from their data rather than on the complexities of data warehouse management.

Once configured, the management of Amazon Redshift includes monitoring performance, scaling resources as needed, and optimizing query execution. It’s crucial to regularly review and adjust configurations to align with changing data workloads and business requirements.

Security and Access Management in AWS

Ensuring the security and proper access management within AWS is critical for safeguarding data in the cloud. AWS provides a comprehensive suite of tools designed to address security, encryption, secrets management, auditing, privacy and compliance. One of the core services for managing access is AWS Identity and Access Management (IAM), which allows for granular control over who can do what within your AWS environment.

To effectively manage access, it’s essential to understand IAM users, groups, policies, and roles. Here’s a brief overview of each:

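- IAM Users: Identities representing a person or an application, with long-term credentials for the AWS Management Console or API.

- IAM Groups: Collections of IAM users; policies attached to a group apply to every member.

- IAM Policies: JSON documents that define permissions and can be attached to users, groups, or roles.

- IAM Roles: Identities that users, applications, or AWS services can assume to obtain temporary credentials, so permissions can be delegated without sharing long-term security credentials.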
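Policies and role trust relationships are plain JSON documents, so it helps to see their shape. The Python sketch below builds two of them: a permission policy granting read-only access to one S3 prefix, and a trust policy that lets the Amazon Redshift service (`redshift.amazonaws.com`) assume a role, so the warehouse can load from S3 without shared access keys. The bucket name and prefix are hypothetical, and some setups (Redshift Serverless, for example) may need additional service principals, so confirm against the Redshift documentation. The structure itself (`Version`, `Statement`, `Effect`, `Action`, `Resource`, `Principal`, `Condition`) follows the IAM policy grammar.

```python
import json


def s3_read_policy(bucket: str, prefix: str) -> dict:
    """Least-privilege permission policy: list and read a single prefix only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


def redshift_trust_policy() -> dict:
    """Trust policy: who may assume the role (here, the Redshift service)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "redshift.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(s3_read_policy("example-raw-data", "sales"), indent=2))
    print(json.dumps(redshift_trust_policy(), indent=2))
```

The permission policy answers "what can this role do", while the trust policy answers "who can become this role"; a role needs both.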

AWS also offers tools like AWS Control Tower for simplifying multi-account management, ensuring that security configurations are consistent across your organization’s AWS accounts. Additionally, the Security Analysis Tool can help evaluate and improve these configurations, tailoring security solutions to your organization’s specific needs.

In the context of data warehousing, robust security and access management are non-negotiable. A centralized Role-Based Access Control (RBAC) model, informed by data classification, can streamline access management while ensuring data security and compliance.
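A minimal sketch of a classification-driven RBAC model in Python: each dataset carries a classification label, each role is cleared up to a maximum level, and one central function makes every access decision. The levels, role names, and dataset names are hypothetical; in practice this logic would live in the warehouse's or data catalog's own grant system rather than in application code.

```python
from enum import IntEnum


class Classification(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # e.g. regulated personal data


# Central policy: the highest classification each role may read
ROLE_CLEARANCE = {
    "analyst": Classification.INTERNAL,
    "finance": Classification.CONFIDENTIAL,
    "privacy_officer": Classification.RESTRICTED,
}

# Catalog: every dataset is labeled once, when it is classified
DATASET_LABELS = {
    "web_traffic": Classification.PUBLIC,
    "sales_summary": Classification.INTERNAL,
    "payroll": Classification.CONFIDENTIAL,
    "customer_pii": Classification.RESTRICTED,
}


def can_read(roles: set[str], dataset: str) -> bool:
    """Allow access if any of the user's roles is cleared for the dataset's label."""
    label = DATASET_LABELS.get(dataset)
    if label is None:
        return False  # unclassified data is denied by default
    # Unknown roles get clearance -1, which is below every label
    return any(ROLE_CLEARANCE.get(r, -1) >= label for r in roles)
```

Because permissions are derived from labels rather than granted table by table, reclassifying a dataset changes who can see it in one place.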

Advanced Data Warehousing Concepts

ETL Processes and Data Transformation

ETL, an acronym for Extract, Transform, Load, is a cornerstone of data warehousing. It’s the structured process that involves the extraction of data from various sources, its transformation into a format suitable for analysis, and finally, the loading of this processed data into a data warehouse.

- Extract: The first step involves pulling data from heterogeneous sources.

- Transform: During this phase, data is cleansed, aggregated, and enriched to ensure its quality and usability.

- Load: The transformed data is then loaded into the data warehouse, ready for analysis.

ETL is not just about moving data; it’s about ensuring data quality and preparing it for insightful analysis.
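A compact ETL sketch in Python makes the three stages concrete. It extracts rows from a CSV export (an inline string stands in for a source system), transforms them by trimming and upper-casing country codes, parsing amounts, and dropping duplicates and unparseable rows, then loads the result into a SQLite table standing in for the warehouse. The column names and cleansing rules are hypothetical.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,country,amount
1, us ,19.99
2,DE,5.00
2,DE,5.00
3,fr,not-a-number
"""


def extract(text: str) -> list[dict]:
    """Extract: read raw rows from the source export."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple[int, str, float]]:
    """Transform: type-convert, normalize, and de-duplicate."""
    seen: set[int] = set()
    clean = []
    for row in rows:
        try:
            order_id = int(row["order_id"])
            amount = float(row["amount"])
        except (ValueError, TypeError):
            continue  # reject rows that fail type conversion
        if order_id in seen:
            continue  # de-duplicate on the business key
        seen.add(order_id)
        clean.append((order_id, row["country"].strip().upper(), round(amount, 2)))
    return clean


def load(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Load: write the cleaned batch into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    with conn:  # commit the batch as one transaction
        conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Using `INSERT OR REPLACE` keyed on `order_id` makes re-running the same batch idempotent, a property worth keeping in real pipelines that may retry after failures.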

Reverse ETL, a newer concept, works in the opposite direction: it syncs data from the data warehouse back into operational systems such as CRM or marketing tools, so teams can act on warehouse insights in near-real time inside the tools they already use. This process is crucial for businesses that aim to be data-driven and agile in their decision-making.
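A minimal reverse ETL sketch: compute a metric in the warehouse, then push only changed values to an operational tool. An in-memory SQLite database plays the warehouse and a plain dictionary plays the destination (for example, a CRM's customer records); a real sync would call that tool's API instead, usually on a schedule. All table, field, and function names are hypothetical.

```python
import sqlite3


def lifetime_value(warehouse: sqlite3.Connection) -> dict[str, float]:
    """Metric computed where the data lives: total spend per customer."""
    rows = warehouse.execute(
        "SELECT customer_email, SUM(amount) FROM orders GROUP BY customer_email"
    )
    return {email: round(total, 2) for email, total in rows}


def sync_to_crm(metrics: dict[str, float], crm: dict[str, dict]) -> int:
    """Upsert the metric into CRM records; return how many records changed."""
    changed = 0
    for email, ltv in metrics.items():
        record = crm.setdefault(email, {})  # create the record if it is missing
        if record.get("lifetime_value") != ltv:  # skip no-op writes
            record["lifetime_value"] = ltv
            changed += 1
    return changed


if __name__ == "__main__":
    wh = sqlite3.connect(":memory:")
    wh.execute("CREATE TABLE orders (customer_email TEXT, amount REAL)")
    wh.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("a@example.com", 40.0), ("a@example.com", 60.0), ("b@example.com", 15.0)],
    )
    crm = {"a@example.com": {"owner": "sales-team"}}
    print(sync_to_crm(lifetime_value(wh), crm))  # 2
    print(sync_to_crm(lifetime_value(wh), crm))  # 0, nothing changed
```

Skipping unchanged values matters in practice because operational tools often rate-limit their APIs.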

Data Governance and Compliance

Effective data governance is vital for maintaining the quality and security of data within an organization. It involves a set of standards and policies that ensure data is used appropriately and remains reliable. This is particularly important in light of stringent data privacy regulations like GDPR and CCPA, which demand rigorous compliance efforts.

Key to data governance is the establishment of common data definitions and formats, which promote consistency and facilitate data sharing across various business systems. Moreover, governance practices are not just about adhering to legal standards; they also support operational optimization and informed business decision-making.

Data governance should be seen as a strategic initiative, with clear business benefits guiding its implementation from the outset.

Below are some best practices for data governance:

- Establish clear policies and standards for data use.

- Develop a framework for data quality and integrity.

- Ensure regular training and awareness for all stakeholders.

- Implement robust access controls and security measures.

- Regularly review and update governance policies to stay compliant with evolving regulations.
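The practice of a framework for data quality and integrity can start as small as a set of declarative rules evaluated against each batch before it is published. This Python sketch, with hypothetical rule and column names, checks completeness, uniqueness, and allowed values, and reports every violation instead of failing silently.

```python
from typing import Callable, Iterable

Row = dict
Rule = Callable[[list[Row]], list[str]]


def not_null(column: str) -> Rule:
    def check(rows: list[Row]) -> list[str]:
        return [f"row {i}: {column} is null"
                for i, r in enumerate(rows) if r.get(column) is None]
    return check


def unique(column: str) -> Rule:
    def check(rows: list[Row]) -> list[str]:
        seen, errors = set(), []
        for i, r in enumerate(rows):
            value = r.get(column)
            if value in seen:
                errors.append(f"row {i}: duplicate {column}={value!r}")
            seen.add(value)
        return errors
    return check


def allowed_values(column: str, allowed: set) -> Rule:
    def check(rows: list[Row]) -> list[str]:
        return [f"row {i}: {column}={r.get(column)!r} not allowed"
                for i, r in enumerate(rows) if r.get(column) not in allowed]
    return check


def run_checks(rows: list[Row], rules: Iterable[Rule]) -> list[str]:
    """Evaluate every rule and collect all violations."""
    return [msg for rule in rules for msg in rule(rows)]


if __name__ == "__main__":
    batch = [{"id": 1, "status": "active"}, {"id": 1, "status": "deleted"}]
    rules = [not_null("id"), unique("id"), allowed_values("status", {"active", "inactive"})]
    for violation in run_checks(batch, rules):
        print(violation)
```

Keeping the rules as data makes them easy to review alongside the governance policies they enforce.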

Monitoring and Performance Tuning

Effective monitoring and performance tuning are crucial for maintaining the efficiency and reliability of a data warehouse. By regularly assessing system performance and identifying bottlenecks, administrators can implement targeted improvements to enhance overall functionality.

- Configure buffer pools and caches: Proper configuration can significantly improve database performance, especially in high-traffic scenarios.

- Utilize advanced monitoring tools: Tools like New Relic provide in-depth insights into system performance, aiding in the detection and resolution of issues.

- Database tuning techniques: Regular tuning of the database can lead to optimized query performance and resource utilization.

Collaboration between administrators, project managers, and users is critical to the success of performance tuning efforts. Understanding the impact of workload concurrency and monitoring individual queries can lead to substantial improvements in data processing efficiency.
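As a small illustration of monitoring individual queries, the sketch below takes query-duration records (the kind many warehouses expose through system tables or monitoring tools; the record format here is hypothetical), computes a 95th-percentile baseline with `statistics.quantiles`, and flags queries above it together with how many queries were running when each one started. The percentile threshold is illustrative, not a recommendation.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class QueryRecord:
    query_id: str
    start: float     # seconds since an arbitrary reference point
    duration: float  # seconds


def concurrency_at(records: list[QueryRecord], t: float) -> int:
    """Number of queries running at time t (including any that start at t)."""
    return sum(1 for r in records if r.start <= t < r.start + r.duration)


def slow_queries(records: list[QueryRecord]) -> list[tuple[str, float, int]]:
    """Flag queries slower than the 95th percentile of observed durations."""
    durations = [r.duration for r in records]  # needs at least two records
    p95 = quantiles(durations, n=20)[-1]  # last of 19 cut points = 95th percentile
    return [
        (r.query_id, r.duration, concurrency_at(records, r.start))
        for r in records
        if r.duration > p95
    ]


if __name__ == "__main__":
    log = [QueryRecord(f"q{i}", float(i), 1.0) for i in range(19)]
    log.append(QueryRecord("q_slow", 10.0, 30.0))
    for query_id, duration, running in slow_queries(log):
        print(f"{query_id}: {duration:.1f}s with {running} concurrent queries")
```

Pairing each slow query with its concurrency helps separate queries that are inherently expensive from those that were slowed by contention.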

Understanding the administration and optimization of resources is not just a technical challenge but also a strategic one. It involves aligning performance goals with business objectives, ensuring that the data warehouse can support decision-making processes effectively.

Real-World Applications and Case Studies

Case Studies: Success Stories of Data Warehousing

The evolution of data warehousing has been marked by the integration of traditional BI workflows with modern data lakes, leading to the emergence of the lakehouse architecture. Case studies across various industries have demonstrated the efficacy of this approach, highlighting its role in simplifying architectures and reducing costs. For instance, the use of Databricks SQL has enabled data engineers to create flexible, high-performance multi-cloud architectures that are both cost-effective and scalable.

The transition to a lakehouse architecture is not just a technical upgrade but a strategic move that aligns with the growing complexity of data and the need for agile, insight-driven decision-making.

Several organizations have documented their journey from fragmented data management to strategic data enablement. These narratives often share a common theme: the transformative impact of a unified data warehousing solution on business intelligence and analytics. Below is a list of key outcomes reported by enterprises that have successfully implemented data warehousing solutions:

- Enhanced data governance and compliance

- Streamlined ETL processes and data transformation

- Improved monitoring and performance tuning

- Increased agility in deriving actionable insights from raw data

These success stories serve as a testament to the indispensable role of data warehousing in the modern data landscape, providing a practical guide for organizations looking to harness the full potential of their data assets.

Data Warehousing in Different Industries

Data warehousing has become a cornerstone across various industries, enabling organizations to harness the power of their data for strategic decision-making. The adaptability of data warehousing solutions allows for tailored applications that meet the unique needs of each sector. For instance, in healthcare, data warehouses are instrumental in patient data management and in improving treatment outcomes. In retail, they facilitate enhanced customer segmentation and personalized marketing strategies.

- Healthcare: Improved patient data management and treatment outcomes

- Retail: Enhanced customer segmentation and personalized marketing

- Finance: Risk assessment and fraud detection

- Manufacturing: Supply chain optimization and quality control

The integration of data warehousing with other data management systems, such as data lakes, has led to the emergence of the lakehouse architecture. This hybrid model combines the structured storage and processing capabilities of data warehouses with the vast data handling capacity of data lakes.

The versatility of data warehousing is further exemplified by case studies that showcase its effectiveness in improving customer service and operational efficiency. Data mining, which typically runs on the integrated, historical data a warehouse provides, plays a crucial role in extracting accurate customer insights to offer better service. As industries continue to evolve, the role of data warehousing in driving innovation and competitive advantage becomes increasingly significant.

Future Trends in Data Warehousing and Analytics

As the landscape of data management continues to evolve, data warehousing is becoming increasingly integral to the field of data engineering. The emergence of the lakehouse architecture represents a significant trend, blending the scalability of data lakes with the management features of traditional data warehouses. This hybrid model is gaining traction for its ability to streamline workflows and reduce operational costs.

The role of machine learning and AI in data warehousing is also expanding, with automation playing a key role in data transformation and integration. These advancements are not only enhancing the efficiency of data processes but are also paving the way for more sophisticated analytics and insights.

The future of data warehousing is characterized by a continuous integration of new technologies and methodologies aimed at optimizing data utility and business intelligence.

With the increasing complexity of data, resources such as the ‘Big Book of Data Warehousing and BI’ and platforms like TDWI offer valuable guidance on navigating the evolving data landscape. They provide practical examples, best practices, and case studies that are essential for professionals looking to stay ahead in the field.

Conclusion

In the journey to mastering data management, data warehousing stands as a pivotal component, integrating diverse data sources into a centralized repository for advanced analytics and business intelligence. This article has explored the essentials of data warehousing, from the foundational architecture to practical implementation in environments like Amazon Redshift. We’ve seen how the lakehouse architecture is reshaping the field by unifying data lakes and warehouses, thereby simplifying data strategies and reducing costs. As we move forward in an era where data is the lifeblood of decision-making, the insights and skills gained from understanding data warehousing are invaluable. Whether you’re a data professional, project manager, or business analyst, knowing how to efficiently collect, manage, and analyze data will contribute significantly to optimizing business operations and creating actionable insights.

Frequently Asked Questions

What is data warehousing and why is it important?

Data warehousing is the process of collecting, storing, and managing large volumes of data from various sources in a centralized repository. Its importance lies in its ability to enable efficient data analysis, business intelligence, and decision making by providing a consolidated view of enterprise data.

What are the key components of a data warehouse?

The key components of a data warehouse include a database to store data, ETL (Extract, Transform, Load) tools for data processing, metadata management for data definition and tracking, and front-end tools for querying and reporting.

How do data warehousing and business intelligence relate?

Data warehousing provides the foundational infrastructure for business intelligence (BI) by organizing and storing data in a way that is accessible and manageable. BI tools leverage this data to generate insights, reports, and analytics that support business decisions.

What is Amazon Redshift and how is it used in data warehousing?

Amazon Redshift is a fully managed, petabyte-scale data warehouse service provided by AWS. It allows users to efficiently store and analyze large volumes of data using SQL-based tools and integrates with various AWS services for data management and analytics.

What are the benefits of using a lakehouse architecture for data warehousing?

A lakehouse architecture combines elements of data lakes and data warehouses, offering the scalability and flexibility of a data lake with the management and structured query capabilities of a data warehouse. This architecture simplifies data management and can reduce costs.

How do ETL processes contribute to data warehousing?

ETL processes are critical to data warehousing as they involve extracting data from various sources, transforming it into a format suitable for analysis, and loading it into the data warehouse. These processes ensure data is accurate, consistent, and ready for analysis.