
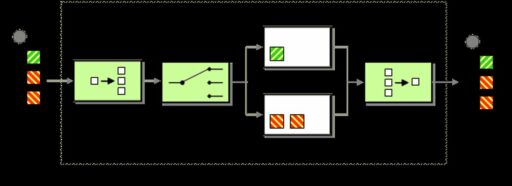

Data mapping is the process of matching data elements in a source system to their counterparts in a target system, so that data moved from the source to the target is translated correctly. Mastering data mapping techniques is essential for data analysts, data scientists, and IT professionals who work with data integration and transformation. In this article, we explore five essential techniques for mastering data mapping, which are fundamental to preparing data for insightful analysis and decision-making.

Key Takeaways

- Data Smoothing helps in reducing noise and anomalies in data, leading to more accurate representations for analysis.

- Attribute Construction involves creating new attributes or features from existing ones to provide additional insights into the data set.

- Data Generalization abstracts data by replacing low-level details with high-level concepts, making it easier to understand and work with.

- Data Aggregation is the process of summarizing and combining data to view it in a simpler form, often for statistical analysis.

- Data Normalization standardizes data formats and scales, making different data sets comparable and combinable.

1. Data Smoothing

Data smoothing is a critical preprocessing step in data analysis, where algorithms are applied to reduce noise and enhance the visibility of trends in a dataset. Noise can obscure valuable insights and patterns, making it essential to apply smoothing techniques for clearer data interpretation.

The three primary algorithm types used for data smoothing include:

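- Clustering: Groups similar values into clusters; values that fall outside any cluster can be flagged as outliers.

- Binning: Sorts values and divides them into bins, then replaces each value with a bin statistic such as the bin mean, median, or boundary.

- Regression: Fits a function (for example, a straight line) that relates one attribute to another, then replaces noisy values with the values the function predicts.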
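To make binning concrete, here is a minimal Python sketch of equal-frequency binning with smoothing by bin means. The function name `smooth_by_bin_means`, the bin size, and the sample prices are illustrative assumptions, not part of any particular library.

```python
from statistics import mean


def smooth_by_bin_means(values: list[float], bin_size: int) -> list[float]:
    """Smooth values by equal-frequency binning, replacing each value with its bin mean.

    Values are assigned to bins in sorted order, but the result keeps the
    original positions of the input values.
    """
    if bin_size < 1:
        raise ValueError("bin_size must be at least 1")
    # Indices of the input values, ordered by value.
    order = sorted(range(len(values)), key=lambda i: values[i])
    smoothed = [0.0] * len(values)
    for start in range(0, len(order), bin_size):
        bin_indices = order[start:start + bin_size]
        bin_mean = float(mean(values[i] for i in bin_indices))
        for i in bin_indices:
            smoothed[i] = bin_mean
    return smoothed


prices = [4, 8, 15, 21, 21, 24, 25, 28, 34]  # hypothetical sample data
print(smooth_by_bin_means(prices, bin_size=3))
# [9.0, 9.0, 9.0, 22.0, 22.0, 22.0, 29.0, 29.0, 29.0]
```

Swapping `mean` for `statistics.median` gives smoothing by bin medians, another common variant.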

By effectively applying data smoothing techniques, analysts can mitigate the adverse effects of noise and achieve a more accurate representation of the underlying data trends.

Understanding your data is crucial for selecting the right algorithm, because each technique suits different data challenges. For instance, binning works well on a single sorted numeric attribute, regression suits attributes that have a predictable relationship with another attribute, and clustering is useful for spotting outliers across several attributes at once.

2. Attribute Construction

Attribute construction is a critical step in data mapping that involves defining relevant attributes for data elements, including new attributes derived from existing ones, and assigning them consistently. This process ensures that each data point is accurately represented and categorized, facilitating better analysis and decision-making.

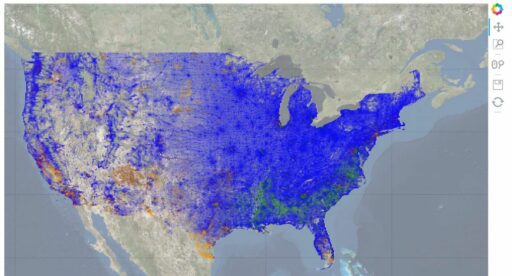

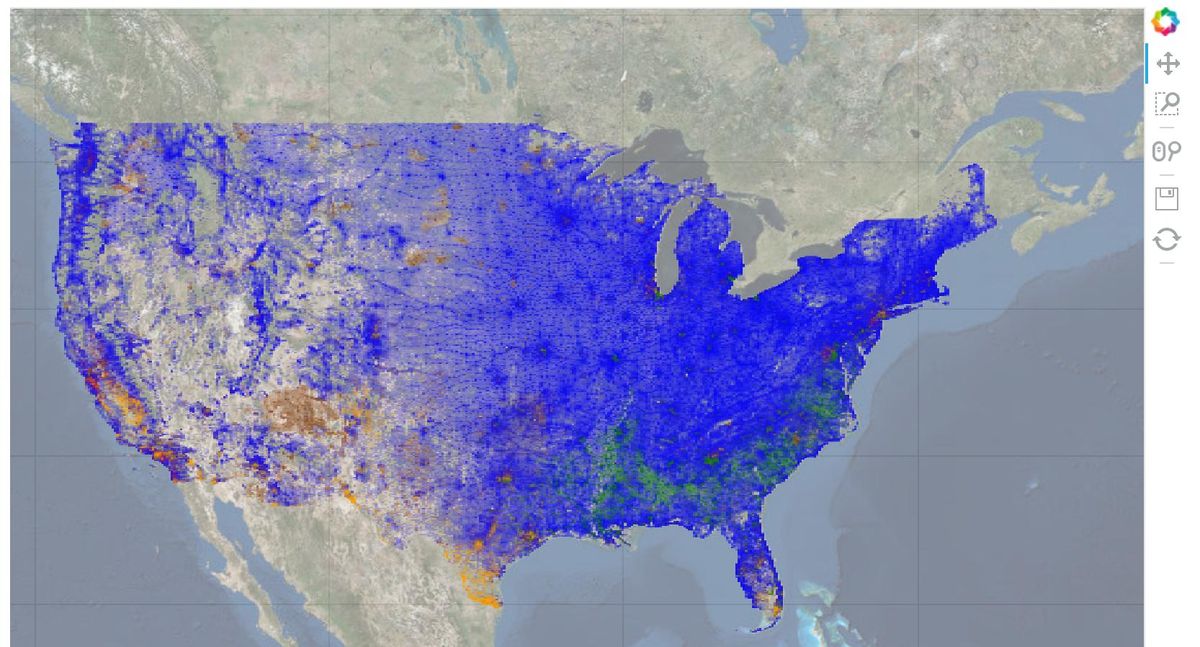

- Detail and Specificity: Attributes must be detailed enough to match the purpose of the analysis, such as distinguishing between different types of urban land uses.

- Contextual Understanding: It’s important to recognize that the same attribute, like ‘forest’, can mean different vegetation types in different regions, affecting the accuracy of the data mapping.

- Adaptability: As remote sensing technology advances, attribution techniques must also adapt to accurately reflect new data types and features.

Attribute construction not only enhances the precision of data representation but also plays a vital role in later stages of data analysis, such as feature engineering and model training.
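In the data-mining sense, attribute construction means deriving new attributes from existing ones. The minimal Python sketch below assumes hypothetical land-parcel records with `width` and `height` fields and derives an `area` attribute from them; the helper name `add_area_attribute` is also illustrative.

```python
from typing import Any


def add_area_attribute(records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return copies of the records with a new 'area' attribute derived from 'width' and 'height'."""
    # Build new dicts so the source records are left unchanged.
    return [{**record, "area": record["width"] * record["height"]} for record in records]


parcels = [  # hypothetical land parcels, dimensions in metres
    {"parcel_id": "P1", "width": 20.0, "height": 30.0},
    {"parcel_id": "P2", "width": 12.5, "height": 40.0},
]
for row in add_area_attribute(parcels):
    print(row["parcel_id"], row["area"])
# P1 600.0
# P2 500.0
```

Returning copies rather than mutating the input keeps the original mapped data intact, which makes it easier to compare the source and derived attributes.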

3. Data Generalization

Data Generalization is a crucial technique in the realm of data mapping, where low-level attributes are transformed into high-level categories. This process simplifies complex data sets by abstracting details and focusing on broader patterns, making it easier to understand and analyze the data.

For instance, consider a data set that includes specific dates of customer purchases. Data generalization might involve grouping these dates into months or quarters, providing a more manageable overview of purchasing trends over time. Here’s how the data might look before and after generalization:

| Original Data | Generalized Data |
|---|---|
| 2023-01-15 | January 2023 |
| 2023-01-20 | January 2023 |
| 2023-02-05 | February 2023 |

By abstracting data to a higher level, we can reveal patterns that are not apparent when examining individual records.
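A minimal Python sketch of this generalization maps each purchase date to a month label, as in the table, and to a calendar quarter. The function names are hypothetical, and the month name relies on `strftime("%B")`, which is locale-dependent (English in Python's default C locale).

```python
from datetime import date


def generalize_to_month(day: date) -> str:
    """Replace a specific date with its month and year, e.g. 'January 2023'."""
    # %B gives the full month name; it is locale-dependent (English in the default C locale).
    return day.strftime("%B %Y")


def generalize_to_quarter(day: date) -> str:
    """Replace a specific date with its calendar quarter, e.g. 'Q1 2023'."""
    return f"Q{(day.month - 1) // 3 + 1} {day.year}"


purchases = ["2023-01-15", "2023-01-20", "2023-02-05"]
for iso in purchases:
    day = date.fromisoformat(iso)
    print(iso, "->", generalize_to_month(day), "/", generalize_to_quarter(day))
# 2023-01-15 -> January 2023 / Q1 2023
# 2023-01-20 -> January 2023 / Q1 2023
# 2023-02-05 -> February 2023 / Q1 2023
```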

It’s important to maintain consistency in the level of detail throughout the data set. Inconsistent generalization can lead to confusion and undermine the reliability of the data. Effective generalization hinges on sound judgment and a thorough understanding of the data’s context.

4. Data Aggregation

Data aggregation is a pivotal technique in data transformation, often used to store and present data in a summarized format. It is particularly useful for statistical analysis, where data may be aggregated over specific time periods to provide key metrics such as average, sum, minimum, and maximum.
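As a minimal sketch of aggregation over time periods, the Python below groups hypothetical daily sales totals by calendar month and reports the sum, average, minimum, and maximum for each month. The function name `aggregate_by_month` and the sample data are assumptions for illustration.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def aggregate_by_month(readings: list[tuple[date, float]]) -> dict[str, dict[str, float]]:
    """Summarize (date, value) pairs per calendar month with sum, average, minimum, and maximum."""
    groups: dict[str, list[float]] = defaultdict(list)
    for day, value in readings:
        groups[f"{day.year}-{day.month:02d}"].append(value)
    # Sort by month key so the summary is in chronological order.
    return {
        month: {"sum": sum(vals), "avg": mean(vals), "min": min(vals), "max": max(vals)}
        for month, vals in sorted(groups.items())
    }


daily_sales = [  # hypothetical daily sales totals
    (date(2023, 1, 15), 120.0),
    (date(2023, 1, 20), 80.0),
    (date(2023, 2, 5), 200.0),
    (date(2023, 2, 17), 100.0),
]
for month, stats in aggregate_by_month(daily_sales).items():
    print(month, stats)
# 2023-01 {'sum': 200.0, 'avg': 100.0, 'min': 80.0, 'max': 120.0}
# 2023-02 {'sum': 300.0, 'avg': 150.0, 'min': 100.0, 'max': 200.0}
```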

For instance, consider a dataset with the following attributes:

- City

- Street

- Country

- State/province

By defining a hierarchy (street < city < state/province < country), we can aggregate data at various levels to gain insights appropriate to different contexts.
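The hierarchy above can be sketched as a roll-up in Python: choosing a level groups rows by that level plus every coarser level and totals a numeric measure. The field names (`street`, `city`, `state_province`, `country`, `amount`), the `roll_up` helper, and the sales figures are hypothetical.

```python
from collections import Counter

# Location hierarchy from finest to coarsest: street < city < state/province < country.
LEVELS = ["street", "city", "state_province", "country"]


def roll_up(rows: list[dict], level: str) -> dict[tuple[str, ...], float]:
    """Total 'amount' at the given level of the location hierarchy.

    The group key includes every coarser level as well, so two cities with the
    same name in different states are not merged.
    """
    keys = LEVELS[LEVELS.index(level):]
    totals: Counter = Counter()
    for row in rows:
        totals[tuple(row[k] for k in keys)] += row["amount"]
    return dict(totals)


sales = [  # hypothetical sales records
    {"street": "Main St", "city": "Springfield", "state_province": "IL", "country": "US", "amount": 100},
    {"street": "Oak Ave", "city": "Springfield", "state_province": "IL", "country": "US", "amount": 50},
    {"street": "Main St", "city": "Chicago", "state_province": "IL", "country": "US", "amount": 200},
    {"street": "Elm St", "city": "Springfield", "state_province": "MO", "country": "US", "amount": 70},
    {"street": "King St", "city": "Toronto", "state_province": "ON", "country": "CA", "amount": 90},
]
print(roll_up(sales, "state_province"))  # {('IL', 'US'): 350, ('MO', 'US'): 70, ('ON', 'CA'): 90}
print(roll_up(sales, "country"))  # {('US',): 420, ('CA',): 90}
```

Keeping the coarser levels in the key is a design choice: grouping by city name alone would silently merge Springfield, IL with Springfield, MO.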

Aggregated data not only simplifies complex datasets but also aids in revealing trends and patterns that might not be apparent in raw data.

When aggregating data, it’s crucial to ensure that the process aligns with the intended analysis goals. Improper aggregation can lead to misleading conclusions, hence the need for a thoughtful approach to this technique.

5. Data Normalization

Data normalization is a pivotal step in data preprocessing that scales numeric attributes to a common, smaller range (for example, 0 to 1) so that attributes measured in different units can be compared and algorithms that are sensitive to scale, such as distance-based methods, work more efficiently. The same term is used in database design for a related but distinct goal: structuring tables to minimize duplication and preserve data integrity, which also helps keep databases consistent and performant.

There are several methods to normalize data, each with its specific use case. For instance:

- Min-max normalization

- Z-score normalization

- Decimal scaling
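The three methods listed above can be sketched in a few lines of Python. This is a minimal illustration, not a library API: the function names are hypothetical, z-score normalization here uses the population standard deviation (`statistics.pstdev`), though the sample standard deviation is also common, and the sample values are illustrative.

```python
from statistics import mean, pstdev


def min_max_normalize(values: list[float], new_min: float = 0.0, new_max: float = 1.0) -> list[float]:
    """Linearly map values from [min, max] onto [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("min-max normalization needs at least two distinct values")
    return [(v - lo) / (hi - lo) * (new_max - new_min) + new_min for v in values]


def z_score_normalize(values: list[float]) -> list[float]:
    """Center values on the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("z-score normalization needs non-constant values")
    return [(v - mu) / sigma for v in values]


def decimal_scale(values: list[float]) -> list[float]:
    """Divide by 10**j, where j is the smallest integer making every |v| / 10**j < 1."""
    max_abs = max(abs(v) for v in values)
    j = 0
    while max_abs / 10 ** j >= 1:
        j += 1
    return [v / 10 ** j for v in values]


print([round(x, 3) for x in min_max_normalize([12000, 73600, 98000])])  # [0.0, 0.716, 1.0]
print(z_score_normalize([2, 4, 4, 4, 5, 5, 7, 9]))  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
print(decimal_scale([-986, 917]))  # [-0.986, 0.917]
```

The guards reject constant inputs, where min-max and z-score normalization would otherwise divide by zero.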

Normalizing to a common scale keeps attributes with large ranges (such as annual income) from dominating attributes with small ranges (such as age), which prevents skewed results and enables more accurate data analysis.

In the database-design sense, normalization aims to reduce redundancy and organize data around its dependencies, and it requires balance. Over-normalization can split data across so many tables that queries need costly joins, while under-normalization leaves redundant copies that can drift out of sync. Apply the level of normalization that fits your data set and workload.

Conclusion

In conclusion, mastering data mapping is a multifaceted skill that requires a solid understanding of data transformation techniques. This article has explored five essential ones: data smoothing, attribute construction, data generalization, data aggregation, and data normalization. Each offers unique benefits and challenges, so evaluate your data and project goals before selecting the most appropriate methods. The right combination of these techniques can significantly improve the quality of your analysis and the clarity of your data visualizations. As the field of data mapping continues to evolve, staying informed about the latest tools and practices will keep your data mapping skills sharp and effective.

Frequently Asked Questions

What are the 6 basic data transformation techniques?

The 6 basic data transformation techniques are Data Smoothing, Attribute Construction, Data Generalization, Data Aggregation, Data Discretization, and Data Normalization.

How do I choose the best data transformation technique for my project?

To choose the best data transformation technique, evaluate what your raw dataset requires and what each technique can offer. Consider the goals of your analysis and the nature of your data to determine the most suitable method.

What is the difference between data cleansing and data transformation?

Data cleansing involves correcting or removing erroneous, incomplete, or irrelevant data, while data transformation involves converting data from one format or structure to another to make it more suitable for analysis.

Are there any other ways to transform data besides the standard techniques?

Yes, in addition to the standard techniques, other methods such as Data Integration, Data Blending, and Data Manipulation can also be used to transform data for analysis.

What is the role of normalization in data mapping?

Normalization rescales attributes whose differing ranges would otherwise skew the data and make it difficult to extract valuable insights. It brings values to a common scale without distorting differences in the ranges of values.

What are the final steps in map preparation?

The final steps in map preparation include fair drawing by a skilled cartographer, reproduction through creating negatives from the originals, and possibly separating into several drawings for multicoloured maps.