In the ever-evolving world of Big Data, data modeling stands as a critical process for structuring and understanding complex datasets. This essential guide to data models provides a deep dive into the principles, techniques, and strategies that form the backbone of effective data management. Whether you’re a beginner or a seasoned data professional, this guide will equip you with the knowledge to unlock the potential of your data, drive insightful analytics, and make informed decisions.

Key Takeaways

- Data modeling is a foundational skill for data professionals, crucial for organizing data and extracting meaningful insights.

- A strong understanding of data modeling basics paves the way for advanced analytics and efficient data management strategies.

- Effective data modeling techniques can significantly enhance business efficiency by revealing hidden patterns and trends.

- The synergy between data modeling and analytics leads to better decision-making and a competitive edge in the marketplace.

- As data volumes grow, mastering data modeling and transformation techniques is essential for unlocking the full potential of Big Data.

Essential Principles of Data Modeling: Building a Strong Foundation

Understanding the Key Concepts of Data Modeling

Data modeling is the cornerstone of effective data management, serving as a blueprint for how data is structured and utilized. It is the process of creating a visual representation of data to define its structure, relationships, and constraints. The primary goal is to mirror real-world entities and their interactions, thereby simplifying data management, analysis, and usage.

There are three main types of data models that reflect different levels of abstraction:

- Conceptual Data Model: High-level overview focusing on entities and their relationships.

- Logical Data Model: Details data elements and relationships without technical specifics.

- Physical Data Model: Specifies the actual storage details, including table structures and data types.

Data modeling is not just a technical necessity but a strategic tool that enables organizations to navigate the complexities of Big Data. By fostering a clear understanding of data structures, it lays the groundwork for data engineering excellence, ensuring quality, scalability, and agility in a data-driven world.

Key components of data modeling include entities, attributes, and relationships. Entities represent real-world objects or concepts, such as customers or products. Attributes are the properties or details of these entities, and relationships define how entities are connected to one another. Understanding these components is essential for developing a robust data model that can adapt to the evolving needs of a business.
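As a rough illustration of these three components, the sketch below expresses entities as Python dataclasses, attributes as their fields, and relationships as references between them. The entity and field names (`Customer`, `Product`, `Order`, `OrderLine`, `sku`, and so on) are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass, field
from typing import List


# All class and field names below are illustrative assumptions.
@dataclass
class Customer:
    # Entity: a real-world object the business cares about.
    customer_id: int  # attribute that identifies the customer
    name: str         # descriptive attribute
    email: str


@dataclass
class Product:
    sku: str
    name: str
    unit_price: float


@dataclass
class OrderLine:
    product: Product  # relationship: each line refers to exactly one product
    quantity: int


@dataclass
class Order:
    order_id: int
    customer: Customer  # relationship: many orders belong to one customer
    lines: List[OrderLine] = field(default_factory=list)  # one order has many lines

    def total(self) -> float:
        # Derived value computed by following the Order -> OrderLine -> Product relationships.
        return sum(line.product.unit_price * line.quantity for line in self.lines)


alice = Customer(1, "Alice", "alice@example.com")
pen = Product("PEN-1", "Pen", 1.5)
order = Order(100, alice, [OrderLine(pen, 4)])
print(order.customer.name, order.total())
```

Following a relationship is just attribute access here (`order.customer`), which is the same navigation a join performs once the model is implemented in a database.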

The Architecture of Data Modeling: Understanding the Building Blocks

At the heart of data modeling lies the architecture that holds it all together. Understanding the components that form the architecture is essential for anyone looking to master data management. The architecture of data modeling is typically divided into three key types of models: Conceptual, Logical, and Physical.

- Conceptual Data Model: This high-level model outlines the system’s scope, often using business terms. It focuses on the organization of data without getting into the details of implementation.

- Logical Data Model: This model provides a more detailed view, showing the structure of data without being tied to any specific technology or data storage system.

- Physical Data Model: This specifies how data will be stored in a particular database or storage system, including details like table structures, data types, and indexes.

Each of these models serves a unique purpose and is used at different stages of the data modeling process. Together, they provide a comprehensive framework for understanding and working with data.

The key to effective data modeling is not just in knowing the different types of models but in understanding how they interconnect to reflect the real-world scenarios they are designed to represent.

Data Modeling Basics: A Comprehensive Guide for Beginners

Data modeling is the process of defining how data will be structured, related, and stored in a database. The resulting model allows for the efficient organization, storage, and retrieval of data. Understanding its key concepts is crucial for anyone looking to master data management.

When beginning with data modeling, it’s important to familiarize oneself with the various types of data models:

- Conceptual Data Models: High-level, business-focused models that outline entities and relationships.

- Logical Data Models: Define the structure of the data elements and set the relationships between them.

- Physical Data Models: Specify how the model will be implemented within the database system.

By starting with a conceptual model and progressively refining it into a logical and then a physical model, you can ensure that your data architecture is both robust and adaptable to change.
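The refinement from conceptual to logical to physical can be sketched with one small example carried through all three levels. This is a minimal illustration, assuming a hypothetical Customer/Order domain and SQLite (via Python's standard `sqlite3` module) as the target engine. The dictionary layouts used for the conceptual and logical levels are ad hoc, not a standard notation.

```python
import sqlite3

# Conceptual level: entities and relationships only, in business terms.
conceptual = {
    "entities": ["Customer", "Order"],
    "relationships": [("Customer", "places", "Order")],
}

# Logical level: attributes and keys, still independent of any database engine.
logical = {
    "Customer": {"attributes": ["customer_id", "name", "email"], "key": "customer_id"},
    "Order": {
        "attributes": ["order_id", "customer_id", "placed_on"],
        "key": "order_id",
        "references": {"customer_id": "Customer"},
    },
}

# Physical level: concrete tables, data types, constraints, and an index for SQLite.
physical_ddl = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT UNIQUE
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    placed_on   TEXT NOT NULL
);
CREATE INDEX idx_order_customer ON customer_order(customer_id);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(physical_ddl)

# List the tables that the physical model actually created.
tables = sorted(
    row[0] for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
)
print(tables)
```

Only the last step mentions types and indexes, which is why the first two levels can be reviewed with business stakeholders before an engine is chosen.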

Data modeling tools and methodologies vary, but they all aim to simplify the process of defining and managing data requirements. The right tools can empower your data management strategy, leading to better data quality and more insightful analytics. As you delve into data modeling, remember that it’s not just about the technical aspects; it’s also about understanding how data flows within your organization and how it can be leveraged for business intelligence.

The Synergy Between Data Modeling and Analytics: A Winning Combination

Leveraging Data Modeling Analytics: Maximizing the Value of Your Data

To truly maximize the value of your data, it’s imperative to leverage data modeling analytics effectively. Optimizing data models is not just about organization; it’s about unlocking powerful insights that drive decision-making. By understanding the intricacies of tables, relationships, cardinality, and cross-filter direction, you can build robust models that serve as the backbone for advanced analytics.

The synergy between data modeling and analytics is the cornerstone of a data-driven strategy. It transforms raw data into a structured format that analytics can exploit to reveal hidden patterns and trends.

Here are some emerging trends in data modeling analytics:

- AI-Driven Data Modeling: Utilizing AI and machine learning for automated data modeling processes.

- Graph Data Modeling: Employing graph databases to handle complex relationships in large datasets.

- Streaming Data Modeling: Developing models capable of processing continuous data streams.

- Explainable AI in Data Modeling: Striving for transparency in AI-generated data models.

These trends highlight the evolving nature of data modeling analytics and its critical role in enhancing business efficiency. By staying abreast of these developments, organizations can ensure they are not just collecting data, but transforming it into a strategic asset.

Data Modeling Analysis: Unveiling Insights Hidden in Your Data

Data modeling analysis is the cornerstone of uncovering the valuable insights that lie dormant within your datasets. By conceptualizing and visualizing data objects and their interrelationships, we can create a simplified, logical database structure that is primed for analysis.

The true power of data modeling lies in its ability to transform raw data into a structured form that analytics tools can easily interpret.

Understanding the key components of your data model is crucial. It involves recognizing tables, relationships, cardinality, and the direction of cross-filtering. These elements work in synergy to build a robust model that not only represents the data accurately but also enhances performance and user experience.
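Cardinality can be checked directly from the data. The sketch below classifies a relationship by testing whether the key values on each side are unique. The function name `cardinality` and the sample columns are hypothetical, and this is a simplified check, not the detection logic of any particular BI tool.

```python
from collections import Counter


def cardinality(left_keys, right_keys):
    """Classify a relationship between two key columns as one/many on each side."""

    def is_unique(values):
        # A side is "one" when every key value appears exactly once.
        return all(count == 1 for count in Counter(values).values())

    left_side = "one" if is_unique(left_keys) else "many"
    right_side = "one" if is_unique(right_keys) else "many"
    return f"{left_side}-to-{right_side}"


# Hypothetical sample data: a customer table key and the matching foreign key in orders.
customer_ids = [1, 2, 3]
order_customer_ids = [1, 1, 2, 3, 3]

print(cardinality(customer_ids, order_customer_ids))
```

In a one-to-many relationship like this one, filters typically flow from the "one" side (customers) to the "many" side (orders), which is the usual meaning of a single cross-filter direction.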

Here are some advanced techniques that are shaping the future of data modeling analysis:

- AI-Driven Data Modeling: Utilizing AI and ML for automated data modeling processes.

- Graph Data Modeling: Employing graph databases to model complex relationships in Big Data.

- Streaming Data Modeling: Developing models capable of handling continuous data streams.

- Explainable AI in Data Modeling: Ensuring AI-driven models are transparent and interpretable.
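To make the graph item in the list above more concrete, here is a minimal in-memory sketch of a graph model: nodes carry properties, and edges carry a relationship name. The `PropertyGraph` class and the sample nodes are assumptions for illustration. A real graph database would provide its own storage and query language.

```python
from collections import defaultdict, deque


class PropertyGraph:
    """Tiny illustrative graph model; not a substitute for a graph database."""

    def __init__(self):
        self.nodes = {}                 # node id -> dict of properties
        self.edges = defaultdict(list)  # node id -> list of (relation, target id)

    def add_node(self, node_id, **props):
        self.nodes[node_id] = props

    def add_edge(self, source, relation, target):
        self.edges[source].append((relation, target))

    def reachable(self, start, max_hops):
        """Return the ids reachable from start within max_hops edges (breadth-first)."""
        seen = {start}
        queue = deque([(start, 0)])
        while queue:
            node, hops = queue.popleft()
            if hops == max_hops:
                continue  # do not expand beyond the hop limit
            for _relation, target in self.edges[node]:
                if target not in seen:
                    seen.add(target)
                    queue.append((target, hops + 1))
        seen.discard(start)
        return seen


graph = PropertyGraph()
graph.add_node("alice", kind="customer")
graph.add_node("pen", kind="product")
graph.add_node("acme", kind="supplier")
graph.add_edge("alice", "BOUGHT", "pen")
graph.add_edge("pen", "MADE_BY", "acme")

print(graph.reachable("alice", 2))
```

Multi-hop questions such as "which suppliers is this customer indirectly connected to" become a traversal rather than a chain of joins, which is the main appeal of the graph approach for highly connected data.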

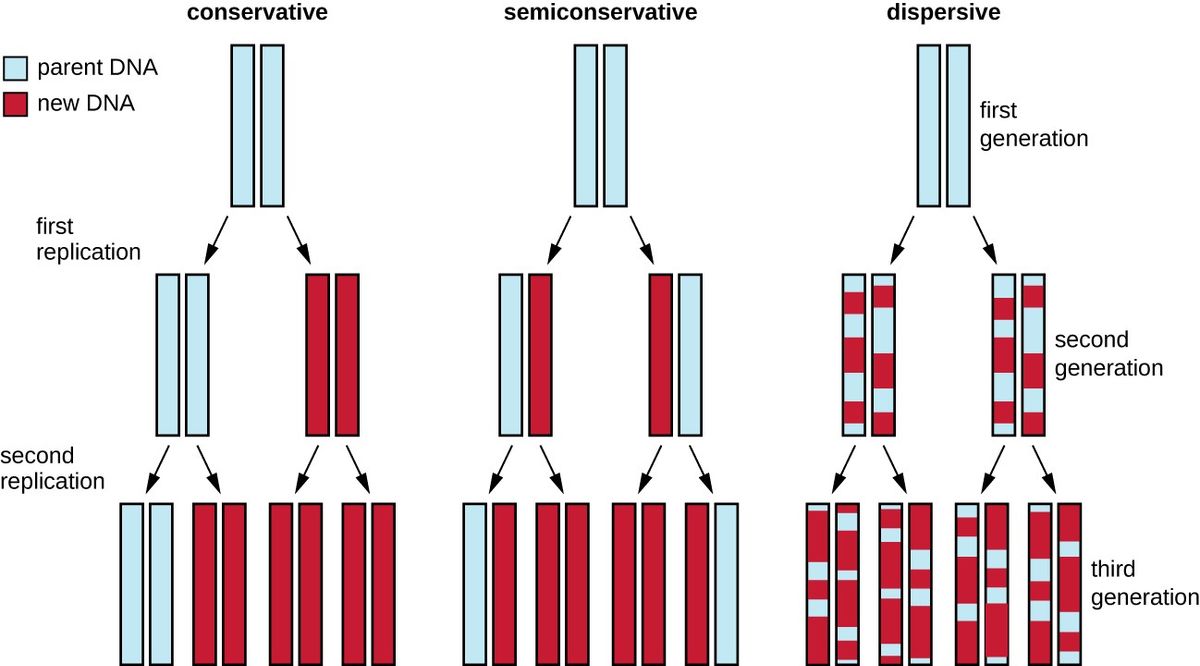

Exploring Data Modeling Algorithms: How They Enhance Business Efficiency

In the realm of data science and analytics, data modeling algorithms stand as critical tools for uncovering efficiencies and streamlining business processes. These algorithms transform raw data into a structured form, making it easier to identify patterns, trends, and relationships that can drive strategic decisions.

Data modeling algorithms are not just about organizing data; they are about revealing the story that data tells about business operations and opportunities.

For instance, AI-driven data modeling leverages artificial intelligence to automate the creation and refinement of data models. This approach can significantly reduce the time and effort required for data preparation, allowing analysts to focus on extracting insights. Below are the key approaches that enhance business efficiency:

- AI-Driven Data Modeling: Automated and intelligent data structuring

- Graph Data Modeling: Handling complex relationships in Big Data

- Streaming Data Modeling: Techniques for real-time data streams

- Explainable AI in Data Modeling: Transparency in AI-driven models

By embracing these advanced data modeling techniques, businesses can navigate the complexities of Big Data, ensuring data quality, scalability, and agility. As the technology industry continues to evolve, the mastery of these algorithms will be indispensable for maintaining a competitive edge.
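As one small example of the streaming approach listed above, the sketch below keeps a running count and mean per key while events arrive one at a time, so no event needs to be stored. The `RunningStats` class, the `event_stream` generator, and the sensor names are hypothetical stand-ins for a real event source.

```python
from collections import defaultdict


class RunningStats:
    """Per-key count and mean, updated incrementally as events arrive."""

    def __init__(self):
        self.count = defaultdict(int)
        self.mean = defaultdict(float)

    def update(self, key, value):
        self.count[key] += 1
        # Incremental mean: shift the old mean toward the new value by 1/n.
        self.mean[key] += (value - self.mean[key]) / self.count[key]


def event_stream():
    # Stand-in for a continuous source such as a message queue (assumption).
    yield ("sensor-a", 10.0)
    yield ("sensor-b", 4.0)
    yield ("sensor-a", 20.0)


stats = RunningStats()
for key, value in event_stream():
    stats.update(key, value)

print(dict(stats.mean))
```

The state kept per key has a fixed size regardless of how many events have been seen, which is the property that makes a model usable on an unbounded stream.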

Mastering Data Modeling Techniques: A Must-Know for Every Data Professional

A Step-by-Step Tutorial on Data Modeling for Beginners

Embarking on the journey of data modeling can be daunting for beginners, but with a structured approach, it becomes an achievable task. Start by gathering business requirements, which will guide the entire modeling process. Understanding what the business needs informs the scope and complexity of your data model.

Next, define the business processes that your data model will need to support. This involves identifying the key operations, tasks, and workflows that the data will facilitate. Once you have a clear picture of the processes, you can begin to create a conceptual data model. This high-level representation outlines the main entities and relationships without delving into technical details.

The goal of a conceptual data model is to establish a shared vocabulary and a broad understanding of the data landscape among stakeholders.

As you progress, refine your model by adding more detail and structure. This iterative process will help you build a robust and effective data model that aligns with your business objectives.

Best Practices for Effective Data Modeling

Effective data modeling is a critical skill for any data professional. It lays the groundwork for robust analytics and insightful decision-making. Here are three best practices to ensure your data models are both powerful and flexible:

- Keep Business Objectives in Mind: Always align your data model with the strategic goals of your organization.

- Properly Document Your Data Model: This includes not just the model itself, but also the reasoning behind design decisions.

- Design Your Data Model to Be Adjustable Over Time: Anticipate future changes and make sure your model can adapt.

Embracing these practices will not only streamline your data management processes but also enhance the overall quality of your data insights.

Remember, a well-constructed data model is the foundation upon which all data analysis is built. It’s not just about storing data; it’s about making that data work for you in the most efficient way possible.

Choosing the Right Data Modeling Methodology for Your Project: A Step-by-Step Analysis

Selecting the appropriate data modeling methodology is a pivotal decision that can significantly influence the success of your project. To choose the right data modeling technique, it’s crucial to identify data sources and evaluate their quality. This step involves determining where the data is coming from and assessing its accuracy and consistency.

The process of choosing a data modeling methodology should be methodical and tailored to the specific needs of your project. Consider factors such as the complexity of the data, the scalability requirements, and the intended use of the data model.

Here are some steps to guide you through the selection process:

- Define the purpose and scope of your data model.

- Identify and understand your data sources.

- Evaluate different data modeling techniques and tools.

- Consider the scalability and flexibility of the model.

- Assess the technical expertise of your team.

- Align the methodology with your project’s goals and constraints.

By following these steps, you can ensure that the methodology you choose will support your project’s objectives and provide a robust framework for your data.

The Magic of Data Transformation: Unlocking Hidden Patterns and Trends

From Chaos to Clarity: Exploring the Art of Data Transformation and Its Impact on Decision-Making

In the realm of data science, the process of transforming raw data into a structured and meaningful format is both an art and a science. Data transformation is a critical step in the journey from data collection to actionable insights. It involves cleaning, aggregating, and manipulating data to uncover patterns and trends that inform strategic decision-making.

- Identify and clean anomalies in the data

- Aggregate data from multiple sources

- Apply transformations to enhance data quality

- Analyze transformed data to uncover insights
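The four steps above can be sketched as a small pipeline in plain Python. The two sources (`store_sales`, `web_sales`), their fields, and the cleaning rules (drop non-numeric and negative amounts, normalize region names) are assumptions made for this example. Real rules depend on the data at hand.

```python
from collections import defaultdict

# Two hypothetical sources with inconsistent formatting and a few anomalies.
store_sales = [
    {"region": "north", "amount": "120.50"},
    {"region": "North ", "amount": "80"},
    {"region": "south", "amount": "n/a"},  # anomaly: not a number
]
web_sales = [
    {"region": "south", "amount": "200"},
    {"region": "north", "amount": "-5"},   # anomaly: negative amount
]


def clean(rows):
    """Steps 1 and 3: drop anomalous rows and normalize the remaining values."""
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip values that cannot be parsed as numbers
        if amount < 0:
            continue  # skip negative amounts, treated as errors in this sketch
        yield {"region": row["region"].strip().lower(), "amount": amount}


def total_by_region(*sources):
    """Step 2: aggregate the cleaned rows from every source into one view."""
    totals = defaultdict(float)
    for source in sources:
        for row in clean(source):
            totals[row["region"]] += row["amount"]
    return dict(totals)


# Step 4: the aggregated result is what analysis would start from.
totals = total_by_region(store_sales, web_sales)
print(totals)
```

Dropping anomalous rows silently is the simplest policy. In practice it is often better to count or log rejected rows so that data quality problems stay visible.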

Data transformation is not just about changing the format of data; it’s about enhancing its value and utility for better decision-making.

The synergy between data transformation and analytics is undeniable. By applying the right transformation techniques, businesses can move from a state of chaos, where data is abundant but not useful, to a state of clarity, where data becomes a powerful tool for driving business strategies and outcomes.

Unleashing the Power of Data Transformation: How to Turn Raw Information into Actionable Insights

The actual value of data lies in its ability to generate actionable intelligence. Raw data alone may not be helpful unless transformed into meaningful insights that can drive decision-making processes. By analyzing large datasets using advanced analytics tools, organizations can uncover hidden patterns or anomalies in data. This analysis can reveal valuable insights that may have gone unnoticed.

Data transformation is not just about changing the format of data; it’s about refining data into a strategic asset that informs and guides business strategies.

To effectively transform data, it’s essential to follow a structured approach:

- Identify the key data sources and ensure their quality.

- Choose the right tools and technologies for data processing.

- Apply appropriate data modeling techniques to organize and interpret the data.

- Utilize advanced analytics to extract meaningful patterns and trends.

- Translate these insights into actionable business decisions.

Each step in this process is crucial for turning raw numbers into insights that can meaningfully affect business outcomes. Data transformation is relevant across many domains, from business intelligence to machine learning.

Beyond Numbers: Exploring the Art of Data Transformation

Data transformation is not just about changing the format or structure of data; it’s about unveiling the story that lies within. It involves manipulating, cleaning, and reorganizing data to make it more suitable for analysis, visualization, or storage. This transformation allows data to reveal patterns and insights that were previously obscured.

Data transformation is a critical step in the data lifecycle. It ensures that data is in the right form and quality for meaningful analysis.

Understanding the process of data transformation is crucial for anyone working with data. Here are some key steps involved:

- Data Cleaning: Remove inaccuracies and correct errors.

- Data Integration: Combine data from different sources.

- Data Reduction: Simplify data without losing its essence.

- Data Discretization: Convert continuous data into categorical data.
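Of the steps above, discretization is the easiest to show in a few lines. The sketch below maps a continuous value to a category using sorted bin edges. The function name `discretize` and the age bands are hypothetical, and the edges are assumed to be sorted in ascending order.

```python
from bisect import bisect_right


def discretize(value, edges, labels):
    """Map a continuous value to a label; edges must be sorted ascending."""
    if len(labels) != len(edges) + 1:
        raise ValueError("need exactly one more label than edges")
    # bisect_right counts how many edges are <= value, which is the bin index.
    return labels[bisect_right(edges, value)]


# Illustrative age bands (assumption): <18, 18-34, 35-64, 65+.
AGE_EDGES = [18, 35, 65]
AGE_LABELS = ["minor", "young adult", "adult", "senior"]

ages = [12, 18, 34, 35, 70]
groups = [discretize(age, AGE_EDGES, AGE_LABELS) for age in ages]
print(groups)
```

Each edge is treated as the inclusive lower bound of the next bin, so a value of exactly 18 falls into "young adult" rather than "minor". Whichever convention is chosen should be documented alongside the model.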

By mastering these steps, professionals can transform raw data into a strategic asset, driving better decision-making and offering a competitive edge.

Demystifying Data Warehouse Concepts: From ETL to OLAP and Beyond

Best Practices for Optimal Data Warehouse Design

Designing an optimal data warehouse is a critical step for ensuring efficient data management and retrieval. Careful planning and adherence to best practices are essential for success.

- Scalability: Plan for future growth to avoid costly overhauls.

- Performance: Optimize for query speed and data loading efficiency.

- Quality: Implement data validation and cleansing to maintain integrity.

- Security: Protect sensitive data with robust access controls and encryption.

A well-designed data warehouse not only supports current analytical needs but also adapts to evolving business requirements. It’s the backbone of informed decision-making.

Remember, the goal is to create a data warehouse that is both flexible and reliable. Regularly review and update your design to incorporate new technologies and methodologies that can enhance performance and provide competitive advantages.

Establishing Effective Data Modeling Governance: Key Strategies

Establishing a robust data modeling governance framework is essential for ensuring the integrity and utility of a data warehouse. Clear policies and procedures must be in place to maintain data quality and consistency across the organization.

- Define roles and responsibilities: Assign specific tasks to team members to ensure accountability.

- Establish data standards: Create uniform data definitions and formats to facilitate data integration.

- Implement data quality measures: Regularly review and cleanse data to maintain its accuracy.

- Enforce compliance: Adhere to legal and regulatory requirements to protect data privacy and security.
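Data standards and quality measures are easier to enforce when they are written down as executable rules. The sketch below is one possible shape for that, assuming a hypothetical customer record with `customer_id`, `email`, and `country` fields. The rules themselves, including the deliberately loose email pattern, are examples rather than recommended standards.

```python
import re

# Each standard maps a field name to a predicate that accepts valid values.
# Field names and rules are illustrative assumptions.
STANDARDS = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str)
    and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    "country": lambda v: isinstance(v, str) and re.fullmatch(r"[A-Z]{2}", v) is not None,
}


def violations(record):
    """Return a list of human-readable problems found in one record."""
    problems = []
    for field_name, is_valid in STANDARDS.items():
        if field_name not in record:
            problems.append(f"{field_name}: missing")
        elif not is_valid(record[field_name]):
            problems.append(f"{field_name}: invalid value {record[field_name]!r}")
    return problems


good = {"customer_id": 1, "email": "a@b.co", "country": "DE"}
bad = {"customer_id": 0, "email": "nope"}

print(violations(good))
print(violations(bad))
```

Keeping the rules in one shared structure gives data stewards a single place to review and update standards, and the same checks can run during regular quality reviews.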

Effective governance is not just about setting rules; it’s about creating a culture of data stewardship where everyone understands the importance of maintaining high-quality data.

By focusing on these key areas, organizations can create a governance model that not only protects their data assets but also enhances the overall effectiveness of their data modeling efforts.

Introduction to Data Modeling: Understanding the Language and Methodology

Data modeling is the process of creating a visual representation of data, defining its structure, relationships, and constraints. It serves as a blueprint for both the technical and business aspects of data management, ensuring that data is organized in a way that accurately reflects real-world entities.

The language of data modeling includes various notations and symbols that are used to articulate the model’s components. These notations are essential for effective communication among stakeholders and for the accurate implementation of the data model in database systems.

Data modeling methodologies provide a systematic approach to developing data models. They guide the modeler through a series of steps to capture all necessary details about the data and its use within the organization.

Understanding these methodologies is crucial for selecting the right one for your project. Below is a list of common methodologies used in data modeling:

- Entity-Relationship (ER) Modeling

- Dimensional Modeling

- Object-Role Modeling (ORM)

- Unified Modeling Language (UML)

Each methodology has its own strengths and is suited to different types of projects. For instance, ER modeling is widely used for designing relational databases, while UML is more versatile and can be applied to a variety of software development projects.
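To give a feel for how dimensional modeling differs from ER modeling, the sketch below builds a very small star schema in SQLite: one fact table of sales surrounded by product and date dimensions, followed by a roll-up query. Table names, columns, and sample rows are all hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive context (illustrative names and columns).
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT NOT NULL);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER NOT NULL);

-- The fact table holds measurements plus a key into each dimension.
CREATE TABLE fact_sales (
    product_key INTEGER NOT NULL REFERENCES dim_product(product_key),
    date_key    INTEGER NOT NULL REFERENCES dim_date(date_key),
    amount      REAL NOT NULL
);

INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
INSERT INTO dim_date VALUES (20230101, 2023), (20240101, 2024);
INSERT INTO fact_sales VALUES (1, 20230101, 10.0), (1, 20240101, 15.0), (2, 20240101, 30.0);
""")

# Roll the facts up by two dimension attributes, as an analytical query would.
rollup = conn.execute("""
SELECT p.category, d.year, SUM(f.amount)
FROM fact_sales AS f
JOIN dim_product AS p ON p.product_key = f.product_key
JOIN dim_date AS d ON d.date_key = f.date_key
GROUP BY p.category, d.year
ORDER BY p.category, d.year
""").fetchall()

print(rollup)
```

The schema is shaped around the questions analysts ask (sales by category, by year) rather than around minimizing redundancy, which is the main trade-off between dimensional and normalized ER designs.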

Conclusion

As we have explored throughout this guide, data modeling is an indispensable tool in the quest to unlock the potential of data. It serves as the blueprint for organizing, managing, and analyzing information, enabling businesses to transform raw data into actionable insights. By understanding and applying the principles of data modeling, organizations can improve decision-making, optimize performance, and gain a competitive edge in today’s data-driven landscape. Whether you are a beginner or an experienced professional, mastering data modeling techniques is crucial for leveraging the full power of your data assets. As the digital universe continues to expand, the art and science of data modeling will remain a cornerstone of effective data management, ensuring that businesses can capitalize on the opportunities presented by Big Data and thrive in the digital age.

Frequently Asked Questions

What is data modeling and why is it essential for businesses?

Data modeling is the process of defining the structure, relationships, and constraints of the data an organization stores. It is essential for businesses because it helps them organize, understand, and use their data effectively, enabling better decision-making and efficient operations.

How does data modeling in Power BI enhance data analysis?

Data modeling in Power BI organizes data into tables and relationships, improving performance and user experience. It allows for insightful visualizations and informed business decisions through advanced techniques like DAX calculations and optimizing data relationships.

What are some best practices for effective data modeling?

Best practices for effective data modeling include understanding the business context, using normalization to reduce data redundancy, defining clear relationships between tables, ensuring data integrity, and regularly reviewing the model for potential improvements.

Can you explain the architecture of data modeling?

The architecture of data modeling involves the layers of abstraction from conceptual models (high-level business concepts) to logical models (more detailed, attribute-rich structures) and finally to physical models (actual database implementation).

What role does data transformation play in data modeling?

Data transformation is the process of converting raw data into a format that is more suitable for analysis. In data modeling, it helps in cleaning, structuring, and enriching data, thus revealing hidden patterns and trends that inform strategic decisions.

How does data modeling contribute to the success of Big Data projects?

Data modeling is critical for Big Data projects as it provides a structured approach to managing vast datasets, ensuring data quality and consistency, and enabling complex data analytics, which in turn leads to valuable insights and strategic advantages.