
Tabular data is one of the most important ways to organize and interpret digital information. Working with it means not only understanding structured data but also transforming it for advanced analytics. This article explores several facets of tabular data analysis, from improving data quality to extracting tables from PDFs with tools such as Tabula-py, and looks ahead to how federated learning and self-supervised techniques may shape tabular data handling.

Key Takeaways

- Tabular data is the backbone of structured digital information, essential for efficient data interpretation and analysis.

- Enhancing tabular data quality involves techniques such as data augmentation and addressing measurement errors, but requires careful consideration of the dataset’s structure.

- Tabula-py is a valuable tool for converting PDF tables into analyzable formats, though it has limitations such as potential loss of column headings during extraction.

- Experimental comparisons between Tabula-py and other extraction methods reveal insights into performance and accuracy in transforming PDF documents into structured data.

- The future of tabular data analysis is promising, with innovations like the TabFedSL framework advancing the field in federated learning environments.

Understanding Tabular Data in Digital Analysis

The Role of Tables in Structured Information

In the realm of digital data analysis, tables serve as the fundamental framework for organizing and presenting information in a structured manner. Tables facilitate the clear and concise display of data, allowing for quick comprehension and efficient analysis. They are particularly indispensable in fields where precision and clarity are paramount, such as finance and scientific research.

The transformation of raw data into a tabular format is a critical step in the data analysis process. This structured approach to data organization enables the following:

- Identification of patterns: By arranging data in rows and columns, patterns and trends become more apparent.

- Ease of comparison: Tables allow for the side-by-side comparison of different data sets or variables.

- Data integrity: Structured tables help maintain data accuracy and consistency.

The challenge, however, lies in the initial transformation of raw data into a structured table. This process often requires sophisticated algorithms and tools to accurately interpret and reconstruct the data layout.

For instance, after performing Optical Character Recognition (OCR) on scanned documents, algorithms work to recreate the table structure. They detect alignment, spacing, and geometric relationships between text blocks, which are essential for identifying rows, columns, and headers. This meticulous process ensures that the data is not only readable but also analyzable.
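As a rough illustration of that reconstruction step, the sketch below groups OCR word boxes into rows by vertical position and into columns by horizontal position, then treats the first row as the header. It assumes each box is a hypothetical `(x, y, text)` tuple holding the left and top coordinates of a word, that columns are left-aligned, and that a fixed pixel tolerance separates rows and columns; real tools use much richer layout cues.

```python
import pandas as pd


def cluster_positions(values, tolerance):
    """Group sorted coordinates into clusters whose neighbours lie within
    `tolerance` of each other, and return the mean coordinate of each cluster."""
    clusters = []
    for v in sorted(values):
        if clusters and v - clusters[-1][-1] <= tolerance:
            clusters[-1].append(v)
        else:
            clusters.append([v])
    return [sum(c) / len(c) for c in clusters]


def boxes_to_dataframe(boxes, tolerance=5.0):
    """Rebuild a table from OCR word boxes given as (x, y, text) tuples."""
    row_centers = cluster_positions([y for _, y, _ in boxes], tolerance)
    col_centers = cluster_positions([x for x, _, _ in boxes], tolerance)

    def nearest(centers, value):
        return min(range(len(centers)), key=lambda i: abs(centers[i] - value))

    # Place every word in the grid cell whose row and column centres are closest.
    grid = [[""] * len(col_centers) for _ in row_centers]
    for x, y, text in boxes:
        r, c = nearest(row_centers, y), nearest(col_centers, x)
        grid[r][c] = (grid[r][c] + " " + text).strip()

    header, *body = grid  # assume the topmost row holds the column headings
    return pd.DataFrame(body, columns=header)
```

For example, boxes for "Item"/"Qty" at y ≈ 100 and "Apple"/"3" at y ≈ 120 become a two-column DataFrame with headers `Item` and `Qty`. Right-aligned numeric columns would need clustering on the right edge instead.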

Challenges in Raw Table Transformation

Transforming raw tables into a format suitable for advanced analytics is a complex task that often involves several intricate steps. The journey from a table in a PDF document or an image to a structured dataframe is not straightforward. It typically includes identifying the table region (detection), extracting the table text (extraction), and finally, recreating the table data into rows and columns (restructuring).

One of the primary challenges is accurately delineating individual cells, especially when they are closely spaced or lack clear separators. Tuning parameters such as the iou_threshold (the intersection-over-union cutoff that typically decides whether two detected boxes are treated as the same cell) until the output closely resembles the original table can be painstaking. Extraction must also respect the nuances of the table’s design to preserve the integrity of the information.
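Intersection over union (IoU) is the area two boxes share divided by the area they cover together. A minimal sketch of one common use of an `iou_threshold`, assuming detected cells arrive as hypothetical `(x0, y0, x1, y1, score)` tuples: keep the highest-scoring box and discard any remaining box that overlaps an already kept box by more than the threshold (greedy non-maximum suppression). This is a generic illustration, not the implementation of any specific extraction library.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1, ...)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def suppress_duplicate_cells(cells, iou_threshold=0.5):
    """Greedy non-maximum suppression over (x0, y0, x1, y1, score) cell boxes."""
    kept = []
    for cell in sorted(cells, key=lambda c: c[4], reverse=True):
        # Keep a cell only if it does not overlap any stronger kept cell too much.
        if all(iou(cell, k) <= iou_threshold for k in kept):
            kept.append(cell)
    return kept
```

Raising the threshold keeps more closely spaced boxes (risking duplicate cells); lowering it suppresses more of them, which can swallow genuinely adjacent cells.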

The ultimate objective of these methods is to convert the table image into a dataframe so that questions about it can be answered programmatically or by a large language model (LLM).

Another significant challenge is data quality. Data augmentation and noise addition can enhance datasets, but they must be applied judiciously, since indiscriminate use can reduce the data’s usefulness. The end goal is for analysts to connect raw data to business strategy, turning meaningful insights into actionable decisions.

Advancements in Tabular Data Interpretation

The interpretation of tabular data has seen significant advancements, particularly with the integration of Large Language Models (LLMs). These models have revolutionized the way we approach structured data, offering new methods to extract and analyze information with greater precision.

Recent work has focused on adapting tabular data for LLMs, leading to more sophisticated analysis techniques. Progress in encoding tabular data extends beyond LLMs as well: the TabCSDI framework, a diffusion-based imputation method, was introduced to handle both categorical and numerical variables and improve the imputation of tabular data.

The synergy between advanced encoding techniques and LLMs has opened up new possibilities for data scientists, allowing for more nuanced interpretations and richer insights from tabular datasets.

These models have also made it much easier to transform raw tables into analyzable formats, so that advanced analytics can be run programmatically and processes across many domains can be streamlined. Key among the supporting techniques are the encodings used to represent categorical columns:

- One-hot encoding

- Analog bits encoding

- Feature tokenization

In empirical evaluations, models using these encodings have significantly outperformed existing methods, and the results show that the choice of categorical embedding technique matters.
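To make the first two encodings concrete, here is a small NumPy sketch for one categorical column with `k` categories, assuming the categories are already mapped to integer indices. One-hot encoding uses `k` columns; analog bits encoding writes the index in binary using ceil(log2 k) columns and rescales each bit from {0, 1} to {-1, 1}, so a model of continuous values can treat it as a real number and the category is recovered by thresholding at 0. Feature tokenization maps each feature to a learned embedding vector and needs a trained model, so it is omitted here.

```python
import math

import numpy as np


def one_hot(indices, k):
    """Encode integer category indices as k-dimensional one-hot vectors."""
    indices = np.asarray(indices)
    out = np.zeros((len(indices), k))
    out[np.arange(len(indices)), indices] = 1.0
    return out


def analog_bits(indices, k):
    """Encode indices as ceil(log2 k) binary digits scaled to {-1.0, 1.0}."""
    n_bits = max(1, math.ceil(math.log2(k)))
    indices = np.asarray(indices)[:, None]
    bits = (indices >> np.arange(n_bits - 1, -1, -1)) & 1  # most significant bit first
    return bits * 2.0 - 1.0


def decode_analog_bits(x):
    """Threshold real-valued bits at 0 and read them back as integer indices."""
    bits = (np.asarray(x) > 0).astype(int)
    weights = 2 ** np.arange(bits.shape[1] - 1, -1, -1)
    return bits @ weights
```

With `k = 5`, one-hot needs five columns while analog bits needs three; for example, index 4 becomes `[1.0, -1.0, -1.0]`.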

Improving Tabular Data Quality for Enhanced Analysis

Data Augmentation and Noise Addition Techniques

In the realm of tabular data analysis, data augmentation is a pivotal technique for enhancing the robustness and generalizability of models. By introducing various types of noise, such as Gaussian or swap noise, we can simulate a range of conditions that the data may encounter in real-world scenarios. This process not only improves model performance but also aids in addressing the privacy challenge by transforming sensitive data into a less identifiable form.

The augmentation process typically creates a binomial mask and a corresponding noise matrix, and applies them to a subset of the data using the Hadamard (element-wise) product. The table below lists the types of noise added to the dataset and their intended effects:

| Noise Type | Description | Intended Effect |
|---|---|---|
| Gaussian Noise | Adds noise drawn from a normal distribution | Increases robustness to variations |
| Swap Noise | Replaces randomly selected entries with values from other rows of the same column | Enhances generalizability |
| Zero-out | Sets randomly selected entries to 0 | Addresses privacy and overfitting |
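A minimal NumPy sketch of the three noise types, assuming a numeric feature matrix `x`, a mask probability `p`, and the convention `x_tilde = (1 - m) ⊙ x + m ⊙ noise`, where `m` is the binomial (Bernoulli) mask and ⊙ is the Hadamard product. The function name `augment` and the Gaussian scale `sigma` are illustrative choices, not taken from a specific framework.

```python
import numpy as np


def augment(x, kind, p=0.2, sigma=0.1, rng=None):
    """Corrupt roughly a fraction p of the entries of x; return (x_tilde, mask)."""
    rng = np.random.default_rng(rng)
    x = np.asarray(x, dtype=float)
    mask = rng.binomial(1, p, size=x.shape)  # 1 = corrupt this entry

    if kind == "gaussian":
        noise = x + rng.normal(0.0, sigma, size=x.shape)
    elif kind == "swap":
        # Each column of the noise matrix is a shuffled copy of the same column,
        # so a corrupted entry takes a value seen elsewhere in that feature.
        noise = np.column_stack([rng.permutation(col) for col in x.T])
    elif kind == "zero":
        noise = np.zeros_like(x)
    else:
        raise ValueError(f"unknown noise type: {kind}")

    x_tilde = (1 - mask) * x + mask * noise  # element-wise (Hadamard) products
    return x_tilde, mask
```

Returning the mask alongside the corrupted data keeps track of which entries were altered, which is useful when the same mask is later needed for evaluation or as a training target.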

While this technique can significantly enhance data quality, it is crucial to apply noise judiciously. Uniformly treating all data with noise may lead to suboptimal outcomes, as different datasets may require tailored augmentation strategies to yield the best enhancement results.

In distributed environments with limited data annotation, our framework’s noise addition to different subsets results in high-quality data annotations, bridging the gap with centralized learning methods.

Ultimately, the goal is to leverage uniform feature distributions to generate high-quality data, augmenting the dataset and improving the quality of its labels. This strengthens data labeling, a critical aspect of machine learning.

Addressing Estimation and Measurement Errors

In the realm of tabular data analysis, estimation and measurement errors can significantly distort the insights derived from the data. Addressing these errors is crucial for maintaining the integrity of the analysis. Traditional imputation methods, such as mean, median, and mode, are straightforward but often fall short when dealing with complex data structures or missing mechanisms that do not follow the Missing Completely at Random (MCAR) assumption.
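The traditional methods named above each take a line or two in pandas. This sketch, assuming a hypothetical DataFrame with numeric and categorical columns, fills numeric gaps with the column mean or median and categorical gaps with the mode. Each column gets a single fill value regardless of why a value is missing, which is why such methods become unreliable when the MCAR assumption does not hold.

```python
import pandas as pd


def simple_impute(df, numeric_strategy="mean"):
    """Fill missing values column by column: mean or median for numeric
    columns, mode (most frequent value) for everything else."""
    out = df.copy()
    for col in out.columns:
        if out[col].notna().sum() == 0:
            continue  # nothing observed, nothing to impute from
        if pd.api.types.is_numeric_dtype(out[col]):
            if numeric_strategy == "mean":
                fill = out[col].mean()
            else:
                fill = out[col].median()
        else:
            fill = out[col].mode().iloc[0]
        out[col] = out[col].fillna(fill)
    return out
```

For an `age` column with observed values 10, 20 and 60, mean imputation fills 30 while median imputation fills 20, a reminder that even the simple methods encode different assumptions.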

The non-robustness of traditional approaches necessitates the development of more sophisticated techniques to handle the nuances of special missing mechanisms and ensure the accuracy of imputations.

To evaluate imputation models robustly, researchers commonly report Mean Absolute Error (MAE), a balanced measure of imputation performance that does not disproportionately penalize large errors. It is often paired with Root Mean Square Error (RMSE) for a more comprehensive evaluation:

| Metric | Description |
|---|---|
| MAE | Average absolute difference between imputed and true values, ignoring the direction of each error. |
| RMSE | Square root of the average squared difference; squaring gives more weight to larger errors. |
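A short NumPy sketch of both metrics, computed only over the entries that were artificially hidden and then imputed, which is a common evaluation protocol. The `missing_mask` argument (1 where a value was hidden) is an assumed input.

```python
import numpy as np


def imputation_errors(x_true, x_imputed, missing_mask):
    """Return (MAE, RMSE) over the entries flagged in missing_mask."""
    mask = np.asarray(missing_mask, dtype=bool)
    diff = np.asarray(x_imputed, dtype=float)[mask] - np.asarray(x_true, dtype=float)[mask]
    mae = np.mean(np.abs(diff))
    rmse = np.sqrt(np.mean(diff ** 2))
    return mae, rmse
```

With two imputation errors of 1 and 3, MAE is 2.0 while RMSE is sqrt(5), about 2.24: the larger error pulls RMSE up more than it does MAE.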

The variability in methods for generating missing data and the lack of uniformity in model performance comparison underscore the need for a standardized approach to address estimation and measurement errors in tabular data.

Column Density Plots and Visualization Alternatives

In tabular data analysis, column density plots have become an important tool for judging imputation quality, since, unlike with image data, imputed values cannot be checked simply by looking at the reconstructed sample. These plots show how values are distributed within individual columns, which helps reveal patterns and anomalies. However, they may not suffice for complex datasets with intricate missing mechanisms, where a more comprehensive analysis is necessary.

Visualization techniques are pivotal in assessing the quality of imputed missing data. While scatter plots are excellent for low-dimensional data, high-dimensional datasets require more sophisticated visualization methods to effectively evaluate imputation performance.

For instance, consider the following table summarizing the effectiveness of different visualization techniques based on the dimensionality of the data:

| Data Dimensionality | Preferred Visualization Technique |
|---|---|
| Low-dimensional | Scatter Plots |
| High-dimensional | Column Density Plots |

It is evident that as the dimensionality of data increases, the utility of scatter plots diminishes, and column density plots become more advantageous. This transition underscores the need for alternative visualization strategies that can accommodate the complexity of high-dimensional tabular data.
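Producing a column density plot mostly comes down to estimating, for each column, the distribution of the observed values and of the imputed values on a common grid. A minimal NumPy sketch, using normalized histograms as a simple stand-in for kernel density estimates and assuming every column has at least one observed (non-NaN) value; the returned curves can be drawn with any plotting library.

```python
import numpy as np


def column_densities(observed, imputed, bins=20):
    """For each column, return (bin_edges, observed_density, imputed_density).

    `observed` may contain NaN for missing entries; `imputed` is the completed
    matrix. Normalized histograms stand in for kernel density estimates.
    """
    observed = np.asarray(observed, dtype=float)
    imputed = np.asarray(imputed, dtype=float)
    results = []
    for j in range(observed.shape[1]):
        obs = observed[:, j][~np.isnan(observed[:, j])]
        imp = imputed[:, j]
        lo = min(obs.min(), imp.min())
        hi = max(obs.max(), imp.max())
        if hi == lo:
            hi = lo + 1.0  # avoid zero-width bins for constant columns
        edges = np.linspace(lo, hi, bins + 1)  # shared grid for both curves
        obs_density, _ = np.histogram(obs, bins=edges, density=True)
        imp_density, _ = np.histogram(imp, bins=edges, density=True)
        results.append((edges, obs_density, imp_density))
    return results
```

Because each column is summarized by one curve per source, the approach scales to many columns where pairwise scatter plots would not.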

Tabula-py: Bridging the Gap Between PDFs and DataFrames

Automating Data Extraction with Python Scripts

The advent of libraries like tabula-py has revolutionized the way we approach data extraction from PDFs. By automating tasks through Python scripts, analysts can now convert complex PDF documents into structured DataFrames, paving the way for advanced analytics. This process typically involves the detection and extraction of tables from PDFs, which are then reconstructed into a cohesive table structure.

In a typical setup, the input is a single PDF document, or a directory of PDF documents, containing both paragraphs and tables, and the output is a single CSV file in which all extracted tables are combined.
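A minimal sketch of that workflow, assuming tabula-py and a Java runtime are installed. `tabula.read_pdf(..., pages="all")` returns a list of DataFrames, one per detected table; the helper names `extract_tables`, `combine_tables` and `pdfs_to_csv`, and the `source`/`table_index` bookkeeping columns, are illustrative additions rather than part of tabula-py. (For one CSV per PDF instead of a combined file, tabula-py also provides `convert_into_by_batch`.)

```python
from pathlib import Path

import pandas as pd


def extract_tables(pdf_path, lattice=False):
    """Return one DataFrame per table detected on any page of the PDF."""
    import tabula  # tabula-py; needs a Java runtime, imported lazily

    return tabula.read_pdf(str(pdf_path), pages="all", lattice=lattice)


def combine_tables(named_tables):
    """Stack tables from several sources into one DataFrame.

    `named_tables` maps a source name (e.g. a PDF file name) to its list of
    tables. Columns are aligned by name, so tables with different headings end
    up in different columns, padded with NaN.
    """
    frames = [
        df.assign(source=source, table_index=i)
        for source, tables in named_tables.items()
        for i, df in enumerate(tables)
    ]
    if not frames:
        return pd.DataFrame()
    return pd.concat(frames, ignore_index=True, sort=False)


def pdfs_to_csv(input_path, output_csv, lattice=False):
    """Extract every table from a PDF, or from all PDFs in a directory, into one CSV."""
    path = Path(input_path)
    pdfs = sorted(path.glob("*.pdf")) if path.is_dir() else [path]
    named = {p.name: extract_tables(p, lattice=lattice) for p in pdfs}
    combine_tables(named).to_csv(output_csv, index=False)
```

Because `pd.concat` aligns tables by column name, a table whose headings were lost or misread shows up as its own set of mostly empty columns in the combined CSV, which makes the problem easy to spot.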

However, it’s important to note that during the conversion process, certain elements such as column headings may be lost, which poses a challenge for subsequent information extraction. To illustrate the process, consider the following pipeline for table extraction:

- Table Detection

- Table Text Extraction

- Recreating Table Structure

While tabula-py is a powerful tool, it’s essential to be aware of its limitations and to have strategies in place for addressing potential data quality issues.

Converting PDF Tables to Analyzable Formats

The transition from static PDF tables to dynamic, analyzable formats is a critical step in data analysis. Tabula-py stands out by automating this process, allowing for the seamless conversion of tables into pandas DataFrames or CSV/TSV files. This capability is essential for integrating tabular data into analytical workflows where Python’s powerful libraries can be leveraged for advanced analytics.

The conversion process typically involves the following steps:

- Identifying the table region within the PDF.

- Extracting the text and numerical data from the table.

- Recreating the table structure in a DataFrame or CSV format, ensuring that the data is structured correctly for analysis.

While Tabula-py facilitates a smooth conversion, it’s important to note that certain limitations exist. For instance, column headings may not always be preserved, especially when dealing with unbordered tables. This can pose challenges for subsequent information extraction and analysis.
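When headings are lost, one workaround is to read the table without treating any row as a header and then restore the known column names. In tabula-py, passing `pandas_options={"header": None}` to `read_pdf` keeps the first row as data. The helper below, `apply_known_headers`, is a hypothetical sketch that assigns expected names and can also drop a first row that contains garbled heading text.

```python
import pandas as pd


def apply_known_headers(df, headers, first_row_is_header=False):
    """Assign expected column names to an extracted table.

    If first_row_is_header is True, the first row holds the (possibly garbled)
    heading text and is dropped before the names are applied.
    """
    if len(headers) != df.shape[1]:
        raise ValueError(f"expected {df.shape[1]} headers, got {len(headers)}")
    out = df.iloc[1:] if first_row_is_header else df
    out = out.reset_index(drop=True)  # returns a new frame; df is untouched
    out.columns = list(headers)
    return out
```

Checking the column count first matters for unbordered tables, where incomplete column extraction can leave fewer columns than expected and silently misalign the names.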

Figures 1 and 3 contrast a table as it appears in the original PDF with the DataFrame that Tabula-py extracts from it:

| Original PDF Table | Extracted DataFrame |
|---|---|
| Fig 1: Original | Fig 3: Tabula-py |

Despite these challenges, the ability to convert PDF tables into formats that are ready for analysis is invaluable. It enables researchers and analysts to harness the full potential of their data, which would otherwise be trapped within static documents.

Limitations and Considerations in Data Conversion

While tabular data conversion tools like Tabula-py offer significant advantages in automating the extraction process, they are not without their limitations. Certain imputation techniques struggle with non-numerical data, revealing a gap in handling diverse data types. Real-world datasets often contain a mix of numerical, categorical, and even binary or ordinal data, which can pose challenges for traditional machine learning and statistical-based models.

The computational resources required for accurate data conversion can be substantial, especially when dealing with large datasets or complex transformations. This can lead to a trade-off between the interpretability of the methods and the utility of the model. It’s crucial to weigh these considerations when choosing the right tool for data analysis tasks.

The efficiency of traditional approaches can decrease with the scale of data, impacting their popularity in contemporary data analysis.

Furthermore, the special missing mechanisms that are inherent in different data formats necessitate comprehensive solutions. The following table outlines some key considerations to keep in mind when converting tabular data:

| Consideration | Impact on Conversion |
|---|---|
| Data Type Limitations | Difficulties with non-numerical data |
| Computational Resources | Increased demand for large-scale data |
| Missing Mechanisms | Need for tailored solutions |

In summary, while tools like Tabula-py bridge the gap between static PDFs and dynamic DataFrames, it’s important to be aware of their limitations and the potential need for additional resources or specialized techniques.

Experimenting with Tabular Data Extraction Tools

Accuracy and Performance of Tabula-py

Tabula-py converts PDF tables into pandas DataFrames, letting users bring Python’s full data analysis toolkit to bear. Its extraction accuracy matches that of tabula-java and the tabula app’s GUI tool, so the tabula app is a convenient way to preview how well a given PDF will extract before scripting the process with tabula-py.

When using tabula-py, the process involves inputting a PDF document and outputting a CSV file where all tables are combined. However, a notable issue is the loss of column headings during conversion, which can be critical for subsequent information extraction. The read_pdf() function offers an alternative by returning a list of DataFrames, each representing a table within the document. It is observed that table headers are more accurately recognized in bordered tables.
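Because header recognition depends on the table style, one practical pattern is to run both of tabula-py's extraction modes, `lattice=True` (cells separated by ruling lines, as in bordered tables) and `stream=True` (cells separated by whitespace), and keep the result whose headers look most plausible. The scoring heuristic below, which penalizes placeholder names such as `Unnamed: 0` and number-like headings, is an assumption for illustration, not a tabula-py feature.

```python
import re

import pandas as pd


def header_score(df):
    """Fraction of column names that look like real headings: not an
    'Unnamed: N' placeholder and not a bare number."""
    names = [str(c).strip() for c in df.columns]
    if not names:
        return 0.0
    good = [
        n for n in names
        if n and not n.startswith("Unnamed:") and not re.fullmatch(r"[-+]?[\d.,]+", n)
    ]
    return len(good) / len(names)


def best_tables(pdf_path, pages="all"):
    """Run tabula-py in lattice and stream mode; keep the run with better headers."""
    import tabula  # requires tabula-py and a Java runtime

    def mean_score(tables):
        return sum(map(header_score, tables)) / len(tables) if tables else 0.0

    lattice = tabula.read_pdf(pdf_path, pages=pages, lattice=True)
    stream = tabula.read_pdf(pdf_path, pages=pages, stream=True)
    return lattice if mean_score(lattice) >= mean_score(stream) else stream
```

A heuristic like this only flags suspicious headers; it cannot recover headings that were never extracted.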

While tabula-py automates the task of data extraction, it is essential to be aware of its limitations, such as the potential loss of column headings and incomplete column extraction, especially in unbordered tables.

To improve results, it is recommended to split the work into dedicated pipelines, each optimized for accuracy on its own task (for example, separate handling for bordered and unbordered tables). This can mitigate the drawbacks above and improve the overall effectiveness of the extraction process.

Comparative Analysis of Tabula-py and Other Extraction Methods

When evaluating the performance of Tabula-py against other table extraction libraries, such as Camelot, it’s crucial to consider the entire data extraction pipeline. This includes table detection, text extraction, and the recreation of the table structure. Tabula-py, functioning as a wrapper for tabula-java, requires Java and is adept at converting PDF tables into pandas DataFrames or CSV/TSV files.

Camelot, on the other hand, is known for its precision in bordered tables but may fall short when dealing with unbordered tables. Both tools have their own set of advantages and limitations, which are highlighted in the following table:

| Feature | Tabula-py | Camelot |
|---|---|---|
| Table Detection | Good | Excellent |
| Bordered Tables | Accurate | Very Accurate |
| Unbordered Tables | Less Reliable | Problematic |
| Output Formats | DataFrame, CSV/TSV | DataFrame, CSV, JSON, Excel, HTML, Markdown, SQLite |

Tabula-py is well suited to automating extraction in Python scripts and to advanced analytics once tables are in DataFrame form. However, column headings can be lost during extraction, which makes it less suitable for some information-extraction tasks. Its read_pdf() function returns a list of DataFrames, one per recognized table, and headers are recognized more reliably in bordered tables.
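To turn a comparison like the one in the table above into numbers, each tool's output can be scored against a hand-checked ground-truth table. A minimal pandas sketch of one simple metric, cell-level accuracy with the header row included and whitespace trimmed; the function name and the choice to count missing rows or columns as errors are assumptions, and more elaborate structure-aware metrics exist.

```python
import pandas as pd


def _cells(df):
    """Header row followed by data rows, every value as a trimmed string."""
    rows = [list(df.columns)] + df.values.tolist()
    return [[str(v).strip() for v in row] for row in rows]


def cell_accuracy(extracted, truth):
    """Share of ground-truth cells (including headers) reproduced exactly.

    Cells are compared by position; rows or columns missing from the
    extraction count as errors, and extra ones are ignored.
    """
    t, e = _cells(truth), _cells(extracted)
    total = sum(len(row) for row in t)
    if total == 0:
        return 1.0
    hits = sum(
        1
        for i, row in enumerate(t)
        for j, value in enumerate(row)
        if i < len(e) and j < len(e[i]) and e[i][j] == value
    )
    return hits / total
```

Scoring the header row makes lost column headings visible in the metric: a two-column, two-row table whose headings were dropped scores 4/6 even if every data cell is correct.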

Case Studies: From PDF Documents to Structured Data

The transition from static PDF documents to structured, analyzable data sets is a critical step in data analysis. Case studies demonstrate the practical applications of tools like Tabula-py in various industries. For instance, a retail company may use these tools to extract structured sales transaction data from PDF reports, which can then be analyzed for insights into consumer behavior.

The process often involves Optical Character Recognition (OCR) and sophisticated algorithms to recreate table structures, ensuring data integrity.

The following table summarizes key aspects of a case study where tabular data was extracted from a PDF document:

| Aspect | Description |
|---|---|
| Document Type | Sales Transaction Report |
| Data Type | Structured (Product IDs, Quantities, Prices) |
| Extraction Tool | Tabula-py |
| Post-Extraction | Data Analysis for Consumer Behavior Insights |

In addition to retail, sectors such as healthcare, finance, and logistics also benefit from these advancements, leveraging the extracted data for improved decision-making and strategic planning.

The Future of Tabular Data Analysis

Innovations in Self-Supervised Labeling Techniques

The advent of self-supervised learning has revolutionized the way we approach data labeling, particularly in the realm of tabular data. By leveraging pseudo-labels generated from the data itself, this technique reduces the need for extensive manual labeling, which is both time-consuming and costly. The effectiveness of self-supervised learning hinges on the availability of large and diverse datasets, where the intrinsic structures within the data can be utilized to create meaningful representations.

The integration of self-supervised learning into tabular data analysis has the potential to transform industries that rely heavily on structured data, such as finance and healthcare. These sectors often face challenges with traditional data augmentation methods, which are not always suitable for tabular formats.

In practice, self-supervised learning can turn the privacy challenge into a data labeling opportunity. By segmenting data and introducing noise, we can augment subsets of data, enhancing the overall quality and utility for analysis. This innovative approach not only improves the labeling process but also contributes to the robustness of the models trained on such data.

The table below summarizes key aspects of self-supervised learning in tabular data:

| Aspect | Description |
|---|---|
| Data Quality | Relies on high-quality, extensive datasets with diverse features. |
| Learning Cues | Utilizes pseudo-labels and intrinsic data structures. |
| Applicability | Versatile across various domains and tasks. |
| Innovation | Segmentation and noise addition to augment data subsets. |

Our research demonstrates the practicality of self-supervised learning in tabular data contexts, showing promising results across multiple public datasets. This underscores the potential of self-supervised techniques to become a mainstay in data analysis workflows.

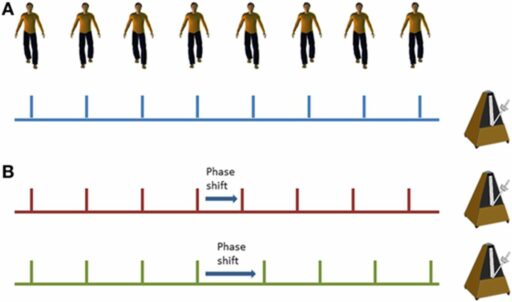

The Impact of Federated Learning Environments on Tabular Data

Federated Learning (FL) environments are revolutionizing the way we handle tabular data, particularly in scenarios where data privacy is paramount. By distributing the computation across multiple clients, FL allows for simultaneous model training while maintaining data confidentiality. This approach is particularly beneficial for organizations that need to collaborate without sharing sensitive information.
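At the heart of many FL systems is a server step that combines client model updates without ever seeing client data. A minimal NumPy sketch of federated averaging (FedAvg), the standard baseline aggregation rule, assuming each client sends a flat parameter vector together with its local sample count; it illustrates FL in general and is not a description of how TabFedSL aggregates its models.

```python
import numpy as np


def federated_average(client_params, client_sizes):
    """Weighted average of client parameter vectors (FedAvg aggregation).

    Each client's weight is its share of the total number of training samples,
    so clients with more data pull the global model further toward their update.
    """
    params = np.stack([np.asarray(p, dtype=float) for p in client_params])
    weights = np.asarray(client_sizes, dtype=float)
    weights = weights / weights.sum()
    return weights @ params  # (n_clients,) @ (n_clients, n_params)
```

Only parameter vectors and sample counts leave the clients; the raw tables stay local, which is the confidentiality property that makes FL attractive for sensitive tabular data.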

The introduction of self-supervised learning techniques within FL environments, such as the TabFedSL framework, has further enhanced the quality of tabular data analysis. These techniques enable the use of unlabeled data, which is often abundant but underutilized due to the challenges of manual labeling. The TabFedSL framework demonstrates that it is possible to achieve high-quality model training by leveraging the vast amounts of unlabeled data available across distributed networks.

The success of federated learning in tabular data analysis hinges on the ability to effectively combine self-supervised learning with secure, distributed data processing.

Experimental results have shown that frameworks like TabFedSL can achieve performance comparable to traditional methods, even on complex datasets such as MNIST, UCI Adult Income, and UCI BlogFeedback. This is a testament to the efficacy of federated learning in diverse tabular settings.

Emerging Trends and Tools in Tabular Data Analysis

As the landscape of tabular data analysis continues to evolve, new trends and tools are emerging that promise to revolutionize the way we handle and interpret structured datasets. The integration of representation learning techniques for handling missing data is one such trend that is gaining traction. Unlike traditional deletion or imputation methods, these advanced techniques offer nuanced approaches to understanding and addressing the complexities of missing patterns in tabular data.

In the realm of data augmentation, the indiscriminate addition of noise to enhance datasets is being reevaluated. Tailored noise addition strategies are being developed to optimize the efficacy of data augmentation, ensuring that the quality of tabular data is not compromised. This nuanced approach to data quality improvement is critical for maintaining the integrity of analysis.

The issue of missing patterns is pervasive and occurs across various data types. However, the focus on tabular datasets is due to their prevalence and significance in diverse fields, necessitating dedicated tools and methods for effective analysis.

Emerging tools are also addressing the challenges of estimation and measurement errors, which can significantly impact the quality of tabular data. The table below summarizes some of the key areas of focus in the development of new tabular data analysis tools:

| Focus Area | Description |
|---|---|
| Missing Data Handling | Advanced techniques for dealing with missing patterns in data. |
| Data Augmentation | Tailored noise addition strategies to maintain data quality. |
| Error Correction | Methods to address estimation and measurement errors. |

These advancements are setting the stage for more robust and sophisticated analysis, enabling researchers and analysts to extract deeper insights from tabular data with greater accuracy and efficiency.

Conclusion

Throughout this article, we have explored the multifaceted nature of tabular data and the various frameworks, tools, and methodologies designed to enhance its analysis and interpretation. From the innovative TabFedSL framework to the practical utility of Tabula-py, we have seen how these advancements address the challenges inherent in tabular data analysis, such as noise disruption, missing patterns, and the extraction of high-quality data. The insights provided underscore the importance of selecting the right tools and approaches for specific data types and analysis goals. As we continue to amass vast quantities of structured data, the ability to efficiently harness and analyze tabular data will remain a cornerstone of informed decision-making and strategic insights across diverse domains. The future of data analysis is bright, with continuous improvements and innovations paving the way for more sophisticated and accessible data handling techniques.

Frequently Asked Questions

What is tabular data and why is it important in digital analysis?

Tabular data is a structured format that organizes information into rows and columns, making it easier to understand and analyze. It’s crucial for efficient data interpretation and analysis in various fields such as finance and scientific research.

What are the challenges in transforming raw table data?

Transforming raw table data involves extracting information that can be used for analytics, which can be challenging due to the loss of column headings, complexity of missing patterns, and the need to maintain data integrity.

How can tabular data quality be improved for analysis?

Improving tabular data quality can involve techniques like data augmentation and noise addition, addressing estimation and measurement errors, and using visualization tools like column density plots to understand complex missing mechanisms.

What is Tabula-py and how does it help with PDF data extraction?

Tabula-py is a Python library that acts as a wrapper for tabula-java, allowing for the automated extraction of table data from PDFs into pandas DataFrames or CSV/TSV files, facilitating further analysis.

How does Tabula-py compare to other data extraction tools?

Tabula-py is known for its accuracy, matching that of tabula-java and the tabula app’s GUI tool. However, it’s recommended to compare its performance with other extraction methods through experimentation.

What future advancements are expected in tabular data analysis?

Future advancements may include innovations in self-supervised labeling techniques like TabFedSL, the impact of federated learning environments, and the development of new tools and trends for more efficient tabular data analysis.