In the contemporary data-driven landscape, quantitative data emerges as a cornerstone of empirical research and informed decision-making. This article, ‘Unlocking the Meaning of Quantitative Data: An Integral Definition,’ delves into the multifaceted nature of quantitative data, exploring its definition, collection methods, analysis techniques, and practical applications. It also addresses the challenges and ethical considerations inherent in quantitative research. By dissecting these elements, the article aims to provide a comprehensive understanding of quantitative data’s role in various domains.

Key Takeaways

- Quantitative data is essential for objective measurement and statistical analysis, helping to convert complex phenomena into actionable insights.

- Effective collection methods, including surveys, observational studies, and secondary data, are crucial for acquiring high-quality quantitative data.

- Analysis techniques such as descriptive and inferential statistics, as well as exploratory data analysis, enable the interpretation of data for decision-making.

- Quantitative data has significant applications in fields like financial risk management and conversion rate optimization, influencing strategy and operations.

- Data quality, ethical considerations, and sound statistical interpretation are the key challenges in quantitative research; addressing them is what makes results reliable and valid.

The Essence of Quantitative Data

Defining Quantitative Data

Quantitative data is the backbone of data-driven decision-making. It encompasses all information that can be counted or measured and expressed numerically, which makes it suitable for statistical analysis and objective measurement. Researchers use it to establish facts and uncover patterns.

Quantitative data can be classified into two main types:

- Discrete Data: Countable items, such as the number of students in a class.

- Continuous Data: Measurements that can take on any value within a range, like temperature.

Quantitative data serves as a critical tool for researchers and analysts, providing a foundation for making informed decisions and drawing reliable conclusions.

Understanding the nature of quantitative data is crucial for its effective collection, analysis, and interpretation. It is the measurable aspect of data that allows for the precise calculation of differences and trends, and the subsequent application of statistical methods to understand and utilize these findings.

Distinguishing Between Quantitative and Qualitative Data

Understanding the distinction between quantitative and qualitative data is fundamental to data analysis. Quantitative data is numerical, representing quantities and measurements that can be counted or measured. It is often presented in structured formats such as tables or charts. For example, consider the following table showing the number of students in different classes:

| Class | Number of Students |

|---|---|

| A | 23 |

| B | 30 |

| C | 28 |

In contrast, qualitative data is descriptive and subjective, capturing the qualities and characteristics that are not easily reduced to numbers. It includes information such as opinions, behaviors, and experiences. This type of data is typically collected through interviews, observations, or open-ended survey questions.

The ability to differentiate these data types is crucial for data scientists, who work with large volumes of data to extract insights that inform investors and other decision-makers. The type of data determines which methods and algorithms are appropriate for analyzing it.

While quantitative data can be easily standardized and compared, qualitative data provides context and depth to the numerical insights. Both types of data complement each other and are often used together to provide a comprehensive understanding of a research question or business problem.

Common Types and Examples of Quantitative Data

Quantitative data provides the numerical foundation for analysis. It includes objective, observable information stated in specified units, such as height, temperature, income, sales figures, population size, and test scores. As defined earlier, it falls into two main types:

- Discrete Data: Countable items, like the number of students in a class.

- Continuous Data: Measurements that can take on any value within a range, like temperature.

Here’s a succinct table illustrating examples of each type:

| Data Type | Examples |

|---|---|

| Discrete Data | Number of employees, test scores |

| Continuous Data | Height in centimeters, annual rainfall in millimeters |

Quantitative data is not just about numbers; it’s about the story those numbers tell and the insights they reveal.

Understanding these types can help in selecting the appropriate methods for data collection and analysis, ensuring that the data serves its intended purpose effectively.

Methods of Quantitative Data Collection

Surveys and Questionnaires

Surveys and questionnaires are foundational tools in the arsenal of quantitative data collection. They are designed to extract quantifiable data directly from subjects, providing a structured approach to gathering insights. The process typically involves a series of questions that participants answer, which can then be analyzed for patterns and trends.

For instance, a local business owner aiming to enhance their store’s layout and product offerings might deploy a survey to understand customer shopping behaviors and preferences. The survey could include questions on product variety, store layout, pricing, and the overall shopping experience. This method allows for a systematic collection of data that can be crucial for making informed decisions.

The objective of using surveys and questionnaires is to capture data that is both reliable and relevant to the research goals.

The table below illustrates a simplified example of how survey data might be structured:

| Question | Response Option 1 | Response Option 2 | Response Option 3 |

|---|---|---|---|

| Product Variety Satisfaction | Very Satisfied | Satisfied | Not Satisfied |

| Store Layout Appropriateness | Excellent | Good | Needs Improvement |

| Pricing Fairness | Fair | Somewhat Fair | Unfair |

By analyzing the responses, for example by counting how many respondents chose each option, a business owner can identify areas of improvement and tailor their strategies to better meet customer needs.
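To analyze responses like these numerically, the ordered answer options are usually coded as numbers first. The sketch below assumes a hypothetical 3-to-1 coding for the product variety question and a made-up list of six responses; neither comes from a real survey.

```python
from collections import Counter
from statistics import median

# Hypothetical coding for the "Product Variety Satisfaction" question.
SCALE = {"Very Satisfied": 3, "Satisfied": 2, "Not Satisfied": 1}

# Made-up responses, for illustration only.
responses = [
    "Satisfied", "Very Satisfied", "Not Satisfied",
    "Satisfied", "Very Satisfied", "Satisfied",
]

# Frequency of each answer option and its share of all responses.
counts = Counter(responses)
shares = {option: counts[option] / len(responses) for option in SCALE}

# Coded scores; the median is a safer summary than the mean for ordered categories.
scores = [SCALE[r] for r in responses]
median_score = median(scores)

for option in SCALE:
    print(f"{option}: {counts[option]} ({shares[option]:.0%})")
print("Median score:", median_score)
```

Counts and shares are always valid for this kind of data. Averages of the coded scores depend on the assumption that the gaps between options are equal, so they should be read with care.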

Observational and Experimental Data

Observational and experimental methods are pivotal in the realm of quantitative data collection. Observational data is gathered without interference, where researchers record behaviors or occurrences as they naturally happen. This method is often used in descriptive research, which aims to accurately describe the current state of a variable or phenomenon.

In contrast, experimental data is obtained through the deliberate manipulation of variables to observe the effects on certain outcomes. This approach is central to analytical research, which seeks to understand causal relationships and underlying patterns.

Both methods have distinct advantages and limitations:

| Method | Advantage | Limitation |

|---|---|---|

| Observational | Real-world relevance | Lack of control |

| Experimental | Causal insights | Artificial setting |

The choice between observational and experimental methods hinges on the research objectives and the nature of the question at hand.

Utilizing Secondary Data Sources

Utilizing secondary data sources is a cost-effective and time-efficient approach to quantitative research. Secondary data refers to information that has been collected by someone else for a different purpose, but can be repurposed for new research questions. This can include data from websites, databases, and official records.

When opting for secondary data, researchers must develop a strategic approach to database search, ensuring the data’s relevance and accuracy. The following table illustrates a simplified database search strategy:

| Step | Action |

|---|---|

| 1 | Identify relevant databases and websites |

| 2 | Define search terms and parameters |

| 3 | Review data availability and access rights |

| 4 | Evaluate the data’s suitability for research objectives |

Secondary data analysis can reveal patterns and insights that may not be apparent from primary data alone. It allows researchers to build upon existing knowledge and explore new dimensions of a topic without the need for extensive data collection efforts.

However, it is crucial to consider the original context in which the data was collected. Researchers must assess the quality, reliability, and applicability of the data to their specific research question. This often involves a critical review of the methodology used in the original data collection.

Quantitative Data Analysis Techniques

Descriptive Statistics: Mean, Median, Mode, and Standard Deviation

Descriptive statistics serve as the cornerstone of quantitative analysis by summarizing data through key figures. The mean, median, mode, and standard deviation are fundamental metrics that provide insights into the central tendencies and variability of a dataset.

- Mean: Defined as the arithmetic average of all observations in the data set.

- Median: Defined as the middle value in the data set arranged in ascending order; with an even number of observations, it is the average of the two middle values.

- Mode: The most frequently occurring value(s) in the dataset.

- Standard Deviation: A measure of the dispersion or spread of the data points around the mean.

These statistics are essential for understanding the overall shape of the data distribution and are often the first step in data analysis. Tools like Microsoft Excel can facilitate these calculations, allowing for a quick interpretation of the data’s condition or trend.
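The same four measures can also be computed in a few lines of code. The sketch below uses Python's standard `statistics` module on a made-up list of test scores; the data is an assumption for illustration only.

```python
import statistics

# Hypothetical test scores, for illustration only.
scores = [70, 85, 85, 90, 100]

mean = statistics.mean(scores)             # arithmetic average
median = statistics.median(scores)         # middle value of the sorted data
modes = statistics.multimode(scores)       # most frequent value(s), as a list
sample_sd = statistics.stdev(scores)       # sample standard deviation (n - 1)
population_sd = statistics.pstdev(scores)  # population standard deviation (n)

print(f"mean={mean}, median={median}, modes={modes}")
print(f"sample sd={sample_sd:.2f}, population sd={population_sd:.2f}")
```

Note the two standard deviations: `stdev` treats the data as a sample drawn from a larger population, while `pstdev` treats it as the whole population. Which one is appropriate depends on how the data was collected.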

Descriptive statistics not only summarize the data but also lay the groundwork for further analysis, such as inferential statistics, which may reveal deeper insights or causal relationships.

Inferential Statistics: Hypothesis Testing and Regression Analysis

Inferential statistics empower researchers to make predictions and draw conclusions about a population based on sample data. Hypothesis testing is a systematic method used to determine whether there is enough evidence in a sample of data to infer that a certain condition is true for the entire population. Regression analysis, on the other hand, helps in understanding the relationship between dependent and independent variables.

The true power of regression analysis lies in its ability to quantify the strength of relationships and predict outcomes, making it an indispensable tool in many fields.

For instance, in a marketing study aiming to understand the impact of advertising on sales, the following table might be used to present initial data:

| Week | Ad Spend (USD) | Sales (USD) |

|---|---|---|

| 1 | 10,000 | 50,000 |

| 2 | 15,000 | 65,000 |

| 3 | 20,000 | 80,000 |

| 4 | 25,000 | 100,000 |

After collecting and tabulating the data, statistical tests can be applied to determine if the increase in ad spend significantly correlates with sales figures. The steps involved in this process typically include:

- Formulating a hypothesis, such as "Increased ad spend will lead to higher sales."

- Collecting and analyzing sample data.

- Performing regression analysis to examine the relationship between ad spend and sales.

- Interpreting the results to draw conclusions or make predictions.

Challenges arise when attempting to ensure that the sample data accurately represents the population, and when selecting the appropriate statistical methods to analyze the data. These steps are critical in avoiding erroneous conclusions that could misguide decision-making processes.
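As a rough illustration of the regression step, the sketch below fits a straight line to the four weeks of ad spend and sales from the table using ordinary least squares. The helper name `least_squares` is hypothetical, and four observations are far too few for a real inference; the example only shows the mechanics.

```python
import math

# Data from the table above (USD).
ad_spend = [10_000, 15_000, 20_000, 25_000]
sales = [50_000, 65_000, 80_000, 100_000]

def least_squares(x, y):
    """Return slope, intercept and Pearson correlation for a simple linear fit."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r

slope, intercept, r = least_squares(ad_spend, sales)
print(f"sales = {intercept:.0f} + {slope:.2f} * ad_spend (r = {r:.3f})")
```

On this data the fitted slope is 3.3, meaning each additional dollar of ad spend is associated with about 3.30 USD of additional sales. A strong correlation in so small a sample does not by itself show that the spending caused the sales.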

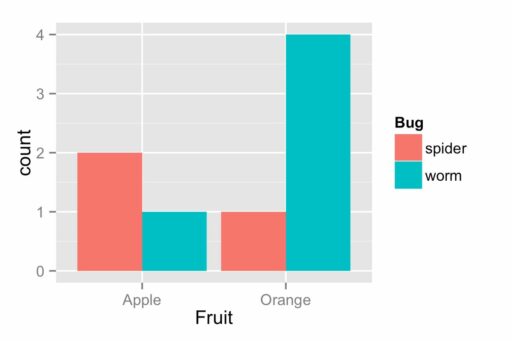

Exploratory Data Analysis: Uncovering Patterns and Insights

Exploratory Data Analysis (EDA) is a critical phase in the data analysis process, where the primary goal is to uncover patterns, anomalies, and insights within large sets of data. This process is less about confirming hypotheses and more about modeling the data in various ways to discover what it can tell us.

EDA is an iterative cycle of hypothesis generation, data exploration, and discovery that provides a deeper understanding of data’s underlying structures and variables.

The steps involved in EDA often include:

- Visualizing the data to identify trends and relationships.

- Segmenting data based on criteria like customer segments or geography.

- Applying statistical models to discern patterns and outliers.

- Communicating findings through effective data representation.

For instance, a business might analyze revenue and cost data to identify growth trends across different customer personas. A simplified example of such an analysis could be presented in a table:

| Customer Persona | Q1 Revenue | Q2 Revenue | Growth Rate |

|---|---|---|---|

| Persona A | $120,000 | $150,000 | 25% |

| Persona B | $85,000 | $95,000 | 11.8% |

By exploring these quantitative insights, organizations can make data-driven decisions that are critical for strategic planning and operational efficiency.
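The growth rates in the table follow from the quarterly revenue figures: growth is the change in revenue divided by the earlier quarter's revenue. A minimal sketch, with a hypothetical helper named `growth_rate`:

```python
# Revenue figures from the table above (USD): (Q1, Q2).
quarterly_revenue = {
    "Persona A": (120_000, 150_000),
    "Persona B": (85_000, 95_000),
}

def growth_rate(previous, current):
    """Relative change from the previous period to the current one."""
    return (current - previous) / previous

growth = {
    persona: growth_rate(q1, q2)
    for persona, (q1, q2) in quarterly_revenue.items()
}

for persona, rate in growth.items():
    print(f"{persona}: {rate:.1%}")
# Persona A: 25.0%
# Persona B: 11.8%
```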

Applications of Quantitative Data in Decision Making

Role of Quantitative Data in Financial Risk Management

In the realm of financial risk management, quantitative data serves as the backbone for making informed decisions. Quantitative Analysts, or Data Modelers, harness this data to predict market trends, identify potential investments, and evaluate risks. Their expertise in statistical and mathematical modeling is crucial for developing strategies that can withstand market volatilities.

The precision of quantitative analysis allows for the identification of risk factors and the assessment of their potential impact on investment portfolios.

Financial institutions rely on various quantitative measures to gauge risk. Below is a table illustrating some key risk metrics:

| Risk Metric | Description |

|---|---|

| VaR (Value at Risk) | Estimates the loss that should not be exceeded over a specific time period at a given confidence level |

| Expected Shortfall | Measures the average loss in the cases where the loss exceeds the VaR threshold |

| Beta | Indicates how sensitive an investment’s returns are to movements in the overall market |

Understanding these metrics and their interplay is essential for risk managers to protect assets and optimize returns. The integration of quantitative data into risk management frameworks not only mitigates potential losses but also uncovers opportunities for growth.
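The three metrics in the table can be estimated from a history of returns. The sketch below is one simple way to do so, not a production method: the return series is made up, the 90% confidence level is arbitrary, and taking the k-th worst observed loss as VaR is only one of several conventions. All function names are hypothetical.

```python
# Made-up daily returns for a market index, for illustration only.
market_returns = [
    0.012, -0.008, 0.005, -0.021, 0.003, 0.009, -0.015, 0.007, -0.002, 0.011,
    -0.034, 0.004, 0.006, -0.010, 0.002, 0.013, -0.006, 0.001, -0.027, 0.008,
]

def worst_losses(returns, confidence):
    """Return the worst (1 - confidence) share of losses, largest first."""
    losses = sorted((-r for r in returns), reverse=True)
    k = max(1, round((1 - confidence) * len(losses)))
    return losses[:k]

def value_at_risk(returns, confidence=0.90):
    """Historical VaR: the smallest loss within the worst tail."""
    return worst_losses(returns, confidence)[-1]

def expected_shortfall(returns, confidence=0.90):
    """Average loss across the worst tail, at or beyond the VaR level."""
    tail = worst_losses(returns, confidence)
    return sum(tail) / len(tail)

def beta(asset_returns, market):
    """Sample covariance of asset and market divided by market variance."""
    n = len(market)
    mean_a = sum(asset_returns) / n
    mean_m = sum(market) / n
    cov = sum((a - mean_a) * (m - mean_m)
              for a, m in zip(asset_returns, market)) / (n - 1)
    var_m = sum((m - mean_m) ** 2 for m in market) / (n - 1)
    return cov / var_m

# Hypothetical asset that moves exactly twice as much as the market.
asset_returns = [2 * m for m in market_returns]

var_90 = value_at_risk(market_returns)
es_90 = expected_shortfall(market_returns)
asset_beta = beta(asset_returns, market_returns)
print(f"VaR 90%: {var_90:.3f}, ES 90%: {es_90:.4f}, beta: {asset_beta:.2f}")
```

Expected shortfall is never smaller than VaR at the same confidence level, because it averages the losses at or beyond the VaR threshold.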

Improving Conversion Rate Optimization (CRO) with Quantitative Insights

Quantitative data serves as a compass in the realm of Conversion Rate Optimization (CRO), providing clear direction for enhancing user experience and increasing conversions. Metrics such as page views, bounce rates, and average session durations are not just numbers; they are indicators of user engagement and areas for improvement.

By analyzing these metrics, businesses can pinpoint where users disengage and test changes to overcome these hurdles. For instance, a high bounce rate might suggest the need for more captivating content or a more intuitive navigation structure.

Leveraging both quantitative and qualitative data can transform the CRO process from guesswork to a strategic, data-driven approach.

A/B testing is a quintessential tool in this process, allowing for empirical comparisons between different website versions. Here’s a simple table illustrating a hypothetical A/B test result:

| Version | Conversion Rate | Bounce Rate |

|---|---|---|

| A | 2.5% | 40% |

| B | 3.0% | 35% |

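In this hypothetical result, version B converts at 3.0% against 2.5% for version A, a relative lift of 20%, and has a lower bounce rate. Whether such a difference is statistically significant depends on how many visitors saw each version, so it should be confirmed with a significance test before acting on it. By continuously monitoring and analyzing quantitative data, businesses can make informed decisions that lead to better CRO outcomes.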
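One common way to check an A/B result is a two-proportion z-test. The table gives only rates, so the visitor counts below are assumptions chosen to reproduce the 2.5% and 3.0% conversion rates; the function name is hypothetical.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Assumed sample sizes: 10,000 visitors per version.
z_large, p_large = two_proportion_z_test(250, 10_000, 300, 10_000)

# Same rates with only 1,000 visitors per version.
z_small, p_small = two_proportion_z_test(25, 1_000, 30, 1_000)

print(f"10,000 per version: z = {z_large:.2f}, p = {p_large:.3f}")
print(f" 1,000 per version: z = {z_small:.2f}, p = {p_small:.3f}")
```

With 10,000 visitors per version the difference passes the conventional 5% significance threshold; with 1,000 per version the same rates do not. The conversion rates alone are therefore not enough to judge the result.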

Descriptive Research and Its Impact on Business Strategies

Descriptive research plays a pivotal role in the realm of business strategies by providing a comprehensive snapshot of current market conditions and consumer behaviors. It offers a foundation for businesses to make data-driven decisions that can lead to enhanced customer experiences and optimized product offerings.

For instance, consider the impact of descriptive research on a local business owner’s strategy:

- Objective: To describe the shopping behaviors and preferences of customers.

- Data Collection: Utilizing surveys, interviews, and observational studies.

- Outcome: Informed decisions on store layout, product stocking, and customer experience improvements.

Descriptive research is not about predicting the future; it’s about understanding the present to make better decisions for tomorrow.

By focusing on the ‘here and now,’ businesses can leverage descriptive research to see how consumer preferences are distributed across the available options, which in turn informs strategic planning. This approach is distinct from inferential research, which generalizes from a sample to a wider population or tests hypotheses, but may not provide the granular detail necessary for immediate action.

Challenges and Ethical Considerations in Quantitative Research

Addressing Data Quality and Reliability Issues

Ensuring the integrity of analysis is crucial, as it is highly dependent on the quality of the data collected. Poor quality data can lead to inaccurate and unreliable results, which is why data cleaning is an essential step in the quantitative research process. This involves addressing key elements of quality such as accuracy, consistency, and completeness.

To systematically improve data quality, analysts often follow a set of steps:

- Identification of data quality issues

- Data validation and verification

- Data cleansing and transformation

- Continuous data quality assessment

By proactively addressing data quality and reliability issues, researchers can significantly enhance the validity of their findings and the credibility of their analysis.

It is important to recognize that each method of data collection and analysis has its own set of strengths and weaknesses. Analysts must carefully consider these when designing their approach to ensure that the data is not only clean but also relevant and applicable to the research objectives.

Ethical Implications of Data Collection and Usage

The ethical landscape of quantitative research is complex, with data collection and usage at its core. Ensuring the ethical integrity of data practices is paramount for researchers and organizations alike. Ethical considerations must be woven into the fabric of the research process, from the initial design to the dissemination of findings.

- Evaluate training datasets for representativeness to avoid bias.

- Implement regular auditing procedures for continuous bias assessment.

- Navigate complex data privacy regulations to protect participant information.

- Maintain data quality and integrity for reliable analysis.

The responsibility of handling data ethically extends beyond compliance with regulations; it encompasses a commitment to fairness, privacy, and security throughout the research lifecycle.

Addressing these ethical implications is not just about adhering to legal standards; it’s about fostering trust and credibility in research. As data becomes increasingly integral to decision-making, the ethical use of data stands as a pillar of responsible research and analysis.

Overcoming Misinterpretation and Misuse of Statistical Data

The integrity of quantitative research hinges on the accurate interpretation and ethical use of statistical data. Statistical negligence can lead to serious consequences, such as flawed decision-making and loss of credibility. To combat this, it is crucial to adhere to stringent reporting standards and transparent methodologies. Much of this starts with disciplined data cleaning, which involves:

- Identifying inaccuracies or errors in the data.

- Rectifying issues such as missing values, duplicate records, or incorrect entries.

- Standardizing data formats to ensure consistency across datasets.

Data cleaning is not only a preparatory step but a continuous necessity throughout the data analysis process. It lays the foundation for reliable analytics and trustworthy conclusions.
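The cleaning tasks listed above can be sketched in plain Python. The records, field names, and the two accepted date formats are all assumptions for illustration; in particular, slash-separated dates are assumed to be day/month/year, and `id` is assumed to identify a record uniquely.

```python
from datetime import datetime

# Made-up raw records with typical quality problems.
raw_records = [
    {"id": 1, "date": "2024-01-05", "amount": "120.50"},
    {"id": 2, "date": "05/01/2024", "amount": "80"},
    {"id": 2, "date": "05/01/2024", "amount": "80"},   # duplicate record
    {"id": 3, "date": "2024-01-07", "amount": ""},     # missing value
    {"id": 4, "date": "2024-01-08", "amount": "abc"},  # incorrect entry
]

# Assumed input formats: ISO dates and day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")

def parse_date(text):
    """Standardize a date string to ISO format, or return None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None

def clean(records):
    """Drop duplicates by id, standardize fields, and set aside invalid rows."""
    seen, cleaned, rejected = set(), [], []
    for rec in records:
        if rec["id"] in seen:
            continue  # keep only the first occurrence of each id
        seen.add(rec["id"])
        date = parse_date(rec["date"])
        try:
            amount = float(rec["amount"])
        except ValueError:
            amount = None
        if date is None or amount is None:
            rejected.append(rec)  # kept for review rather than silently fixed
        else:
            cleaned.append({"id": rec["id"], "date": date, "amount": amount})
    return cleaned, rejected

cleaned, rejected = clean(raw_records)
print(len(cleaned), "clean records,", len(rejected), "set aside for review")
```

Setting invalid rows aside, rather than guessing replacement values, keeps the cleaning step transparent and lets an analyst decide how each problem should be resolved.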

Ensuring data quality and integrity is foundational for reliable analysis. This requires a meticulous approach to data management and a commitment to ongoing education in the field.

By fostering a culture of accuracy and accountability, organizations can mitigate the risks associated with misinterpretation and misuse of statistical data. This involves not only technical proficiency but also ethical vigilance and clear communication of findings.

Conclusion

In the realm of data analysis, quantitative data serves as the backbone of objective decision-making and insightful conclusions. Throughout this article, we have explored the multifaceted nature of quantitative data, from its definition and types to the methodologies of collection and analysis. We’ve seen how quantitative analysts harness this data in finance, how it informs conversion rate optimization (CRO) in digital marketing, and the crucial role it plays in research to establish patterns and draw statistically sound inferences. The tools and examples provided underscore the importance of a robust quantitative standard, ensuring that the data’s story is not just heard but understood. As we continue to navigate an increasingly data-driven world, the ability to unlock the meaning of quantitative data remains an integral skill for professionals across various industries, ultimately empowering them to translate numbers into actionable knowledge.

Frequently Asked Questions

What is quantitative data and how is it different from qualitative data?

Quantitative data refers to numerical information that can be measured and quantified. It includes values such as time, distance, and speed. Unlike qualitative data, which is descriptive and subjective, quantitative data is objective and can be used to establish patterns and draw statistically valid conclusions.

What are some common types of quantitative data?

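Common types of quantitative data include continuous data, like temperature or height, and discrete data, such as the number of students in a class. Categorical data is sometimes stored as numbers (for example, 1 for male and 2 for female), but those codes are labels rather than quantities, so it is not quantitative in itself, even though the number of observations in each category can be counted and analyzed.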

How is quantitative data collected?

Quantitative data can be collected through various methods such as surveys and questionnaires, observational studies, experiments, and utilizing secondary data sources like databases and previously conducted studies.

What are the main quantitative data analysis techniques?

Key quantitative data analysis techniques include descriptive statistics (mean, median, mode, standard deviation), inferential statistics (hypothesis testing, regression analysis), and exploratory data analysis, which helps uncover patterns and insights within the data.

How does quantitative data assist in decision making?

Quantitative data aids in decision making by providing empirical evidence that can be used to assess financial risks, optimize conversion rates, and develop strategic business plans. It enables decision-makers to rely on statistical analysis rather than intuition.

What are some challenges and ethical considerations in quantitative research?

Challenges in quantitative research include ensuring data quality and reliability, as well as addressing the potential for misinterpretation or misuse of statistical data. Ethical considerations involve the responsible collection, storage, and use of data, as well as respecting the privacy and confidentiality of research participants.