
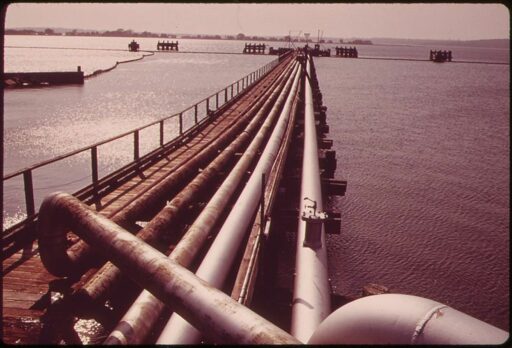

As the digital age progresses, efficient data management and robust data pipelines become increasingly vital. Data pipelines serve as the lifeline of modern enterprises, enabling the seamless flow and transformation of data from its source to the point of use. With the right tools and practices, organizations can ensure data integrity, enhance productivity, and gain strategic insights. This article explores the future of data management, focusing on how to build efficient data pipelines that are scalable, reliable, and integrated with advanced analytics platforms.

Key Takeaways

- Selecting the right data pipeline tools is crucial for optimizing workflows and supporting data-driven innovation within organizations.

- Best practices in data management emphasize the importance of data governance, security, and real-time processing for actionable insights.

- Cloud-based data pipelines are gaining popularity due to their scalability, flexibility, and alignment with the shift towards cloud computing.

- Reliability in data pipelines is achieved through high scalability, fault tolerance, and proactive operational monitoring.

- Integration with advanced analytics and data platforms enhances data visibility and enables organizations to derive deeper insights from their data.

Selecting the Right Data Pipeline Tools

Key Features for Optimizing Workflows

In the realm of data management, the efficiency of data pipelines is paramount. Selecting the right tools is crucial for optimizing workflows and enhancing productivity. Data professionals must prioritize features that provide deep visibility into the pipeline’s performance and health. This includes monitoring tools and dashboards for tracking key metrics, detecting issues, and fine-tuning performance.
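As a minimal sketch of this kind of visibility, the Python snippet below records per-stage counters (records in and out, errors, elapsed time) with a context manager. `StageMetrics`, `track`, and `parse_amounts` are hypothetical names; a production pipeline would typically export these numbers to a metrics system and dashboard rather than keep them in an in-process dictionary.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass
class StageMetrics:
    """Counters for one pipeline stage (hypothetical structure)."""
    records_in: int = 0
    records_out: int = 0
    errors: int = 0
    seconds: float = 0.0

    @property
    def error_rate(self) -> float:
        # Share of input records that failed; 0.0 when nothing was processed.
        return self.errors / self.records_in if self.records_in else 0.0


metrics: dict[str, StageMetrics] = {}


@contextmanager
def track(stage: str):
    """Time a stage and hand its counters to the caller."""
    m = metrics.setdefault(stage, StageMetrics())
    start = time.perf_counter()
    try:
        yield m
    finally:
        m.seconds += time.perf_counter() - start


def parse_amounts(rows: list[str]) -> list[float]:
    parsed = []
    with track("parse") as m:
        for row in rows:
            m.records_in += 1
            try:
                parsed.append(float(row))
                m.records_out += 1
            except ValueError:
                m.errors += 1  # count bad rows instead of crashing the stage
    return parsed


print(parse_amounts(["1.5", "2", "oops", "4.25"]))  # [1.5, 2.0, 4.25]
print(metrics["parse"].error_rate)                  # 0.25
```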

Efficient workflow orchestration is another cornerstone of effective data pipelines. Tools that facilitate scheduling, resource allocation, and systematic management of data jobs are essential. They ensure that complex workflows are executed with precision and reliability, which is vital for maintaining the integrity of data processes.
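Most orchestrators model a workflow as a directed acyclic graph (DAG) of jobs. A minimal sketch using Python's standard `graphlib` module, with a hypothetical five-job graph, shows how dependencies determine which jobs may run together:

```python
from graphlib import TopologicalSorter

# Hypothetical job graph: each job maps to the set of jobs it depends on.
jobs = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join": {"clean_orders", "extract_customers"},
    "load_warehouse": {"join"},
}


def run_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group jobs into waves; jobs in one wave do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        waves.append(ready)
        # A real orchestrator would dispatch these to workers and
        # call done() for each job only after it succeeds.
        sorter.done(*ready)
    return waves


for i, wave in enumerate(run_waves(jobs)):
    print(i, wave)
# 0 ['extract_customers', 'extract_orders']
# 1 ['clean_orders']
# 2 ['join']
# 3 ['load_warehouse']
```

Jobs inside one wave are what an orchestrator can run in parallel; the scheduling, resource allocation, and retries of a real tool are omitted here.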

The integration of robust automation features and comprehensive monitoring capabilities is indispensable. These elements streamline the development and operation of data pipelines, allowing for real-time anomaly detection and issue resolution.
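One simple form of automated anomaly detection compares each run's row count with recent history. This sketch assumes a z-score rule with a threshold of 3 standard deviations; the rule and the sample counts are illustrative, and real tools often use seasonal or learned baselines instead.

```python
from statistics import mean, stdev


def is_anomalous(history: list[int], latest: int, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` std devs from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold


daily_row_counts = [10_120, 9_980, 10_050, 10_200, 9_890, 10_010]
print(is_anomalous(daily_row_counts, 10_100))  # False
print(is_anomalous(daily_row_counts, 2_300))   # True
```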

Ultimately, the toolset chosen should align with the organization’s strategic data initiatives, ensuring that the data estate is managed with the utmost efficiency and accuracy.

The Role of Native Data Integration Capabilities

Native data integration capabilities are essential for efficient database management and can significantly streamline the process of data ingestion and maintenance. With tools like Data Pipelines, organizations can leverage a drag-and-drop interface that reduces the need for extensive coding, making the integration process more accessible to a wider range of users.

The versatility of these tools is evident in their ability to connect to a multitude of data sources and destinations, such as databases, cloud storage, and message queues. This adaptability is crucial for addressing various data integration scenarios.
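Tools typically achieve this versatility by hiding each system behind a common connector interface. The sketch below assumes a minimal read/write contract; `Source`, `Sink`, `ListSource`, `ListSink`, and `transfer` are hypothetical stand-ins, not any product's API, so the same `transfer` function works for any pairing of source and destination.

```python
from typing import Iterable, Iterator, Protocol

Record = dict[str, object]


class Source(Protocol):
    def read(self) -> Iterator[Record]: ...


class Sink(Protocol):
    def write(self, records: Iterable[Record]) -> int: ...


class ListSource:
    """In-memory stand-in for a database, bucket, or queue reader."""
    def __init__(self, rows: list[Record]):
        self.rows = rows

    def read(self) -> Iterator[Record]:
        yield from self.rows


class ListSink:
    """In-memory stand-in for a warehouse or queue writer."""
    def __init__(self):
        self.rows: list[Record] = []

    def write(self, records: Iterable[Record]) -> int:
        before = len(self.rows)
        self.rows.extend(records)
        return len(self.rows) - before  # number of records written


def transfer(source: Source, sink: Sink) -> int:
    """Move every record from any source to any sink."""
    return sink.write(source.read())


sink = ListSink()
print(transfer(ListSource([{"id": 1}, {"id": 2}]), sink))  # 2
```

Adding a new system then means writing one new class that satisfies the protocol, without touching the pipeline logic.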

When selecting a data pipeline tool, it’s important to consider its compatibility with existing infrastructure, support for diverse data formats, and the choice between cloud-based or on-premises solutions.

Understanding the role of data integration tools in data engineering is also vital. They are the backbone of ETL (extract, transform, load) processes, ensuring that data from disparate sources can be unified for analysis and business intelligence. Knowledge of cloud computing platforms further enhances a data engineer’s ability to manage and integrate data effectively.
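To make the ETL idea concrete, here is a small sketch that extracts customer records from two hypothetical sources with different field layouts, normalizes them into one shape, and loads them into an in-memory SQLite table. The source data and table schema are invented for illustration.

```python
import sqlite3

# Two hypothetical sources describing the same entity in different shapes.
crm_rows = [{"CustomerID": "7", "Email": "ANA@EXAMPLE.COM "}]
shop_rows = [("8", "ben@example.com")]


def extract():
    # Map each source onto one common shape: (customer_id, email, source).
    for r in crm_rows:
        yield int(r["CustomerID"]), r["Email"], "crm"
    for cid, email in shop_rows:
        yield int(cid), email, "shop"


def transform(rows):
    # Normalize e-mail addresses so records from both systems compare equal.
    for cid, email, src in rows:
        yield cid, email.strip().lower(), src


def load(rows, conn):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, email TEXT, source TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract()), conn)
print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
# [(7, 'ana@example.com', 'crm'), (8, 'ben@example.com', 'shop')]
```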

Automation and Orchestration in Data Pipelines

The integration of automation and orchestration tools within data pipelines is a transformative shift, enhancing not only the efficiency but also the reliability of data workflows. By automating repetitive tasks and orchestrating complex workflows, these tools significantly reduce manual intervention and the potential for human error.

Automation features such as workflow orchestration, scheduling, and error handling are essential for a streamlined pipeline operation. They ensure that data is processed accurately and on time, which is critical for maintaining data integrity and making informed business decisions.
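Error handling for transient failures is usually built around retries with exponential backoff. A minimal sketch, assuming that `ConnectionError` and `TimeoutError` mark retryable failures and that any other exception should fail fast; `with_retries` and `flaky_extract` are hypothetical names:

```python
import random
import time


def with_retries(task, attempts: int = 4, base_delay: float = 0.5,
                 retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Run `task`, retrying transient failures with exponential backoff plus jitter."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except retry_on:
            if attempt == attempts:
                raise  # give up and let the orchestrator mark the job as failed
            delay = base_delay * 2 ** (attempt - 1)  # 0.5 s, 1 s, 2 s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter avoids synchronized retries


calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return ["row-1", "row-2"]


print(with_retries(flaky_extract, sleep=lambda s: None))  # ['row-1', 'row-2']
```

Passing `sleep` as a parameter keeps the function testable, and the random jitter spreads out retries so that many failed tasks do not hit a recovering source at the same moment.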

Monitoring and alerting capabilities are equally important. They provide visibility into the pipeline’s performance and enable prompt issue resolution. Here’s a list of key benefits:

- Streamlined development and deployment

- Reduced manual efforts

- Enhanced efficiency and reliability

- Real-time anomaly detection and troubleshooting

Selecting the right toolset that offers these capabilities is crucial for building efficient data pipelines that can adapt to the evolving demands of Big Data and machine learning applications.

Best Practices for Enterprise Data Management

Data Governance and Security

In the digital age, data governance and security are paramount for maintaining the trust of stakeholders and ensuring compliance with evolving regulations. Enhanced privacy measures are a cornerstone of robust data governance frameworks, which include defining policies and controls around data access and protection. These frameworks are essential for upholding the confidentiality, integrity, and availability of data, thereby mitigating privacy risks.

Organizations are increasingly investing in data governance to comply with stringent global regulations such as GDPR and CCPA. Key areas of focus include data lineage, cataloging, and quality management, which are crucial for maintaining data integrity and providing access to reliable data.

The emphasis on data governance and security is not just about compliance; it’s about fostering transparency and accountability in data handling practices.

Here are some of the core practices in data governance that help enhance privacy and security:

- Data classification

- Encryption

- Anonymization
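The sketch below illustrates classification driving protection: columns labelled as personal data are replaced with keyed SHA-256 (HMAC) tokens. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can re-link tokens to known values; encryption at rest or in transit would rely on a vetted library or the platform itself and is not shown. The column labels, key handling, and function names are assumptions for illustration.

```python
import hashlib
import hmac

# Hypothetical classification of columns in one dataset.
CLASSIFICATION = {"email": "pii", "phone": "pii", "country": "internal", "amount": "internal"}

# Assumption: in practice this key comes from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-managed-key"


def pseudonymize(value: str) -> str:
    """Keyed SHA-256: equal inputs give equal tokens, so joins still work."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def protect(record: dict) -> dict:
    out = {}
    for column, value in record.items():
        label = CLASSIFICATION.get(column, "pii")  # unknown columns are treated as sensitive
        out[column] = pseudonymize(str(value)) if label == "pii" else value
    return out


row = {"email": "ana@example.com", "country": "PT", "amount": 42.0}
print(protect(row))
```

Defaulting unclassified columns to "pii" is a conservative choice: a new column stays protected until someone explicitly classifies it.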

By implementing these practices, organizations can minimize the risk of data breaches and unauthorized disclosures, enhancing privacy protection and maintaining trust.

Efficiency and Accuracy with Augmented Data Management

Augmented Data Management (ADM) is revolutionizing the way enterprises handle their data, emphasizing both efficiency and accuracy. Automated data pipelines and quality checks are at the forefront of this transformation, ensuring that data is not only processed quickly but also maintains high integrity. Data lineage tracing further contributes by providing clear visibility into the data’s journey, which is essential for troubleshooting and compliance.
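Automated quality checks can start as a list of named row-level rules whose failures are counted per batch. The rules below are hypothetical examples for an orders table; dedicated data quality tools build profiling, scheduling, and reporting on top of the same basic idea.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    name: str
    predicate: Callable[[dict], bool]  # returns True when a row passes


# Hypothetical rules for an "orders" table.
CHECKS = [
    Check("order_id present", lambda r: r.get("order_id") is not None),
    Check("amount non-negative",
          lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    Check("currency is ISO-like",
          lambda r: isinstance(r.get("currency"), str) and len(r["currency"]) == 3),
]


def validate(rows: list[dict]) -> dict[str, int]:
    """Count failures per check so a pipeline can quarantine or stop a bad batch."""
    failures = {c.name: 0 for c in CHECKS}
    for row in rows:
        for c in CHECKS:
            if not c.predicate(row):
                failures[c.name] += 1
    return failures


batch = [
    {"order_id": 1, "amount": 20.0, "currency": "EUR"},
    {"order_id": None, "amount": -5, "currency": "EUR"},
    {"order_id": 3, "amount": 12.5, "currency": "euro"},
]
print(validate(batch))
# {'order_id present': 1, 'amount non-negative': 1, 'currency is ISO-like': 1}
```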

- Automated tiering optimizes data storage based on value and usage.

- AI-driven governance tools enforce quality, security, and compliance.

- Data observability tools are crucial for maintaining data health.
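A usage-based tiering rule can be as simple as mapping time since last access to a storage class. This sketch assumes 30- and 180-day thresholds and considers only usage, not business value, which real policies may also weigh; the dataset names are hypothetical.

```python
from datetime import date

# Hypothetical policy thresholds, in days since last access.
HOT_DAYS, WARM_DAYS = 30, 180


def choose_tier(last_accessed: date, today: date) -> str:
    """Map a dataset to a storage tier by how recently it was used."""
    age = (today - last_accessed).days
    if age <= HOT_DAYS:
        return "hot"   # fast, more expensive storage
    if age <= WARM_DAYS:
        return "warm"  # cheaper, somewhat slower storage
    return "cold"      # archival storage


today = date(2024, 6, 1)
last_seen = {
    "daily_sales": date(2024, 5, 30),
    "q4_report": date(2024, 1, 15),
    "logs_2021": date(2021, 12, 31),
}
for name, seen in last_seen.items():
    print(name, choose_tier(seen, today))
# daily_sales hot
# q4_report warm
# logs_2021 cold
```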

By integrating these ADM tools, organizations can significantly reduce the time and effort required for data management tasks, while simultaneously enhancing the reliability of their data analytics.

The rise of Retrieval-Augmented Generation (RAG) and domain-specific solutions highlights the importance of well-organized data. Data engineers are key in preparing data for AI tools and vector databases, which, in turn, power precise and responsive applications. This synergy between ADM and AI is not just a trend but a strategic imperative for businesses aiming to stay competitive in the data-driven market.
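Preparing text for a vector database typically starts with splitting documents into overlapping chunks before they are embedded. A minimal sketch, assuming fixed-size character windows; production systems often split on tokens, sentences, or document structure instead.

```python
def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into overlapping character windows for embedding."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk, at the cost of storing some text twice.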

The Importance of Real-time Data Processing

In the era of instant gratification, real-time data processing is not just a luxury but a necessity for businesses aiming to stay competitive. It allows businesses to analyze and act on data immediately, facilitating proactive decision-making and swift responses to market changes. However, the complexity of implementing such systems should not be underestimated.

- Complexity of Real-time Processing: Event ordering, event time processing, and handling late or out-of-order events are just a few of the challenges developers face.

- Data Consistency and Integrity: Maintaining consistency and integrity is crucial, yet challenging in a real-time environment.

- Performance Considerations: Optimizing data pipelines for performance is essential to handle large data volumes and complex logic.

- Cost: The investment in real-time processing infrastructure must be justified by the benefits it brings.
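To illustrate event-time processing with late and out-of-order events, the sketch below counts events in 60-second tumbling windows and uses a simple watermark (the highest event time seen so far minus an allowed lateness of 30 seconds) to decide when a window is closed. Both values and the function name are assumptions; stream-processing frameworks provide richer built-in versions of these mechanisms.

```python
from collections import defaultdict

WINDOW = 60            # seconds per tumbling window (assumed)
ALLOWED_LATENESS = 30  # how far behind the newest event time data is still accepted (assumed)


def window_counts(events):
    """Count events per event-time window, tolerating out-of-order arrival.

    `events` are (event_time_seconds, key) pairs in arrival order.
    Returns (counts, dropped), where counts maps window start -> count.
    """
    counts = defaultdict(int)
    dropped = []
    watermark = float("-inf")
    for event_time, key in events:
        # The watermark trails the highest event time seen so far.
        watermark = max(watermark, event_time - ALLOWED_LATENESS)
        window_start = event_time - event_time % WINDOW
        if window_start + WINDOW <= watermark:
            dropped.append((event_time, key))  # its window has already closed
            continue
        counts[window_start] += 1
    return dict(counts), dropped


arrivals = [(5, "a"), (70, "b"), (50, "c"), (130, "d"), (20, "e")]
print(window_counts(arrivals))  # ({0: 2, 60: 1, 120: 1}, [(20, 'e')])
```

The late event at t=50 still counts toward the first window, while the event at t=20 arrives after the watermark has passed that window and is dropped. Unlike a real engine, this sketch returns final counts instead of emitting each window as it closes.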

Furthermore, the integration of real-time data processing is pivotal for organizations that require timely insights. This capability supports a wide range of use cases, from real-time analytics to dynamic monitoring and decision-making.

The Rise of Cloud-Based Data Pipelines

Scalability and Flexibility in the Cloud

The cloud era has ushered in a transformative approach to data pipeline architecture. Cloud-based pipelines are designed for high scalability, allowing businesses to adjust resources with precision to meet fluctuating demands. This adaptability is crucial for handling the ebb and flow of data workloads, especially in an environment where data volumes are continuously growing.

- Separation of storage and compute: Cloud platforms enable the independent scaling of storage and compute resources, enhancing performance while maintaining cost efficiency.

- Resource elasticity: The ability to scale resources up or down on-demand ensures that organizations only pay for what they use, avoiding unnecessary expenses.

- Integration with cloud services: Leveraging the vast array of tools provided by cloud giants like AWS, GCP, and Azure simplifies the management of big data.
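Resource elasticity often comes down to a scaling rule. A minimal sketch, assuming a queue-backed workload where each worker processes a known number of records per second, computes how many workers are needed to clear the backlog within a target time and clamps the result to a budget. The function name and numbers are illustrative.

```python
import math


def desired_workers(backlog: int, per_worker_rate: float, target_seconds: float,
                    min_workers: int = 1, max_workers: int = 50) -> int:
    """Workers needed to drain `backlog` records within `target_seconds`, clamped to a budget."""
    needed = math.ceil(backlog / (per_worker_rate * target_seconds))
    return max(min_workers, min(max_workers, needed))


# 120,000 queued records, 500 records/s per worker, drain within 60 s.
print(desired_workers(120_000, 500, 60))     # 4
print(desired_workers(0, 500, 60))           # 1 (scaled down to the floor)
print(desired_workers(10_000_000, 500, 60))  # 50 (capped by max_workers)
```

The floor keeps the pipeline responsive to new data, while the ceiling is where the pay-for-what-you-use benefit meets a cost limit.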

The shift towards cloud computing is not just a trend; it’s a strategic move that aligns with the need for flexibility and accessibility in data management. As data pipelines become more integrated with cloud services, the benefits of real-time data processing and storage become increasingly apparent, offering a competitive edge to those who adopt them.

Aligning with the Shift Towards Cloud Computing

As the industry leans more toward cloud solutions, data management strategies are evolving to embrace the cloud’s inherent benefits. Cloud computing platforms like AWS, GCP, and Azure have become indispensable for data engineers, offering not just scalable resources but also a comprehensive suite of tools for big data handling. This trend is not only about scalability and accessibility; it’s about integrating real-time data processing capabilities that enable organizations to analyze and act upon data as it flows through their pipelines.

The move to cloud computing places a premium on flexibility in data processing and storage. For data professionals, this means that a solid understanding of cloud fundamentals, along with DevOps and CI/CD practices, is increasingly crucial. Certifications in cloud computing can significantly enhance one’s marketability in this rapidly changing landscape.

Embracing cloud computing is not just a technical upgrade; it’s a strategic move that aligns with the future of data management, ensuring that organizations remain agile and competitive in a data-driven world.

DataOps Adoption and Market Trends

The DataOps methodology is rapidly gaining traction as organizations strive for greater efficiency and collaboration in data management. Integrating DataOps principles is not just a trend; it’s becoming a standard practice for fostering synergy between data teams, developers, and operational staff, leading to a more streamlined data lifecycle.

The adoption of DataOps is indicative of a market that values agility and responsiveness in data strategies, ensuring that data pipelines are not only robust but also adaptable to the evolving needs of businesses.

The global Data Pipeline market is experiencing transformative trends, primarily driven by the increasing volume and complexity of data. Here are some key factors:

- The shift towards cloud-based data pipelines for enhanced flexibility and scalability.

- The integration of real-time data processing capabilities to support instantaneous analytics.

- The need for scalable and secure data integration processes to handle growing data demands.

These trends reflect a broader market movement towards innovative solutions that can accommodate the dynamic nature of data flows and the strategic importance of real-time decision making.

Ensuring Data Pipeline Reliability

High Scalability and Performance

In the realm of data management, high scalability is a cornerstone for ensuring that data pipelines can handle the ebb and flow of data demands. A robust data pipeline architecture must be capable of scaling both vertically and horizontally to meet the needs of an organization’s fluctuating data volumes and workloads. This includes the ability to scale across different geographic locations and adapt to seasonal spikes in data traffic.

The separation of storage and compute resources is a key design feature that allows each to scale independently. This not only boosts performance but also drives cost efficiency by avoiding over-provisioning. Individual data stores contribute as well: MongoDB, for instance, combines indexing and a native aggregation framework, which facilitate rapid queries and efficient analytics, with horizontal scalability.

Ensuring high availability and reliability is paramount. Data pipelines must be designed with fault tolerance in mind to maintain continuous operation even in the face of component failures or network issues.

Kafka represents another scalable solution. It handles large data loads by splitting each topic into partitions that consumers can process in parallel, and its replicated, fault-tolerant log keeps messages durable when individual brokers fail. This combination of throughput and durability enables efficient data flow and integration, which is critical for unlocking real-time insights and normalizing data for analytics.
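The partitioning idea can be sketched in a few lines: hashing a message key to a partition keeps all events for one key, in order, on a single partition, while different partitions are processed in parallel. This sketch uses CRC32 from Python's standard library as a stand-in hash and a hypothetical four-partition topic; Kafka's Java client uses murmur2 for keyed records.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 4  # assumed topic size


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Stable key -> partition mapping (CRC32 here as a stand-in hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


events = [("user-1", "login"), ("user-2", "click"), ("user-1", "click"), ("user-3", "logout")]
partitions = defaultdict(list)
for key, action in events:
    partitions[partition_for(key)].append((key, action))

# Every event for a given key lands in the same partition, preserving per-key order,
# while separate partitions can be consumed by separate workers in parallel.
for p in sorted(partitions):
    print(p, partitions[p])
```

The trade-off is that throughput for a single very busy key is limited to one partition, so key choice matters as much as partition count.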

Fault Tolerance in Distributed Systems

In the realm of distributed systems, fault tolerance is a cornerstone for maintaining data integrity and uninterrupted service. Data pipelines must be equipped with mechanisms that ensure continuous operation even when components fail. This includes features for error handling, automatic task retries, data recovery, and data integrity checks.

Ensuring fault tolerance in data pipelines is not just about preventing data loss; it’s about creating a resilient infrastructure that can adapt to and recover from unforeseen failures.

To achieve high fault tolerance, several strategies can be employed:

- Redundancy: Implementing multiple layers of redundancy to handle failures without affecting the overall system performance.

- Replication: Utilizing built-in replication features to maintain data availability in case of node failures.

- Backup: Regularly backing up data to prevent loss and enable quick recovery.

- Monitoring: Extensive monitoring and visibility to detect and address issues promptly.
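Checkpointing ties several of these strategies together. The sketch below, with hypothetical names, records the next batch to load and a SHA-256 checksum for each loaded batch, so a restarted run resumes where it stopped and loaded data can later be verified against its source. It assumes that writing a batch and updating the checkpoint happen atomically; in practice the checkpoint would be persisted durably rather than held in a dictionary.

```python
import hashlib
import json


def checksum(batch: list[dict]) -> str:
    # Canonical JSON so the same records always produce the same hash.
    return hashlib.sha256(json.dumps(batch, sort_keys=True).encode()).hexdigest()


def run(batches, sink: list, checkpoint: dict, fail_at=None):
    """Load batches in order, recording progress so a restart skips finished work."""
    for i, batch in enumerate(batches):
        if i < checkpoint.get("next_batch", 0):
            continue  # already loaded before the crash
        if i == fail_at:
            raise RuntimeError(f"simulated crash before batch {i}")
        sink.extend(batch)
        checkpoint["next_batch"] = i + 1
        checkpoint.setdefault("checksums", {})[i] = checksum(batch)


def verify(batches, checkpoint) -> bool:
    """Integrity check: every loaded batch still matches its recorded checksum."""
    return all(checksum(batches[i]) == h for i, h in checkpoint.get("checksums", {}).items())


batches = [[{"id": 1}], [{"id": 2}], [{"id": 3}]]
sink, state = [], {}
try:
    run(batches, sink, state, fail_at=2)  # first run crashes part-way
except RuntimeError:
    pass
run(batches, sink, state)  # restart resumes at batch 2; nothing is loaded twice
print([r["id"] for r in sink])  # [1, 2, 3]
print(verify(batches, state))   # True
```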

These strategies form the backbone of a reliable data pipeline, ensuring that data flows are not disrupted by system anomalies or external threats.

Operational Overhead and Real-time Monitoring

Managing real-time data pipelines introduces additional operational overhead compared to traditional batch-oriented ETL processes. Organizations must invest in robust monitoring tools to troubleshoot issues promptly and maintain high availability and reliability.

Performance considerations are paramount, especially when handling large volumes of data or complex processing logic. Efficient resource utilization and minimized processing latency are critical to the success of real-time data processing.

The cost implications of implementing and maintaining a real-time data processing infrastructure are significant. It’s essential to ensure that the benefits of real-time processing justify the investment. Below is a summary of the key considerations:

- Complexity of Real-time Processing

- Data Consistency and Integrity

- Operational Overhead

- Performance Considerations

- Cost

Furthermore, the integration of real-time data processing meets the demand for timely insights, enabling organizations to analyze and act upon data as it is generated.

Advanced Analytics and Data Platform Integration

Seamless Data Management with Modern Platforms

In the realm of data management, the adoption of modern data platforms has become essential. These platforms facilitate the integration of diverse data sources, offering a comprehensive view that is crucial for informed decision-making. The ability to connect to various databases, cloud services, and other data destinations underscores the versatility of these solutions.

Modern data platforms are not just about storage; they are about enhancing the utility of data. By leveraging advanced analytics tools, organizations can extract meaningful insights from their data, driving innovation and improving customer experiences. This is particularly important as businesses strive to stay relevant and competitive.

The trend towards cloud computing has brought about a need for data platforms that offer flexibility, scalability, and accessibility. These platforms align with the shift towards real-time data processing, enabling organizations to act upon data as it is generated, which is a significant advantage in today’s fast-paced environment.

Innovative Tools for Enhanced Insights

The landscape of data analytics is continually evolving, with innovative tools emerging to offer advanced capabilities for insight generation. These tools integrate artificial intelligence (AI) techniques such as machine learning, deep learning, and predictive analytics, enabling analysts to uncover patterns and trends that traditional methods might miss.

By embracing these advanced analytics tools, organizations can not only predict future trends but also optimize business processes through data-driven decisions.

Enhancing data visualization is a critical aspect of conveying insights. Effective communication through clear, concise, and visually appealing visualizations is essential, especially when addressing both technical and non-technical audiences. Here are some key considerations for enhancing data visualization:

- Creating interactive dashboards for exploring insights

- Employing advanced visualization tools for better clarity

- Ensuring the visualizations are accessible to a diverse audience

The integration of AI into the modern data stack has revolutionized the way we approach analytics, marking a convergence of analytics and data science practices. This integration allows for a more comprehensive analysis of large volumes of data, leading to actionable insights that can significantly impact organizational success.

Visibility and Performance Monitoring

In the realm of data management, visibility and performance monitoring are pivotal for ensuring the integrity and efficiency of data pipelines. Tools that provide a clear view into the pipeline’s operations enable data professionals to track key metrics and swiftly detect and address issues, thereby maintaining the health and throughput of the system.

- Monitoring dashboards for real-time analytics

- Alerts for immediate issue detection

- Workflow orchestration and resource allocation
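Alerting usually reduces to rules evaluated against the latest metrics. A minimal sketch, with hypothetical metric names and thresholds that a team would set from its own service-level objectives:

```python
import operator
from dataclasses import dataclass

OPS = {">": operator.gt, "<": operator.lt}


@dataclass
class AlertRule:
    metric: str
    op: str
    threshold: float
    message: str


# Hypothetical rules; real thresholds come from the team's own SLAs.
RULES = [
    AlertRule("error_rate", ">", 0.01, "More than 1% of records failed"),
    AlertRule("freshness_minutes", ">", 60, "Latest data is over an hour old"),
    AlertRule("rows_loaded", "<", 1, "Run loaded no rows"),
]


def evaluate(snapshot: dict[str, float]) -> list[str]:
    """Return a message for every rule the current metric snapshot violates."""
    fired = []
    for rule in RULES:
        value = snapshot.get(rule.metric)
        if value is None:
            fired.append(f"Metric missing: {rule.metric}")
        elif OPS[rule.op](value, rule.threshold):
            fired.append(rule.message)
    return fired


print(evaluate({"error_rate": 0.002, "freshness_minutes": 95, "rows_loaded": 12_400}))
# ['Latest data is over an hour old']
```

Treating a missing metric as an alert is a deliberate choice: a pipeline that stops reporting is often failing silently.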

The right set of tools can significantly reduce operational overhead by automating the monitoring process and providing actionable insights into pipeline performance.

Selecting a toolset that aligns with your data pipeline’s specific needs is crucial. It should offer extensive monitoring and visibility, along with features for error handling, data recovery, and validating data integrity. This ensures not only fault tolerance and reliability but also empowers operators to optimize performance based on real-time data.

Conclusion

In the rapidly evolving landscape of data management, efficient data pipelines stand as critical infrastructure for harnessing the power of data. The future of data management is being shaped by the advent of sophisticated tools that offer seamless integration, real-time processing, and augmented management capabilities. As organizations grapple with the increasing volume and complexity of data, the right data pipeline solution can be a game-changer, offering scalability, fault tolerance, and operational efficiency. The integration of automation, data governance, and security within these pipelines ensures that data integrity and compliance are maintained throughout the data lifecycle. As we look ahead, it is clear that the organizations that prioritize and invest in building efficient data pipelines will be well-equipped to drive innovation, make informed decisions, and maintain a competitive edge in the data-driven world of tomorrow.

Frequently Asked Questions

What are the key considerations when selecting a data pipeline tool?

When selecting a data pipeline tool, it’s important to consider features that optimize workflows, such as automation and orchestration capabilities, native data integration, scalability, performance, and ease of use. Ensuring the tool aligns with your organization’s data-driven goals and strategic initiatives is also crucial.

How do native data integration capabilities enhance data pipelines?

Native data integration capabilities provide a fast and efficient way to ingest, engineer, and maintain data from various sources, including cloud-based data stores. Features like drag-and-drop interfaces reduce the need for coding skills and simplify the data integration process, while automation ensures data remains up-to-date.

Why is data governance and security important in data pipeline solutions?

Data governance and security are critical in data pipeline solutions to ensure data integrity, compliance with regulations, and protection against breaches. These measures maintain trust in the data and safeguard sensitive information throughout the data lifecycle.

What role does real-time data processing play in modern data management?

Real-time data processing allows organizations to analyze data as it is being generated, providing near-instantaneous insights for quick decision-making and proactive responses. This capability is essential for staying competitive in a fast-paced business environment.

How are cloud-based data pipelines transforming the data management landscape?

Cloud-based data pipelines offer flexibility, scalability, and ease of access, which are essential for managing large and complex data sets. The shift towards cloud computing facilitates streamlined and scalable data integration processes, meeting the demands of modern data management.

What are the benefits of integrating advanced analytics with data platforms?

Integrating advanced analytics with data platforms enables seamless data management, providing a unified view of data for better decision-making. It also allows for the use of innovative tools that can deliver enhanced insights and drive strategic business outcomes.