Azure Data Lake is a robust and scalable solution for big data analytics that simplifies data management, governance, and advanced analytics execution. It integrates seamlessly with the Azure ecosystem, including AI and machine learning services, to empower organizations to derive meaningful insights from their vast data collections. This article explores the architecture, analytics capabilities, application development, security, and performance optimization in Azure Data Lake, providing a comprehensive guide for harnessing its power for advanced analytics.

Key Takeaways

- Azure Data Lake simplifies complex data processing and analytics, allowing for management of diverse data types at scale and integration with Azure’s advanced analytics services.

- Advanced analytics can be executed directly on data stored in Azure Data Lake using big data frameworks like U-SQL, Spark, and Hadoop, without the need for data movement.

- Developers can build intelligent applications with Azure Data Lake by leveraging integrated Azure AI and Machine Learning services for predictive analytics, NLP, and image recognition.

- Azure Data Lake ensures top-notch security and compliance with features like data encryption, compliance certifications, and robust identity management and access control.

- Cost and performance optimization in Azure Data Lake are achieved through scalable and reliable storage solutions, cost-effective data strategies, and performance tuning techniques.

Understanding Azure Data Lake Architecture

Core Components and Storage Solutions

Azure Data Lake is designed to store and analyze vast amounts of data in a scalable and secure manner. The core components of Azure Data Lake include a variety of storage solutions tailored to different data types and usage patterns. These solutions ensure that data is not only stored efficiently but is also readily accessible for analytics purposes.

- Azure Data Lake Storage: Scalable, secure data lake for high-performance analytics.

- Azure Blob Storage: Optimized for storing massive amounts of unstructured data.

- Azure Files: Provides simple, secure, and serverless enterprise-grade cloud file shares.

- Azure NetApp Files: Offers enterprise-grade file shares, powered by NetApp.

- Azure Disk Storage: Delivers high-performance, highly durable block storage.

Azure Data Lake integrates advanced security measures to prioritize the safety and integrity of your data, offering a customizable analytics experience.

Cost management is a critical aspect of Azure Data Lake, with pricing based on the storage you use and the transactions you perform. The lake itself does not impose a schema on stored files, so you can adapt your data as your application evolves, while shared access signatures and encryption at rest keep access secure.

Data Management and Governance

Effective data management and governance are critical for ensuring the integrity and security of data within Azure Data Lake. Centralizing data governance allows for consistent policy application and streamlines management across diverse data and analytic models. Azure Data Lake’s governance services include features like stream aggregators and deep linking, which facilitate the organization and retrieval of data.

- Ensure GDPR compliance and manage user access with a comprehensive data catalogue.

- Implement built-in security policies to protect data integrity.

- Expose data through endpoints to support downstream solutions such as ML and AI.

By establishing robust data management and governance protocols, organizations can accelerate innovation while maintaining compliance and security standards.

Partitioning and compaction strategies are essential for optimal table designs in data lakes, ensuring efficient data modeling and storage. With Azure Data Lake, IT teams can centrally manage permissions and automatically apply data sensitivity labels, democratizing data access and transformation at scale.
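As a rough illustration of the partitioning and compaction ideas above, the following sketch builds a date-based partition directory and groups small files into compaction batches. The function names, the `year=/month=/day=` layout, and the 256 MB target are assumptions chosen for illustration, not settings prescribed by Azure Data Lake.

```python
from datetime import date
from pathlib import PurePosixPath


def partition_path(root: str, table: str, event_date: date) -> str:
    """Return a Hive-style partition directory for one day's data (assumed layout)."""
    return str(
        PurePosixPath(root)
        / table
        / f"year={event_date.year:04d}"
        / f"month={event_date.month:02d}"
        / f"day={event_date.day:02d}"
    )


def plan_compaction(file_sizes_mb, target_mb=256.0):
    """Greedily group small files into batches of at most roughly target_mb each."""
    batches, current, current_size = [], [], 0.0
    for size in sorted(file_sizes_mb):
        # Close the current batch once adding this file would exceed the target.
        if current and current_size + size > target_mb:
            batches.append(current)
            current, current_size = [], 0.0
        current.append(size)
        current_size += size
    if current:
        batches.append(current)
    return batches
```

Each batch returned by `plan_compaction` would be rewritten as one larger file, which reduces the number of small files a query engine has to open.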

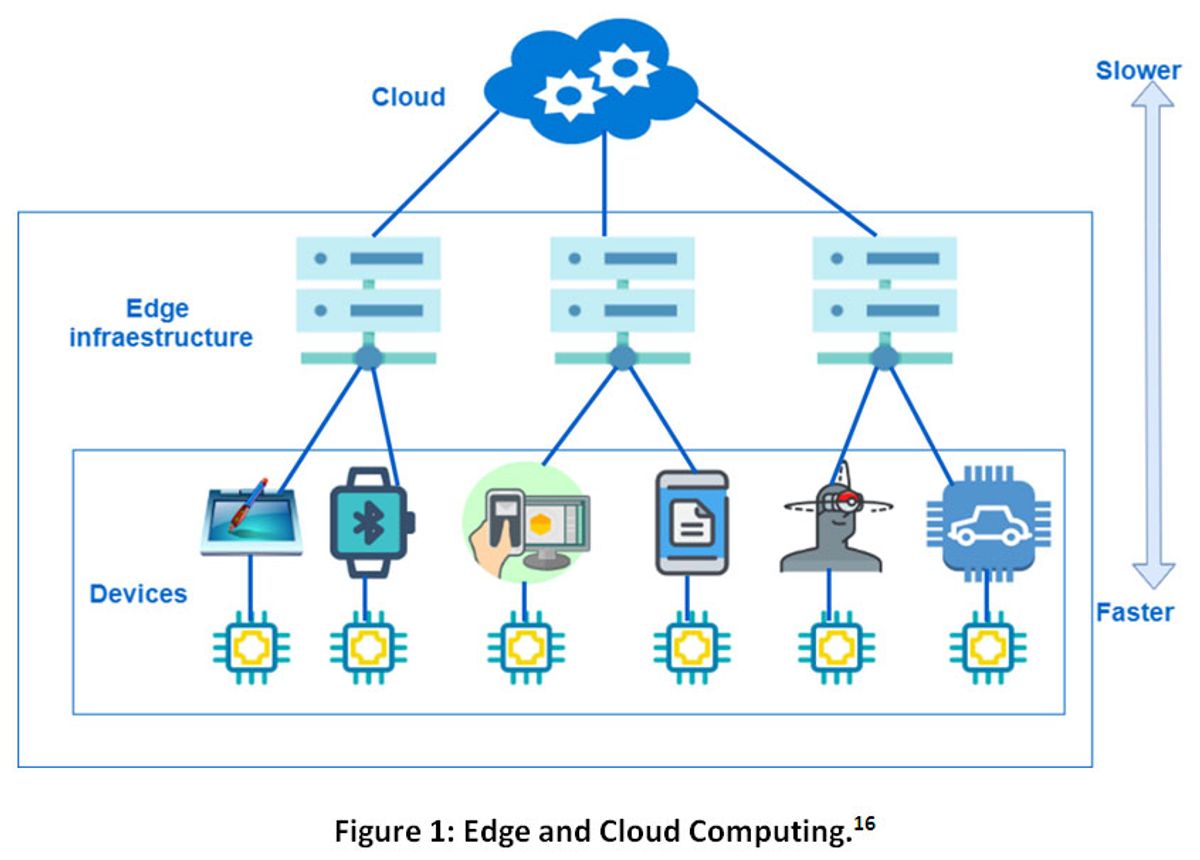

Integration with Azure Ecosystem

Azure Data Lake’s integration with the broader Azure ecosystem is a pivotal aspect of its architecture, enabling a seamless connection between storage solutions and advanced analytics services. Azure Data Lake works in conjunction with services such as Azure Machine Learning, Power BI, and Azure IoT Edge, providing a comprehensive environment for data processing and analysis.

- Microsoft Entra External ID (formerly Azure Active Directory External Identities): Consumer identity and access management in the cloud

- Microsoft Entra Domain Services: Manage your domain controllers in the cloud

- Logic Apps: Automate the access and use of data across clouds

- Service Bus: Connect across different messaging protocols

The integration capabilities extend to DevOps and developer tools, ensuring that data workflows can be automated and optimized for efficiency. For instance, Azure DevOps and Azure Monitor offer tools for code sharing, work tracking, and full observability into applications, which are essential for maintaining a robust data analytics pipeline.

By enabling Integration Runtime on Azure, organizations can configure Azure Integration Runtime within Azure Data Factory instances, ensuring connectivity to data stores and the smooth operation of data pipelines.

Executing Advanced Analytics on Azure Data Lake

Leveraging Big Data Frameworks

Azure Data Lake Storage (ADLS) is designed to handle massive volumes of data in a variety of formats, enabling businesses to run big data analytics workloads directly on the data stored within. By leveraging frameworks such as U-SQL, Spark, and Hadoop, Azure Data Lake simplifies the complex task of processing and analyzing large datasets.

Azure’s integration with big data frameworks allows for a seamless development experience, with tools and extensions available for environments like Visual Studio. This integration not only streamlines the analytics process but also empowers developers to build and manage jobs using familiar interfaces.

The following table outlines the key big data frameworks supported by Azure Data Lake and their primary use cases:

| Framework | Primary Use Case |
|---|---|
| U-SQL | Data processing and querying |
| Spark | In-memory processing and analytics |
| Hadoop | Distributed storage and processing |

By utilizing these frameworks, organizations can develop intelligent applications that provide actionable insights, enhancing their competitive edge in the market.

Real-time Analytics and Processing

Azure Data Lake is equipped to handle real-time analytics and processing, enabling businesses to gain instant insights and respond promptly to emerging trends. This capability is crucial for applications that require immediate data analysis, such as Internet of Things (IoT) monitoring, live dashboards, and anomaly detection systems.

- Azure Stream Analytics facilitates the real-time preparation and analysis of streaming data, integrating seamlessly with Azure Data Lake.

- Anomaly detection is automated within the Analytics Dashboard, highlighting unexpected patterns and driving actionable insights.

- Azure Data Factory (ADF) supports real-time data integration, allowing for the streaming ingestion and processing of data.
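To make the anomaly detection idea concrete, here is a minimal sketch of a rolling z-score detector over a stream of readings. It is a generic stand-in, not the algorithm used by Azure Stream Analytics or any Azure dashboard; the class name, window size, minimum history of five values, and threshold are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a sliding window of recent values."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.values = deque(maxlen=window)  # most recent readings only
        self.threshold = threshold          # z-score above which a value is flagged

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the recent window."""
        is_anomaly = False
        if len(self.values) >= 5:  # assumed minimum history before scoring
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.values.append(value)
        return is_anomaly
```

In an IoT scenario, each incoming sensor reading would be passed to `observe`, and flagged readings would be routed to an alert or a live dashboard.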

By scaling compute and storage resources on-demand, Azure Data Lake ensures that large volumes of data and complex analytical workloads are managed efficiently, without compromising performance.

The integration with Azure services, such as Azure Synapse Analytics, enhances the analytics lifecycle from data preparation to advanced reporting, creating a robust environment for real-time data operations.

Utilizing U-SQL, Spark, and Hadoop

Azure Data Lake integrates with a suite of analytics tools, including U-SQL, Spark, and Hadoop, to handle diverse and complex data workloads. U-SQL combines the familiarity of SQL with the expressive power of C#; note that it runs on Azure Data Lake Analytics, which Microsoft retired in February 2024, so it is mainly relevant to existing workloads. Spark and Hadoop offer robust frameworks for big data processing and analytics.

- Ingest Data: Built-in connectors and integration runtimes simplify data ingestion from a variety of sources.

- Transform Data: SQL and Spark-based transformations ensure data quality and consistency.

- Load Data: Flexible loading options allow for efficient data storage and analysis.

By leveraging these tools, organizations can perform analytics workloads directly on data stored in ADLS, enhancing the ability to process and analyze large datasets without data movement.
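The ingest, transform, and load steps above can be sketched in plain Python. This is only a conceptual outline using in-memory text rather than real connectors or Spark; the function names and the `order_id` and `amount` columns are hypothetical.

```python
import csv
import io
import json


def ingest(csv_text: str) -> list:
    """Parse raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list) -> list:
    """Drop rows with a missing amount and cast amount to float."""
    cleaned = []
    for row in rows:
        if not row.get("amount"):
            continue  # skip incomplete records to keep the data consistent
        cleaned.append({"order_id": row["order_id"], "amount": float(row["amount"])})
    return cleaned


def load(rows: list, sink) -> int:
    """Write rows as JSON Lines to a text sink and return the number written."""
    for row in rows:
        sink.write(json.dumps(row) + "\n")
    return len(rows)
```

In a real pipeline the sink would be a file in the lake and the transform would run in Spark or SQL, but the three-stage shape stays the same.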

The integration of Azure Data Lake with these analytics engines enables the development of intelligent applications. Utilizing Azure AI and Machine Learning services, developers can build applications that provide insightful analytics and intelligent services.

Developing Intelligent Applications with Azure Data Lake

Integration with Azure AI and Machine Learning

Azure Data Lake seamlessly integrates with Azure AI and Machine Learning services, enabling the development of sophisticated applications that can learn and adapt over time. The integration allows for the creation of custom ML models and the leveraging of pre-built AI services, enhancing the capabilities of data-driven solutions.

- Azure Machine Learning: Build, train, and deploy models from the cloud to the edge.

- Azure AI Studio: Develop generative AI solutions and custom copilots.

- Azure AI Search: Implement enterprise-scale search for app development.

By utilizing Azure’s AI and ML services, developers can infuse applications with intelligence, automate complex processes, and derive deeper insights from their data lakes. This empowers organizations to stay ahead in a rapidly evolving technological landscape.

The Azure ecosystem provides a variety of tools to support these integrations, such as Azure AI Translator for machine translation, Azure AI Vision for insights from images and videos, and Azure AI Document Intelligence for accelerated information extraction. These tools are designed to be accessible, with simple REST API calls for implementation, ensuring that developers can focus on innovation rather than infrastructure.

Building Predictive Analytics Models

Building predictive analytics models within Azure Data Lake involves a series of steps that transform raw data into actionable insights. Data scientists can perform tasks such as predictive modeling, anomaly detection, customer segmentation, and recommendation systems, all within the Azure ecosystem. The process typically begins with data ingestion from various sources, followed by data transformation to prepare it for modeling.

The integration of Azure Data Lake with Azure AI and Machine Learning services streamlines the development of predictive models, enabling organizations to forecast trends and behaviors effectively.

The model development phase leverages tools like Synapse Notebooks or SQL scripts, utilizing libraries such as MLlib or TensorFlow. Once the models are trained and evaluated, they can be deployed from the cloud to the edge, ensuring that insights are generated where they are most needed. Azure’s suite of analytics services, including Azure Analysis Services, Azure Data Lake Storage, and Azure Data Explorer, provides a robust environment for building, training, and deploying models.

To ensure the models are integrated seamlessly with AI services, it’s crucial to manage the resources involved in the solution and deploy them safely and responsibly. In healthcare, for example, this holistic approach to predictive analytics can help organizations predict disease outbreaks, allocate resources efficiently, and manage population health more effectively.

Creating Natural Language Processing and Image Recognition Services

Azure Data Lake’s integration with advanced AI capabilities facilitates the creation of sophisticated services that can understand and interpret human language and visuals. Natural Language Processing (NLP) and Image Recognition services are at the forefront of transforming data into actionable insights. These services enable businesses to automate complex tasks such as sentiment analysis, content moderation, and visual identification.

- Sentiment Analysis Services

- Voice Recognition and Processing Services

- Translation and Localization Services

- Workflow Automation Services

- Computer Vision Systems

By leveraging Azure’s cognitive services, developers can build applications that not only see and hear but can also understand and interact with the world in a more human-like manner.

The integration with Azure AI and machine learning platforms ensures that these services are not only powerful but also easy to implement and scale. This seamless connectivity allows for the continuous enhancement of applications, making them more intelligent over time.

Ensuring Security and Compliance in Azure Data Lake

Data Encryption and Protection

Ensuring the security of data within Azure Data Lake involves a comprehensive approach to encryption and protection. Azure Data Lake encrypts data both at rest and in transit: server-side encryption with 256-bit AES protects stored data, and Transport Layer Security (TLS) protects data moving over the network.

The service’s encryption capabilities are designed to be seamless, providing robust security without compromising on performance. Data is automatically encrypted before it is stored, and it is decrypted upon retrieval, ensuring that data is protected throughout its lifecycle.

Azure Data Lake’s encryption models are built to offer flexibility and control, allowing organizations to meet their specific security requirements while benefiting from the scalability and reliability of the cloud.

The following list outlines key aspects of Azure Data Lake’s data protection strategy:

- Encryption at rest and in transit

- Splitting and distributing data for additional security

- Utilizing state-of-the-art cryptographic techniques

- Ensuring secure connections with TLS

By implementing these measures, Azure Data Lake provides a secure environment for organizations to store and process sensitive data, including Protected Health Information (PHI), with the necessary care and compliance.
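On the client side, encryption in transit depends on the application refusing weak connections. The sketch below uses Python's standard `ssl` module to build a context that verifies certificates and requires TLS 1.2 or newer; the function name is hypothetical, and the choice of TLS 1.2 as the floor is an assumption rather than a documented Azure Data Lake requirement.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Create a client context that verifies certificates and requires TLS 1.2+."""
    context = ssl.create_default_context()  # enables certificate and hostname checks
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # reject older protocol versions
    return context
```

A context like this can be passed to HTTP clients that accept an `ssl.SSLContext`, so every request to the storage endpoint uses a verified, modern TLS connection.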

Compliance Standards and Certifications

Ensuring adherence to compliance standards and certifications is a cornerstone of Azure Data Lake’s security framework. Azure’s commitment to regulatory compliance is reflected in its support for a wide array of international and industry-specific standards. This robust compliance posture helps organizations meet their legal and regulatory obligations with greater ease.

Azure Data Lake integrates with Azure Policy Regulatory Compliance controls, providing a comprehensive list of compliance domains and security controls tailored for Azure Data Lake Storage Gen1. This integration streamlines the compliance process, making it transparent and manageable for enterprises.

Azure’s compliance with frameworks such as HIPAA for healthcare, PCI DSS for payment processing, and FedRAMP for government data, among others, ensures that companies can trust the platform to safeguard their sensitive data and maintain compliance with critical industry regulations.

The platform’s certifications, including HIPAA and various ISO standards like ISO 27017, ISO 27018, ISO 27001, and ISO 27701, demonstrate Microsoft’s dedication to security, compliance, and privacy. These certifications are not just badges of honor but are indicative of the stringent measures in place to protect customer data.

Identity Management and Access Control

In Azure Data Lake, identity management and access control are pivotal for safeguarding data and ensuring that only authorized personnel have access to sensitive information. Microsoft Entra ID (formerly Azure Active Directory, or AAD) plays a central role in managing identities and providing access controls, which are essential for maintaining a secure data environment.

- Microsoft Entra External ID (formerly Azure Active Directory External Identities): Consumer identity and access management in the cloud.

- Microsoft Entra Domain Services: Manage your domain controllers in the cloud.

Effective identity management keeps domains clearly separated and should be designed to remain workable as technology evolves.

It is crucial to establish robust policies and procedures that define clear boundaries between different domains or areas of responsibility within the system. This not only prevents unauthorized access but also facilitates incident response and forensics, should a security breach occur.
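One way to picture such boundaries is a POSIX-style access control list evaluated per user. The sketch below is a deliberately simplified model: it ignores masks, default ACLs, and role-based access control, and the `Acl` class and `is_allowed` function are hypothetical names, not part of any Azure SDK.

```python
from dataclasses import dataclass, field


@dataclass
class Acl:
    """Simplified POSIX-style ACL for one file or directory (illustrative only)."""
    owner: str
    owner_perms: str = "rwx"
    user_perms: dict = field(default_factory=dict)   # named user -> e.g. "r-x"
    group_perms: dict = field(default_factory=dict)  # named group -> e.g. "r--"
    other_perms: str = "---"


def is_allowed(acl: Acl, user: str, groups: set, action: str) -> bool:
    """Check whether user may perform action ('r', 'w', or 'x')."""
    if user == acl.owner:
        return action in acl.owner_perms
    if user in acl.user_perms:
        return action in acl.user_perms[user]
    # Fall back to any matching group entry, then to everyone else.
    matching = [perms for group, perms in acl.group_perms.items() if group in groups]
    if matching:
        return any(action in perms for perms in matching)
    return action in acl.other_perms
```

Evaluating the most specific entry first (owner, then named user, then group, then other) keeps each domain's permissions explicit and makes access decisions easier to audit after an incident.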

Optimizing Costs and Performance in Azure Data Lake

Scalability and Reliability

Azure Data Lake is engineered to handle the ever-growing data demands of modern businesses. It seamlessly scales to accommodate petabytes of data, ensuring that your storage capabilities grow with your needs. This scalability is not just about capacity; it also encompasses the system’s ability to maintain performance and reliability under varying loads.

- Scalability: Adapts to increasing or decreasing data loads without service interruption.

- Durability: Maintains multiple data copies to prevent loss.

- Availability: Provides redundancy options for continuous data access.

Azure Data Lake’s architecture is designed to be both robust and flexible, offering a foundation that supports a wide range of data types and analytics solutions.

Cost considerations are also integral to the platform’s design. Azure Data Lake ensures that you only pay for the storage and transactions you use, making it a cost-effective solution for businesses of all sizes. Because the hierarchical namespace treats operations such as renaming or deleting a directory as single metadata operations rather than per-file work, these operations stay fast even as data volumes expand.

Cost-effective Data Storage Strategies

Azure Data Lake Storage (ADLS) is designed to be a cost-efficient option for storing large volumes of data. By only charging for the storage used and the transactions performed, ADLS provides a competitive pricing model that includes multiple storage tiers—hot, cool, and archive—to optimize costs based on data access patterns.
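The tiering idea can be sketched as a simple rule that maps access recency to a tier and estimates the monthly storage cost. The day thresholds and per-GB prices below are placeholders invented for illustration; actual prices vary by region and change over time, and transaction and retrieval charges are left out.

```python
# Hypothetical prices in USD per GB per month, for illustration only.
ILLUSTRATIVE_PRICE_PER_GB = {"hot": 0.020, "cool": 0.010, "archive": 0.002}


def choose_tier(days_since_last_access: int) -> str:
    """Pick a storage tier from access recency (thresholds are assumptions)."""
    if days_since_last_access < 30:
        return "hot"
    if days_since_last_access < 180:
        return "cool"
    return "archive"


def monthly_storage_cost(size_gb: float, tier: str) -> float:
    """Estimate the monthly storage cost for a dataset in the given tier."""
    return size_gb * ILLUSTRATIVE_PRICE_PER_GB[tier]
```

Running a rule like this over a dataset inventory gives a first estimate of how much could be saved by moving rarely read data out of the hot tier.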

To further manage costs, organizations can leverage Azure’s best practices, such as choosing the correct resources and using Delta Lake for optimized data management. Simplifying data management is also crucial, as ADLS supports a hierarchical file system that is more manageable than flat storage systems, especially for large datasets.

Azure Data Lake’s scalability ensures that it can grow with your organization, providing the necessary resources to store and analyze data efficiently.

Azure offers various storage solutions tailored to different needs, including Archive Storage for rarely accessed data, Azure Elastic SAN for a cloud-native SAN experience, and Azure Container Storage for stateful container applications.

Performance Tuning and Optimization Techniques

Optimizing performance within Azure Data Lake is crucial for maintaining efficient data processing and analytics. Proactive performance tuning ensures that resources are utilized effectively, leading to faster insights and better cost management. Key areas to focus on include query optimization, resource scaling, and data partitioning strategies.

- Query optimization involves refining SQL queries to reduce latency.

- Resource scaling adjusts compute power based on workload demands.

- Data partitioning strategies help manage large datasets by dividing them into manageable chunks.

By regularly reviewing and adjusting these areas, organizations can maintain peak performance and avoid potential bottlenecks.
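Two of these techniques, partition pruning and resource scaling, can be illustrated with a short sketch. The function names, the per-worker throughput parameter, and the cap of 32 workers are assumptions for the example and do not correspond to a specific Azure setting.

```python
import math
from datetime import date


def prune_partitions(partitions, start: date, end: date):
    """Keep only the daily partitions that can contain rows in [start, end]."""
    return [p for p in partitions if start <= p <= end]


def workers_needed(scan_gb: float, gb_per_worker_hour: float,
                   deadline_hours: float, max_workers: int = 32) -> int:
    """Estimate how many workers are needed to scan scan_gb before the deadline."""
    needed = math.ceil(scan_gb / (gb_per_worker_hour * deadline_hours))
    return max(1, min(needed, max_workers))  # clamp to a sensible range
```

Pruning first reduces the volume that has to be scanned, which in turn lowers the number of workers the scaling estimate asks for.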

It’s also important to leverage Azure’s native tools and services for monitoring and diagnostics. These tools provide valuable insights into system performance and help identify areas for improvement. Additionally, referencing optimization recommendations from Microsoft Learn can guide best practices for specific Azure services like Azure Databricks.

Conclusion

In summary, Azure Data Lake stands as a robust and versatile platform that simplifies the complexities of big data analytics. By providing a comprehensive suite of tools for data ingestion, storage, and analysis, it enables organizations to handle diverse datasets with ease. The integration with Azure’s AI and Machine Learning services allows for the development of intelligent applications that can derive actionable insights, while its security features ensure data protection and compliance. Azure Data Lake’s seamless integration with other Azure services, such as Azure Machine Learning and Power BI, further enhances its capabilities, making it an indispensable asset for businesses looking to leverage advanced analytics to drive innovation and growth. As the data landscape continues to evolve, Azure Data Lake is well-positioned to meet the current and future demands of enterprises seeking to maximize the value of their data assets.

Frequently Asked Questions

What are the core components of Azure Data Lake?

Azure Data Lake’s core components include a scalable storage solution that supports big data analytics, integration with various Azure services for data processing and analytics, and built-in management and governance tools.

How does Azure Data Lake integrate with the Azure ecosystem?

Azure Data Lake integrates with Azure services like Azure Machine Learning, Power BI, and various big data frameworks such as U-SQL, Spark, and Hadoop to provide a comprehensive analytics platform.

Can Azure Data Lake handle real-time analytics?

Yes, Azure Data Lake supports real-time analytics and processing by leveraging big data frameworks and tools that allow for the quick analysis of data as it’s being ingested.

What security measures does Azure Data Lake offer?

Azure Data Lake provides robust security features, including data encryption at rest and in transit, compliance with various standards and certifications, and comprehensive identity management and access control.

How can Azure Data Lake help in developing intelligent applications?

Azure Data Lake is integrated with Azure AI and Machine Learning services, enabling the development of applications with capabilities like predictive analytics, natural language processing, and image recognition.

What are some strategies for optimizing costs and performance in Azure Data Lake?

To optimize costs and performance in Azure Data Lake, you can implement scalability and reliability measures, use cost-effective data storage strategies, and apply performance tuning and optimization techniques.